ANALYSIS: Why Apple Just Admitted Google's AI Won

What Apple choosing Google over OpenAI means for your AI strategy (Guest)

TL;DR: Apple announced in January 2026 that Google's Gemini will power the next generation of Siri and Apple Intelligence, replacing OpenAI's ChatGPT as the primary AI partner. The deal includes a custom 1.2 trillion parameter Gemini model running on Apple's Private Cloud Compute infrastructure, meaning user data never touches Google's servers. Rollout begins spring 2026 with iOS 26.4, affecting all Apple Intelligence-compatible devices.

Imagine a restaurant you love. Great food, solid menu, everything made in-house. Then one day, they started making their own bread.

The first loaves were okay. A bit dense, but hey, they were trying. Six months later, the bread got worse. Tough crust, gummy inside. By year two, you didn’t even touch it.

Then, all of a sudden, you walked in, and the bread was perfect! Crusty outside, soft inside, real flavor. You asked the owner what changed, and he told you:

“We partnered with the best bakery in the city,” he said. “We’re great at what we do. Bread isn’t it.”

That’s what just happened with Apple and Google.

Before we start, I’m curious, what’s your go-to AI tool?

After years of trying to build competitive AI, Apple admitted what many of us already knew: its AI wasn’t working. So they did what great leaders do when something isn’t working. They stopped pretending and partnered with someone who’s actually winning.

On January 12, 2026, Apple announced that Google’s Gemini will power the next generation of Siri and Apple Intelligence. Not ChatGPT. Not their own AI. Google.

This isn’t just a tech story. This is a signal about which AI tools are actually delivering results at scale. Today, Ilia Karelin breaks down what actually happened under the hood. Ilia writes Prosper, an AI newsletter about practical AI systems and workflows, teaching 400+ professionals to think better with AI. He’s Leadership in Change’s first two-time guest author after his excellent breakdown on Perplexity, and he’s back with the technical analysis every leader using Apple products needs to understand.


In this post, you’ll learn:

Why Apple chose Google’s Gemini over OpenAI after years of partnership discussions

What this strategic partnership reveals about which AI models are winning at scale

How to evaluate AI tools based on performance signals, not brand recognition

Why the AI landscape shifted dramatically in 2025-2026

What this means for leaders using Apple products in their organizations

Here it is…

Google Intelligence Is Coming Your Way In 2026 (If You Own iPhone & Mac)

Before we start, I wanted to share a couple of jokes I saw under MKBHD’s video about this announcement:

Turns out…Apple Intelligence is rebranding into Google Intelligence in 2026.

Here’s another one: “So AGI has finally been achieved. We are calling it APPLE GOOGLE INTELLIGENCE.”

Alright, let’s go back to being serious and learn something from this post.

People have been complaining about Siri for years. Apple tried to fix it by adding ChatGPT, and it didn’t work.

But what Apple just did might be the smartest move it could make.

The Gemini deal announced in January 2026 isn’t just surrender - it’s Apple playing to its strengths. They’re admitting: “We’re world-class at hardware and privacy infrastructure. Let Google handle the AI models.”

For iPhone users, this changes everything. Your Siri might finally work! But you need to understand what’s actually happening under the hood because it’s very interesting.

Note: When we say “iPhone users,” we mean all Apple Intelligence-compatible devices: iPhone 15 Pro or newer, iPad with M1 or newer, and Mac with M1 or newer. As we understand it, the Gemini integration applies across the entire ecosystem - your Siri gets upgraded whether you’re on iPhone, iPad, or Mac.

Why The iPhone Is Still The Best AI Hardware (Even If Siri Is Not As Good As We Want)

Apple was slow to catch up on AI software. Sure, that’s undeniable.

But their hardware? Unmatched.

The iPhone 15 Pro has a 16-core Neural Engine capable of nearly 35 trillion operations per second. The unified memory architecture means the CPU, GPU, and Neural Engine share the same memory pool, so data doesn’t have to be copied back and forth between them.

This matters because AI inference (running AI models to generate responses) is all about moving massive amounts of data through processors quickly. Apple’s tight integration between hardware and software means on-device AI runs faster and more efficiently than on any Android phone.

Apple’s ecosystem advantage compounds this. Your iPhone, Mac, iPad, and Apple Watch share data through iCloud. When you ask Siri something on your iPhone, it can pull context from Calendar, Messages, Photos, and Files synced across devices—though with some limitations. Health data from Apple Watch stays strictly on-device for privacy reasons, and cross-device queries add 1-3 seconds of latency as Siri fetches from iCloud.

Google Pixel phones and Samsung Galaxy devices also have strong AI hardware, but Apple’s 15-year ecosystem integration across iPhone, Mac, iPad, Watch, and more provides cross-device continuity that neither can fully match.

Integrating Gemini into Siri could actually be an amazing strategy for both.

Apple built the best platform for AI. They just couldn’t build the AI itself. So they’re focusing on what they do best (hardware, integration, privacy infrastructure) and outsourcing the AI models to the one company that has both capability AND strategic alignment with Apple’s existing business.

How AI Actually Runs on Your iPhone

Understanding where AI runs helps you know when your data leaves your phone.

Your iPhone has three ways to handle AI:

1. On your phone - fastest, most private, but limited capability

2. Apple’s secure cloud - smarter, encrypted so Apple/Google can’t see your data

3. Third-party services - ChatGPT/Gemini for specialized tasks

Think of it like cooking: On-device is your microwave (instant but limited). Private Cloud Compute is your full kitchen (takes longer, but can make anything). Third-party is ordering delivery (flexible, but data leaves your control).
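To make the three layers a bit more concrete, here is a rough Python sketch of how a query might be routed. Apple's actual routing logic isn't public, so everything here is an assumption for illustration: the `Tier` names, the keyword lists, and the `choose_tier` function are all made up.

```python
from enum import Enum


class Tier(Enum):
    ON_DEVICE = "on-device"
    PRIVATE_CLOUD = "private cloud compute"
    THIRD_PARTY = "third-party"


# Hypothetical keyword lists; the real routing rules are not public.
SIMPLE_PREFIXES = ("set a timer", "set an alarm", "open ")
THIRD_PARTY_HINTS = ("generate an image", "write a poem")


def choose_tier(query: str) -> Tier:
    """Pick the most private tier that can plausibly handle the query."""
    text = query.lower().strip()
    if text.startswith(SIMPLE_PREFIXES):
        return Tier.ON_DEVICE          # the microwave: instant, limited
    if any(hint in text for hint in THIRD_PARTY_HINTS):
        return Tier.THIRD_PARTY        # ordering delivery: needs permission
    return Tier.PRIVATE_CLOUD          # the full kitchen: everything else


for q in [
    "Set a timer for 10 minutes",
    "Summarize my week across Calendar and Mail",
    "Generate an image of a bakery",
]:
    print(f"{q!r} -> {choose_tier(q).value}")
```

The point of the sketch is the ordering: try the most private option first, and only fall back to a less private one when the request needs it.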

Layer 1: On-Device Processing

AI runs entirely on your iPhone’s chip. No internet. Complete privacy.

Apple compresses large AI models using quantization (reducing number precision), pruning (removing unimportant connections), and palettization (grouping similar values). The result: a 3 billion parameter model that runs instantly, uses minimal battery, and works offline. In today’s numbers - this model is tiny.

What runs here: Face ID, photo recognition, typing predictions, simple Siri commands (”Set a timer”).

Trade-off: 3 billion parameters is roughly 100x smaller than cloud models. You lose reasoning power but gain speed and privacy.
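If you want to see what quantization does in practice, here is a minimal Python sketch. It is not Apple's pipeline: the 4-bit symmetric scheme, the function names, and the sample weights are assumptions picked for illustration. It rounds float weights to small integers and estimates how much memory a 3 billion parameter model needs at two precisions.

```python
def quantize(weights, bits=4):
    """Symmetric linear quantization: map floats to small signed integers."""
    qmax = 2 ** (bits - 1) - 1                     # 7 for 4-bit
    scale = max(abs(w) for w in weights) / qmax    # one scale for the group
    if scale == 0:
        scale = 1.0                                # all-zero weights
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in q_weights]


def model_size_gb(num_params, bits_per_param):
    """Raw weight storage in gigabytes (ignores metadata and activations)."""
    return num_params * bits_per_param / 8 / 1e9


weights = [0.12, -0.70, 0.35, 0.01]                # made-up sample values
q, scale = quantize(weights, bits=4)
restored = dequantize(q, scale)
print(q)          # small integers instead of floats
print(restored)   # close to the originals, but not identical

size_fp16 = model_size_gb(3e9, 16)   # 6.0 GB at 16-bit
size_int4 = model_size_gb(3e9, 4)    # 1.5 GB at 4-bit
print(size_fp16, size_int4)
```

Notice that the parameter count stays at 3 billion in both cases. Quantization shrinks how many bits each number takes, which is why the model fits on a phone, at the cost of a small rounding error per weight.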

Layer 2: Private Cloud Compute

When queries are too complex, iOS routes them to Apple’s Private Cloud Compute - custom Apple Silicon servers in Apple’s data centers.

What makes it different: Servers are stateless (process request, send response, wipe everything). End-to-end encryption means even Apple engineers can’t see your queries. Cryptographic attestation verifies genuine Apple software before your phone sends anything.

This is where Gemini will run when it integrates with Siri in 2026. Google’s model, Apple’s infrastructure. Your data never touches Google’s servers.

What runs here: Complex queries, multi-app synthesis, Gemini-powered features (starting spring 2026).
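The "verify before sending" idea can be sketched in a few lines of Python. This is a heavy simplification and not Apple's protocol: real attestation relies on signed, hardware-backed measurements, while this sketch only compares a SHA-256 hash against a trusted list. The build names, `TRUSTED_MEASUREMENTS`, and `send_if_attested` are hypothetical.

```python
import hashlib


def measure(software_image: bytes) -> str:
    """Fingerprint a server software image with SHA-256."""
    return hashlib.sha256(software_image).hexdigest()


# Hypothetical list of fingerprints the phone is willing to trust.
TRUSTED_MEASUREMENTS = {measure(b"pcc-release-build-1")}


def send_if_attested(query, server_measurement, trusted=TRUSTED_MEASUREMENTS):
    """Release the query only if the server runs software we recognize."""
    if server_measurement not in trusted:
        return None                    # refuse: nothing leaves the phone
    return f"sent: {query}"


genuine = measure(b"pcc-release-build-1")
tampered = measure(b"pcc-release-build-1-with-logging")

print(send_if_attested("What's on my calendar?", genuine))
print(send_if_attested("What's on my calendar?", tampered))
```

The design choice worth noticing is that the check happens on the phone, before the request goes out, so a server running unexpected software never receives the query in the first place.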

Layer 3: Third-Party Cloud

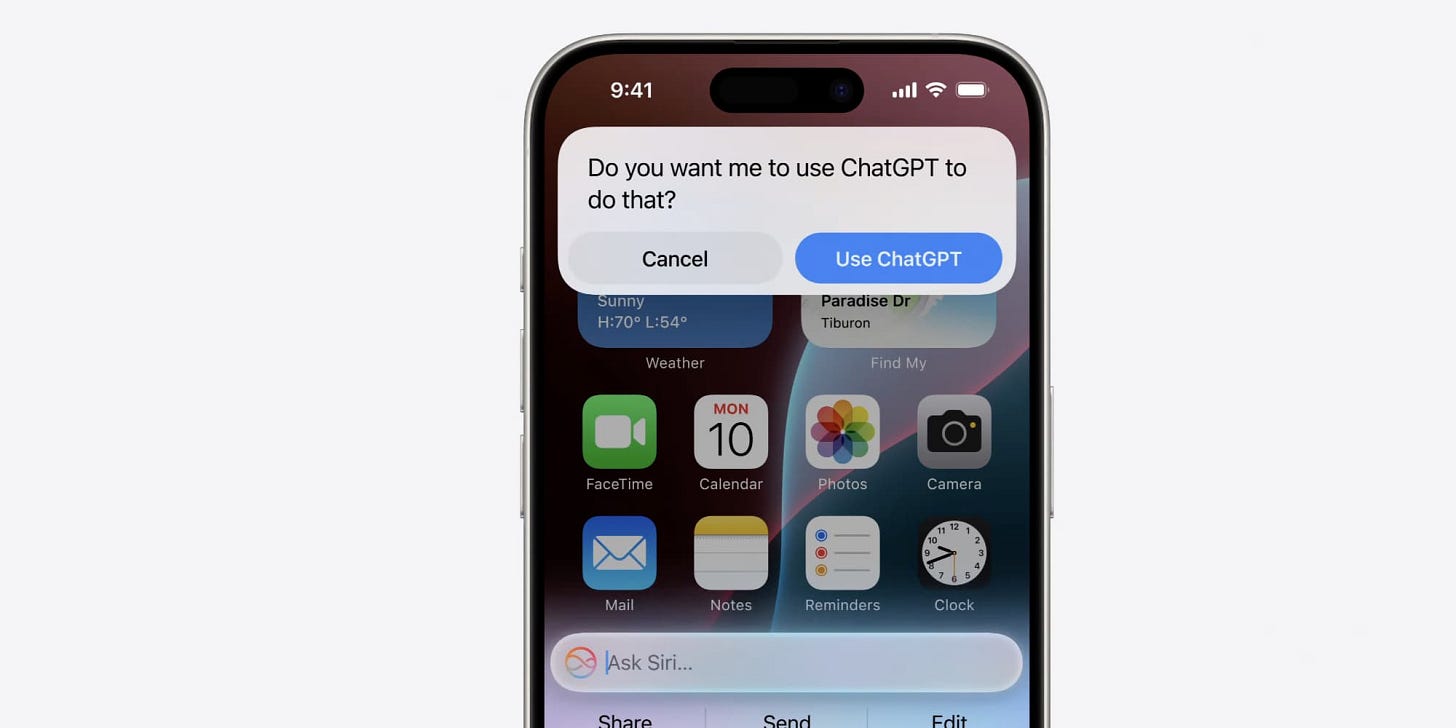

When Apple’s systems can’t handle a request, Siri asks permission to route to ChatGPT. Apple strips identifying information first - OpenAI gets the question but not your name, email, or device ID.

What routes here: Image generation, highly specialized queries, creative writing.
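Here is a small Python sketch of that "ask permission, then strip identifiers" step. The field names and the request shape are assumptions for illustration; the actual payload Apple forwards isn't documented here.

```python
# Hypothetical set of fields treated as identifying.
IDENTIFYING_FIELDS = {"name", "email", "device_id"}


def anonymize(request: dict) -> dict:
    """Return a copy of the request without identifying fields."""
    return {k: v for k, v in request.items() if k not in IDENTIFYING_FIELDS}


def route_to_third_party(request: dict, user_approved: bool):
    """Forward an anonymized request only if the user said yes."""
    if not user_approved:
        return None                    # no permission, nothing is sent
    return anonymize(request)


request = {
    "name": "Alex",
    "email": "alex@example.com",
    "device_id": "ABC-123",
    "question": "Write a limerick about sourdough",
}

print(route_to_third_party(request, user_approved=True))
print(route_to_third_party(request, user_approved=False))
```

In this sketch the third party gets the question and nothing else, and the original request is left untouched on the device.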

The key insight: Three privacy levels - on-device (zero data leaves phone), Private Cloud Compute (encrypted on Apple servers), third-party (anonymized external request).

Why Apple Couldn’t Build Great AI

Compression isn’t the core problem. Modern quantization reportedly retains 90%+ of a model’s capability while cutting its memory footprint several times over. Apple’s real bottleneck is training data: its privacy stance limits access to web-scale datasets, so its 3B-parameter models never had Google’s starting knowledge base to begin with.

Apple’s privacy-first approach meant smaller on-device models. But the bigger challenge was timing.

Apple committed to privacy constraints even before the LLM breakthrough. By the time ChatGPT proved conversational AI was possible, Apple’s architecture was built around different assumptions. Even in 2021, Apple was still focused on helping customers control their data (Link).

The Gemini deal is Apple’s pragmatic response: leverage their hardware and privacy infrastructure, and partner with the best for the AI models. It’s a strategic pivot that plays to strengths rather than trying to catch up in an area where Google has multi-year advantages.

Does ChatGPT Get Used As Much As We Think?

Despite seeming like a logical fix, ChatGPT through Siri hasn’t gained significant traction.

Apple insiders report that real-world usage of Apple Intelligence features remains low, with the ChatGPT integration “buried fairly deep in the background of most Siri queries.” The infrastructure tells the story: Siri handles approximately 830 million daily queries compared to ChatGPT’s 210 million. The integration hasn’t driven that kind of traffic increase.

Three possible reasons why:

It’s slower than using the ChatGPT app directly. Routing through Apple’s anonymization layer adds latency. The ChatGPT app is faster.

No conversation persistence. You ask a question, get an answer, that’s it. There’s no conversation thread like ChatGPT’s native app provides. You can’t easily say “tell me more about that” or “now explain it for a 10-year-old.”

Permission friction. Every complex query prompts “Allow ChatGPT?” The first few times, this feels like good privacy practice. By the tenth time, it’s annoying. Power users just open the ChatGPT app instead.

The Gemini Deal Explained (What’s Actually Happening)

On January 12, 2026, Apple and Google announced a multi-year partnership: Google’s Gemini models would power Apple’s next generation of AI features.

Here’s what’s actually happening under the hood.

The Deal Structure

Google is building a custom 1.2 trillion parameter Gemini model specifically for Apple. That’s roughly 400 times larger than Apple’s on-device models and competitive with the largest models Google and OpenAI run.

Most tasks will probably still run through Apple’s own LLMs (the small ones I mentioned). But more complex tasks will go to Gemini.

Your data never touches Google’s servers. Queries route through Apple’s infrastructure.

The estimated cost is roughly $1 billion spread across multiple years. Compare that to the $20 billion per year Google pays Apple to be Safari’s default search engine. This is a rounding error on their existing financial relationship.
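A quick back-of-the-envelope check of those numbers in Python. The figures come straight from this post (1.2 trillion vs 3 billion parameters, roughly $1 billion total vs $20 billion per year); the variable names are just mine, and since the exact deal length isn't stated, the sketch compares the whole deal against a single year of search payments.

```python
gemini_params = 1.2e12        # custom Gemini model
on_device_params = 3e9        # Apple's on-device model

size_ratio = gemini_params / on_device_params
print(f"Gemini is about {size_ratio:.0f}x larger")        # about 400x

deal_total_usd = 1e9          # estimated, spread across multiple years
search_per_year_usd = 20e9    # Safari default search payment, per year

share = deal_total_usd / search_per_year_usd
print(f"Whole deal = {share:.0%} of one year of search payments")  # 5%
```

So even before spreading the cost over several years, the entire Gemini deal comes to about 5% of what Google pays Apple for search in a single year.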

Timeline and Features

Spring 2026: iOS 26.4 launch (March)

WWDC June 2026: Advanced features preview

Fall 2026 (next iPhone launch): Full rollout

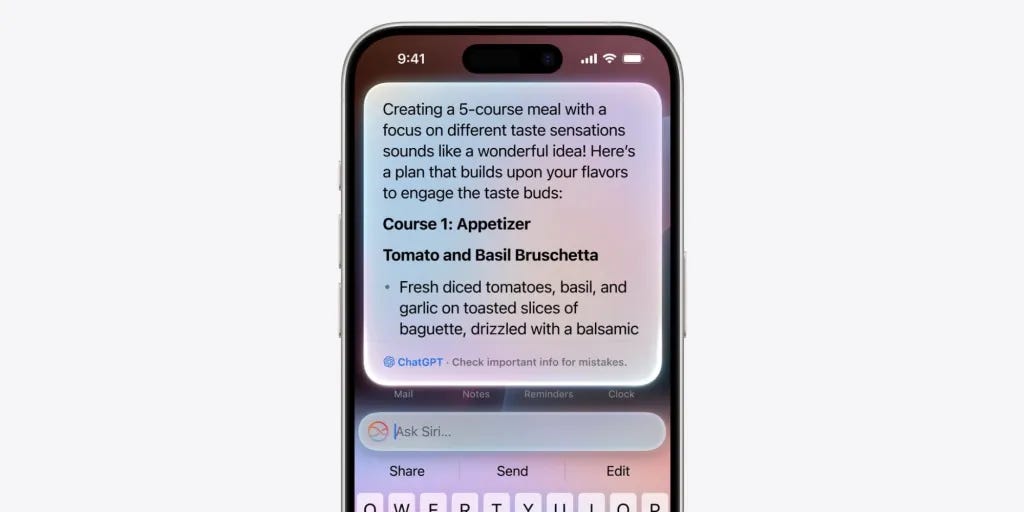

What Changes For You (if you own an iPhone)

Gemini-powered Siri will handle things current Siri can’t:

Ambiguous requests with context: “Text my boss about the meeting” → Siri understands which meeting from your calendar, which contact is your boss, and what you discussed last time (Link).

Multi-app synthesis: “What’s my schedule this week and should I adjust my workout plan given my workload?” → Combines Calendar, Health, and Reminders data to give comprehensive answers (Link).

Content generation: Writing emails, drafting documents, creating responses in your voice (Link).

Emotional intelligence (rumor): Recognizing when you’re stressed or asking for help with something sensitive and responding with appropriate empathy (Link).

Limited conversations (rumor): You can ask follow-up questions and Siri maintains context within a session (Link).

These capabilities exist on other platforms, but only as standalone apps (like Google Gemini itself). Apple’s integration means Siri becomes the default interface for everything. You won’t need to open separate apps.

Why Google Over ChatGPT?

3 strategic reasons:

1. Search integration. Gemini is intrinsically connected to Google Search - trillions of indexed pages, real-time web understanding, constantly updated information. For a voice assistant answering factual questions, this is a massive advantage.

2. The $20 billion relationship. Google pays Apple $20 billion per year to be Safari’s default search engine. This is Google’s most important distribution deal and Apple’s single largest source of services revenue.

3. Performance. Gemini excels at real-time knowledge through Google Search integration and responds significantly faster (5 seconds vs 25 seconds for web lookups). While ChatGPT leads in complex reasoning and conversational quality, Gemini’s speed and live search capabilities make it better suited for quick voice assistant queries - and this is what I personally would use the new Siri for.

OpenAI spent considerable resources integrating with Apple, believing it would be their primary distribution channel to hundreds of millions of iPhone users. I remember people freaking out about this, saying that OpenAI was about to exponentially increase its distribution. Instead, they’re relegated to secondary status.

What This Means for You

The good news: Your Siri will finally work when Gemini rolls out in spring 2026. Complex queries get conversational answers instead of “I don’t understand.” No separate apps needed - Siri handles it using Apple’s best-in-class hardware and privacy infrastructure (hopefully).

The trade-offs: More queries leave your device (encrypted through Private Cloud Compute). Full features likely require iPhone 15 Pro or newer. Apple-Google entanglement deepens.

This deal raises bigger questions about who controls AI infrastructure across the industry.

The Bigger Picture

This isn’t just about Siri. It’s about who controls AI infrastructure for the next decade. Do you know how many people in the world own an iPhone? Exactly.

Google will power AI on both Android AND iOS. Gemini runs Samsung’s Galaxy AI and will power Apple’s Siri. Hopefully, not too much data will be going over to Google, but this still gives Google unprecedented data about how humans interact with AI and leverage over competitors.

Thanks, Ilia!

The Bottom Line

Apple couldn’t build competitive AI despite a decade of trying. The Gemini deal is them playing to their strengths: world-class hardware and privacy infrastructure, with Google’s AI models running on top. Gemini will be staying under the hood this time, and Siri will be at the front of it all.

For iPhone & Mac users: Your Siri will finally work when Gemini rolls out in spring 2026. You get Google’s AI capability with Apple’s privacy protections.

The AI assistant you’ve wanted on your iPhone for 15 years is finally coming. Just not the way anyone expected.

The world’s best hardware maker just told you which AI actually works. Apple spent years trying to build competitive AI, failed, and chose Google over OpenAI. That’s not a tech decision. That’s a performance signal.

The AI landscape shifted. ChatGPT dominated 2023, but Claude and Gemini caught up in 2025-2026. I switched completely to Claude because it delivers better results for complex analysis and strategic thinking. Apple chose Gemini for speed and search integration. Both choices signal the same thing: performance matters more than hype.

If you lead with Apple products, your tools improve dramatically starting spring 2026. More importantly, you just learned how to read strategic partnerships when evaluating AI for your organization.

If You Only Remember This:

When the world’s best hardware maker admits their AI doesn’t work and partners with a competitor, that’s your signal about which models are actually winning

Apple chose Google’s Gemini over OpenAI after years of discussions—performance-based, not rushed

The AI landscape shifted in 2025-2026: Claude and Gemini caught up to ChatGPT and pulled ahead in specific areas

Question for you: If you use Apple products daily and Siri suddenly works as well as Claude or Gemini starting spring 2026, does that change which AI tool you default to?


