The Empathy Gap in AI Implementation

Closing the Gap Between AI and Your Team (Guest)


“The AI tool will fix that.”

I’ve heard this exact phrase at least fifty times this year. And every single time, I ask the same question: “Fix what, exactly, and how?”

This is the pattern I’ve watched play out all year.

Leaders buy the tool

Deploy the tool

Wonder why nobody’s using the tool… 🫣

MIT research found that only 5% of corporate AI pilots actually deliver real business value. That means 95% show no measurable impact. As we close out 2025, that statistic should make every leader pause.

Colette Molteni gets why this happens. She founded Empathy Elevated, where she helps tech and business leaders develop emotional intelligence in an AI-driven world. I’ve been a fan of her work on innovation and empathy for some time now and wanted to introduce her to each of you.

I invited Colette Molteni to write this because as we head into 2026, leaders are making New Year plans for AI adoption… and most of those plans will fail for the same reason they failed this year: they’re missing the human element.

The gap isn’t technical. It’s empathy.

In this post, you’ll learn:

Why 95% of AI implementations fail (and it’s not the technology)

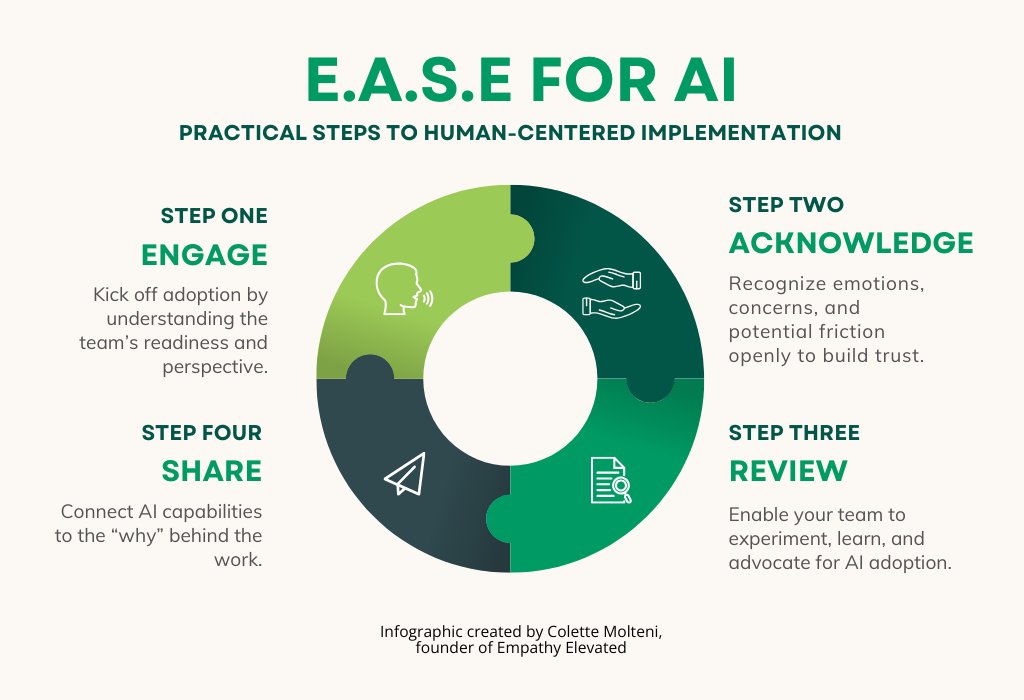

The E.A.S.E. for AI Framework: a four-step process for human-centered adoption

How to measure success beyond analytics and adoption rates

If you’re planning AI implementations for 2026, read this before you buy another tool.

Here is Colette…

“The AI xyz tool will fix that!”

Have you heard that? Where is the data? What specific area of the problem does it address?

I’ve heard it too many times.

“We had this problem, and then we implemented this new AI tool.”

But what was the outcome?

Could we step back and use an AI tool proactively: get specific, drill down to the root cause, and empathize with the end users who will ultimately rely on it for a beneficial outcome?

It starts with closing the gap, the empathy gap, that is.

The Empathy Gap in AI Adoption

What is the empathy gap? Simply put, it is a misalignment between AI adoption and end users’ experience.

In one revealing example from the University of Queensland Business School’s research, an organization launched a machine learning project to predict home loan defaults, yet never brought the existing actuarial team into the conversation. The actuaries felt overlooked, the IT department was sidelined, and ultimately, the tool, even though technically sound, failed because those who mattered weren’t engaged. This wasn’t a data problem. It was a human one.

The people who were supposed to trust, use, and sustain the system felt it was imposed on them, not built with them. If we implement AI without first listening to users, without asking how the change will affect their identity, sense of purpose, or workflow, we create the very gap that good technology is meant to bridge.

Introducing the E.A.S.E. for AI Framework

MIT research recently revealed that only 5% of corporate AI pilots are actually delivering real business value, while the other 95% show no measurable impact on the bottom line. For leaders, this isn’t just a number to see flash across your screen, but rather a warning that most AI initiatives fail because they overlook the people and processes they’re supposed to help.

Let’s talk about the E.A.S.E. for AI framework, which you can leverage in your next AI implementation, regardless of your role.

E: Engage

Kick off adoption by understanding readiness and perspective.

15-minute AI Readiness Huddles with 4 quick questions on comfort, benefits, barriers, and support needs.

Collect pulse data via short surveys to spot trends or friction points.

Example: Weekly huddles at a nonprofit revealed duplicated workflows, prompting pre-rollout adjustments.
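If you want to tally huddle or survey responses rather than eyeball them, here is a minimal sketch. It assumes each person rates the four huddle topics on a 1-to-5 scale where higher is better (so a low "barriers" score means many barriers). The field names, the scale, and the threshold of 3.0 are illustrative assumptions, not part of the E.A.S.E. framework itself.

```python
from statistics import mean

# Hypothetical pulse data: one dict per respondent, rating each topic 1 (low) to 5 (high).
# Field names and the 1-5 scale are assumptions made for this illustration.
responses = [
    {"comfort": 4, "benefits": 5, "barriers": 2, "support": 3},
    {"comfort": 2, "benefits": 4, "barriers": 1, "support": 2},
    {"comfort": 3, "benefits": 4, "barriers": 2, "support": 4},
]

def summarize(responses, threshold=3.0):
    """Return the average score per topic and the topics averaging below the threshold."""
    topics = responses[0].keys()
    averages = {t: mean(r[t] for r in responses) for t in topics}
    friction = [t for t, avg in averages.items() if avg < threshold]
    return averages, friction

averages, friction = summarize(responses)
for topic, avg in averages.items():
    print(f"{topic}: {avg:.1f}")
print("Possible friction points:", friction)
```

A flagged topic is a prompt for a conversation in the next huddle, not a verdict. The numbers only tell you where to listen more closely.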

A: Acknowledge

Recognize emotions and potential friction openly.

1-minute Emotional Audit in launch meetings: the team shares one word for how they feel.

Publicly name likely loss points to normalize concerns.

Example: Tech team openly discussed fears of AI replacing work, reducing resistance.

S: Share

Connect AI capabilities to mission outcomes.

Publish a 1-page “Human Why” brief linking the tool to tangible team or stakeholder benefits.

Include visuals such as workflow diagrams and before-and-after comparisons.

Example: Hospital AI triage brief showed reduced wait times and freed nurses for high-value care.

E: Empower

Enable experimentation, learning, and advocacy.

Short learning sprints and rotating AI Ambassador roles.

Track recognition metrics to celebrate mastery and small wins.

Example: Marketing agency ambassadors ran a lunch-and-learn, boosting adoption by 40% in two months.

Applying & Measuring E.A.S.E. in Your Organization

I’ve seen this methodology unofficially leveraged in a recent AI deployment with a team I work closely with. It inspired me to use it fully in a current AI implementation I am undertaking with my immediate team.

The team I saw leverage this engaged early with their stakeholders. They listened and asked open-ended questions to get to the root of their challenges. They did not assume; they empathized. My team is currently at this stage, figuring out barriers and gathering data points.

The team I witnessed addressed any potential “loss” up front. In this case, the change was that a leader would no longer need to listen to a call recording all the way through and could instead see highlights of the sentiment in their direct reports’ engagement. Not really a loss at all.

The team did not create a “human why” document directly, but did explain the human impact of being able to reflect and receive immediate feedback from customer interactions. As a pillar of my team’s implementation, we will home in on the partnership between AI and humans to free up more time for creative and interactive endeavors.

The team empowered users immediately by providing ample training and office hours. Training has now continued with weekly huddles for additional mini implementations with the AI-driven tool and others. I am not even in that department, and I have started joining the calls!

Success has been measured by the use of the tool and the analytics provided directly, and by anecdotal feedback streaming in. Even more exciting? The implementation was so successful that another department is looking to replicate it!

Bridging the Empathy Gap, One AI Implementation at a Time

The gap between tech capability and human adoption isn’t just a stat; it’s the difference between the AI implementations that drive value and those that flail. But this isn’t a technology problem; it’s a human one. And humans, unlike algorithms, don’t just need optimization; they need connection.

Let’s be brutally honest: AI tools don’t “fix” anything if they’re deployed like parachute drops into hostile territory. The E.A.S.E. for AI framework isn’t just another obnoxious acronym (who needs more?!). It’s your survival guide for making AI implementations stick when everything else has failed.

I’ve seen teams transform from AI skeptics to evangelists not because the technology improved, but because the implementation approach honored their expertise rather than replaced it. The teams that thrive don’t just deploy AI; they deploy empathy as the infrastructure that supports it.

The question isn’t whether your organization needs AI; that ship has sailed. The question is whether you will be in the 5% who succeed or the 95% who fail. The difference lies not in which AI tool you choose, but in how deeply you engage the humans who’ll bring it to life. Start with the E.A.S.E. for AI framework, and end with excellence. Close the empathy gap.

New subscribers to Empathy Elevated will receive the E.A.S.E. for AI Implementation Cheat Sheet in their welcome email — your pocket guide to human-centered AI adoption that actually works.

Thank you, Colette Molteni!

Remember, AI tools don’t fix anything if they’re deployed like parachute drops into hostile territory.

As you plan for 2026, ask yourself this: are you buying AI tools to solve problems, or are you listening to your people first to understand what problems actually need solving?

The teams that thrive don’t just deploy AI. They deploy empathy as the infrastructure that supports it.

If You Only Remember This:

Only 5% of corporate AI pilots deliver real business value; most of the other 95% overlook the people and processes they’re supposed to help

The E.A.S.E. Framework (Engage, Acknowledge, Share, Empower) bridges the gap between tech capability and human adoption

AI implementations succeed when they honor expertise rather than replace it

Humans, unlike algorithms, don’t just need optimization. They need connection.

One question as we head into 2026: What’s one AI tool you’re planning to implement next year, and have you talked to the people who’ll actually use it?

Drop your answer in the comments.



Many AI discussions stop at AI + Human. That was the dominant narrative in 2025. "AI is an assistant" that complements human capabilities. In practice, this usually means companies buying Copilot seats and giving everyone a short training delivered by a Big4 consultant.

The real shift in 2026 starts with AI × Human. This is not addition, it is multiplication. Roles change. Decision rights move. Human judgment becomes the scarce asset. The system behaves differently, not just faster.

Most AI programs fail because leaders design for AI + Human, while the organization experiences AI × Human effects. That gap creates fear, resistance, and silent disengagement.

Adoption is not a training issue. It is a system design issue.

Most leaders confuse 'Empathy' with 'Sentimentality.' They are wrong.

In the context of AI implementation, Empathy is an engineering constraint. It is the understanding of your layer-1 infrastructure: The Humans (Wetware).

If you deploy high-velocity silicon (AI) onto low-trust wetware without an interface strategy, the system rejects the transplant. The 'Gap' you describe isn't an emotional failure; it is an architectural failure.

You cannot upgrade the code if you despise the compiler.

What we need to do is fix the interface and then upgrade the code.