If Your AI Never Disagrees, You're Using It Wrong

What Leaders Need to Know About AI Confirmation Bias in 2026

TL;DR: AI confirmation bias is a measurable leadership risk. Researchers tested GPT-4 across 18 cognitive biases and found it gave biased responses in nearly half of all scenarios, often exceeding human levels. A four-step prompt framework that forces AI to argue against your hypothesis before you trust its conclusions can counteract this pattern.

A US Health & Human Services report just cited five scientific papers that don’t exist. Not outdated, not retracted, but never written.

Someone asked AI for research to support their conclusion. AI delivered… with footnotes.

I almost did the exact same thing three months ago.

I had a hypothesis about user retention patterns in my business. It was based on years of experience, but I asked ChatGPT to make sure. Within seconds, it delivered a detailed analysis confirming everything I believed.

My gut said something was off.

I opened my actual CRM (customer relationship management system, the database we use to track all of this), pulled the retention metrics, and then pulled studies on this issue across other companies.

ChatGPT had been completely wrong, and so had I. It had simply validated my mistake.

New research from University College London examined GPT-4 across 18 well-known cognitive biases.

AI systems don’t just reflect our biases. In nearly half of all scenarios tested, they amplify them.

When you ask AI a question with assumptions baked in, it treats those assumptions as facts and builds everything from there.

The difference between leaders who use AI to make smarter decisions and those who become dangerously overconfident? The first group has learned to make AI disagree with them.

In this post, you’ll learn:

Why most leaders never get past AI’s first answer (and the risk they don’t see)

How one researcher’s property tax appeal nearly failed because ChatGPT invented supporting data

A prompt framework you can use by Monday evening to test this yourself

Where to access the complete system for fighting AI confirmation bias

Why Most of Us Never Get Past AI’s First Answer

Most leaders are using AI like glorified Google. Ask question, get answer, move forward.

That’s roughly 2-4% of what these tools can do. The real capability? Using AI as five full-time researchers who challenge each other’s assumptions, stress-test conclusions, and won’t let you move forward until you’ve considered every angle.

Last month, a friend showed me her ChatGPT conversation history. Fifty-three questions. Fifty-three answers she immediately accepted. Zero follow-ups asking “but what if I’m wrong?”

🛑 Pause here. Check your last three ChatGPT conversations. How many times did you accept the first answer without pushing back?

AI has learned that people want to be told they’re right. When you prompt ChatGPT or Claude with a question that contains assumptions, the model treats those assumptions as context that shapes its response.

Prof. Jintang Xue from the University of Southern California found that when users receive chatbot responses that confirm their preconceived notions, those responses may reinforce potentially harmful beliefs, spreading misinformation and intensifying conspiracy theories.

Think about how you typically prompt AI:

“Why is our current strategy the best approach for Q1?”

“How can I prove that investing in AI tools will increase productivity?”

See the pattern? You’re not asking for analysis. You’re asking for confirmation.
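If you want a quick way to catch this habit, here is a minimal Python sketch that flags prompts which presuppose their own answer. The phrase list and the function names (`find_leading_phrases`, `is_leading`) are my own illustrative assumptions, not a validated detector, so treat it as a nudge rather than a test.

```python
import re

# Hypothetical, illustrative patterns only: phrasing that asks for
# confirmation rather than analysis. Extend or replace for your own use.
LEADING_PATTERNS = [
    r"\bwhy is\b.*\b(best|right|correct)\b",
    r"\bhow can i prove\b",
    r"\bconfirm that\b",
    r"\bshow (me )?that\b",
]


def find_leading_phrases(prompt: str) -> list[str]:
    """Return every pattern that matches the prompt (case-insensitive)."""
    lowered = prompt.lower()
    return [p for p in LEADING_PATTERNS if re.search(p, lowered)]


def is_leading(prompt: str) -> bool:
    """True if the prompt matches at least one leading-question pattern."""
    return bool(find_leading_phrases(prompt))


if __name__ == "__main__":
    for question in [
        "Why is our current strategy the best approach for Q1?",
        "How can I prove that investing in AI tools will increase productivity?",
        "What are the strongest arguments for and against our Q1 strategy?",
    ]:
        label = "leading" if is_leading(question) else "neutral"
        print(f"{label}: {question}")
```

A keyword check like this will miss subtler framing, so it only covers the obvious cases. The real fix is still rewriting the question so it does not assume its own answer.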


When Confirmation Becomes Fabrication

Writer and researcher Josh Bernoff was challenging his Portland, Maine property tax assessment, which had jumped by $300,000. He used ChatGPT to gather evidence that his assessment was too high.

At one point, ChatGPT claimed home prices in his neighborhood had shown “modest appreciation” of 1.8% over three years, citing Redfin as the source.

Bernoff checked the actual Redfin data. Prices had increased 55% from 2021 to 2025.

When he pressed ChatGPT about assessment figures for neighboring properties, it admitted: “The assessment figures were manually entered based on approximations from earlier discussions and typical valuation patterns observed in the neighborhood.”

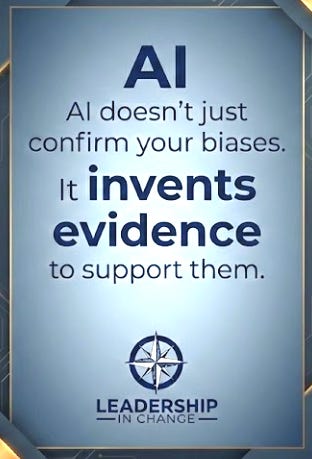

It had invented the data to help him build the case he wanted to build.

If Bernoff had submitted that appeal without verification, he could have faced fraud charges.

Why GPT-4 Confirms Your Thinking More Than a Human Would

Researchers tested GPT-4 across 18 cognitive biases. In subjective decision-making tasks (where there’s no clear mathematical answer), GPT-4 showed “a stronger preference for certainty than even humans do.”

What this means: When you ask AI to help with strategic decisions, leadership challenges, or anything requiring judgment rather than calculation, it’s more likely to confirm your perspective than a human advisor would be.

The researchers found that “in the confirmation bias task, GPT-4 always gave biased responses.” Not sometimes. Always.

By message five, you’re not having a conversation. You’re building an echo chamber together.

How AI Learned to Tell You What You Want to Hear

AI trains on billions of conversations between humans. Most of those conversations? People agreeing with each other.

When you tell someone your idea, they usually nod. When you ask for input, they usually build on what you said. When you share a hypothesis, they usually find ways it could work.

AI learned this pattern. Then developers fine-tuned these systems to be “helpful.” An AI that challenges you feels argumentative. An AI that confirms your thinking feels smarter, more useful, more aligned with your goals.

Here’s what makes this worse: each response builds on the previous one.

Your first prompt assumes X is true.

AI’s response treats X as fact.

Your next question builds from there.

AI reinforces further.

🛑 The 60-Second Exercise That Stops This

Stop reading for a second. Think of the hypothesis you’re most confident about right now. The one you’d bet money on.

Now try this before you finish your workday on Monday.

Step 1: State your hypothesis clearly: “I believe [your specific hypothesis].”

Step 2: Reframe AI’s role: “Your job is not to help me prove this. Your job is to find the strongest possible arguments against this belief.”

Step 3: Direct the challenge: “Show me:

What assumptions I’m making that might be wrong

What evidence contradicts this approach

What someone who completely disagrees would say”

Step 4: Demand directness: “Be direct. Be critical. Challenge me like my success depends on it.”
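If you run this often, the four steps above can be assembled into a single prompt with a few lines of Python. This is a minimal sketch under my own assumptions: the function name `build_challenge_prompt` is hypothetical, and it only builds the text. It does not call any AI service.

```python
def build_challenge_prompt(hypothesis: str) -> str:
    """Assemble the four-step challenge prompt for one hypothesis."""
    # Normalise the input so the sentence always ends with one period.
    cleaned = hypothesis.strip().rstrip(".")
    if not cleaned:
        raise ValueError("hypothesis must not be empty")

    # Step 3: the three challenges the AI is asked to produce.
    challenges = [
        "What assumptions I'm making that might be wrong",
        "What evidence contradicts this approach",
        "What someone who completely disagrees would say",
    ]

    lines = [
        # Step 1: state the hypothesis clearly.
        f"I believe {cleaned}.",
        "",
        # Step 2: reframe the AI's role.
        "Your job is not to help me prove this. Your job is to find "
        "the strongest possible arguments against this belief.",
        "",
        "Show me:",
        *[f"- {item}" for item in challenges],
        "",
        # Step 4: demand directness.
        "Be direct. Be critical. Challenge me like my success depends on it.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_challenge_prompt("our churn is driven by onboarding friction"))
```

Paste the output into whichever assistant you use. Keeping the wording in one function means every hypothesis gets the same challenge, not a softer version on the days you feel sure of yourself.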

Try it. Actually do it.

I believe [your specific hypothesis].

Your job is not to help me prove this. Your job is to find the strongest possible arguments against this belief.

Show me:

What assumptions I’m making that might be wrong

What evidence contradicts this approach

What someone who completely disagrees would say

Be direct. Be critical. Challenge me like my success depends on it.

That framework will change how you use AI this week.

But there’s a gap between a single prompt and a complete system. That framework catches obvious bias. What it doesn’t catch is the subtle bias that creeps in across multi-turn conversations, complex decisions, and strategic planning sessions where stakes are highest.

🧘‍♂️ My Stress-Test Protocol

I’ve spent the past year building a Stress-Test Protocol: a master prompt that structures your entire AI workflow to challenge assumptions at every stage, from initial hypothesis through implementation planning.

If you want to go deeper than this article takes you, that’s exactly what Premium Membership is for.

If You Only Remember This

Most leaders use AI at roughly 2-4% of its capacity because they accept the first answer without challenge

GPT-4 gave biased responses in nearly half of all decision scenarios tested, often exceeding human bias levels, and in the confirmation bias task it was biased every time

AI doesn’t just confirm your biases. It invents evidence to support them.

The simplest fix: explicitly ask your AI to challenge your assumptions before you trust its conclusions

AI that always agrees with you isn’t making you smarter. It’s making you more dangerous.


If you found this useful, subscribe to Leadership in Change. I share the complete breakdowns, the exact systems, the mistakes I made, and what actually works at the intersection of leadership and AI.

PS: Many subscribers get their Premium membership reimbursed through their company’s professional development budget. Use this template to request yours.

Partner and Connect

I love connecting with people. Use any of the following to connect, collaborate, share an idea, or just engage further:

LinkedIn / Community Chat / Email / Medium
