We Tested 8 AI Humanizers for 20 Hours...Most Failed, So We Built Our Own

What 20 hours of testing revealed about the gap between AI detection and human readability


Disclaimer: This article is based on independent testing of a selection of AI text “humanization” tools representing different categories, price points, and technical approaches. Tool names have been anonymized and no verbatim outputs are shown. Results reflect our experiences under the specific test conditions described and may vary for other users, use cases, or tool settings.

Any examples, descriptions, or performance assessments are provided for informational purposes only and should not be taken as endorsements or rejections of any specific product. We do not encourage or condone using AI or AI-modified text in violation of academic, professional, or contractual requirements.

Most content creators we know spend almost as much time editing AI-generated drafts as they do writing from scratch.

The frustration is real. You get a piece back from ChatGPT or Claude that sounds robotic, stuffed with phrases like “delve into” and riddled with those telltale em-dashes. You edit it once, twice, sometimes three times, and it still doesn't sound like you wrote it.

So when AI humanization tools started popping up everywhere, promising to solve this exact problem, we got curious. Rather than just try them randomly, we decided to put them through a systematic test.

I partnered with Karen Spinner, creator of Wondering About AI, for this research. Karen brings serious research chops to AI content analysis, and together we decided to answer the question that's been bugging creators everywhere:

Do these humanization tools actually work?

After 20 hours of testing, the answer surprised us both. Most did not meet our expectations. So we decided to build our own… more on that later (plus your free access link!)

73% of business leaders report spending more time editing AI content than expected, according to Salesforce's 2024 AI Research.

We wanted to find out if the tools promising to fix this were worth the time and money creators are spending on them. But before we start, check out Karen Spinner’s work!

Why We Decided to Test This Systematically

Since ChatGPT first launched in 2022, writers have been editing AI-generated copy and experimenting with different strategies for making its outputs sound more human. Over time, three main approaches have emerged:

1. Iterative prompting with your favorite LLM. This is just like it sounds. You feed your LLM (Large Language Model, think ChatGPT, Claude, or similar AI writing tools) the copy you'd like humanized plus a detailed prompt. Then you iterate through conversations until you get a draft that sounds more like you wrote it naturally.

2. A GPT or Claude project with a style guide and/or human writing samples. Creating a GPT (a custom version of ChatGPT) or Claude project can save you time by giving the LLM a knowledge base to draw upon. In theory, if you share a draft with a little context, your LLM will know what to do. Again, some iteration will be required, but the setup makes the process faster.

3. Commercial humanizers. Humanizer software services incorporate AI into structured automations designed to paraphrase AI-generated text, sometimes enabling it to avoid detection by tools like Turnitin and GPTZero. Often, they rely on smaller Natural Language Processing (NLP) models tuned for a single purpose rather than a more broadly focused LLM.

Given these three distinct approaches, we wanted to test representatives from each category to see which actually delivered on their promises.

Note from Karen: I’ve also seen brands use vector databases to provide AI with a vast number of writing samples. We didn’t test this approach due to the complexity involved.

Understanding the Challenge: What Makes Humanizing AI Copy Complex

Before we could design our test, we needed to understand some key technical considerations that affect how these tools work.

The Non-Deterministic Problem: LLMs are non-deterministic, which means they almost never give you exactly the same result twice, even with identical inputs. This means that you can run a tool once and get a good result, then run it again with the same content and get a slightly different result. This is why LLMs and GPTs typically require one or two rounds of iteration to fully humanize a draft.
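To make the idea concrete, here is a minimal toy sketch of why sampling produces different text on each run. It is not how any specific product is implemented. The word scores, the `temperature` value, and the function name `sample_next_word` are all assumptions made up for illustration.

```python
import math
import random

def sample_next_word(scores, temperature, rng):
    """Pick one word from a {word: score} dict using softmax sampling."""
    if temperature == 0:
        # Greedy choice: always take the highest-scoring word.
        return max(scores, key=scores.get)
    # Softmax with temperature: a higher temperature flattens the distribution.
    weights = [math.exp(s / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]

# Hypothetical scores for the word that follows "Leash training is ..."
scores = {"essential": 2.0, "hard": 1.6, "a skill": 1.5, "fun": 0.4}

rng = random.Random()  # unseeded, so repeated runs can differ
sampled = [sample_next_word(scores, 0.8, rng) for _ in range(5)]
greedy = [sample_next_word(scores, 0, rng) for _ in range(5)]
print(sampled)  # varies from run to run
print(greedy)   # the same top-scoring word every time
```

With a temperature above zero, lower-scoring words still get picked some of the time, which is one reason two runs of the same prompt rarely match word for word.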

Commercial vs. LLM-Based Tools: Commercial humanizers, which offer rules-based automation powered by NLP models, are more deterministic than interactive chatbots. You may see a very small amount of variance between responses, but they are much more consistent, which may be good or bad depending on the quality of the initial response.
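As a rough illustration of why a rules-based pass is repeatable, here is a toy sketch. Commercial tools do not publish their rule sets, so the `RULES` table below is purely hypothetical; its entries are borrowed from the kinds of word swaps discussed elsewhere in this article.

```python
import re

# Hypothetical rules for illustration only; no real tool's rule set is shown here.
RULES = {
    "utilize": "use",
    "leverage": "use",
    "delve into": "look at",
    "crucial": "important",
}

def apply_rules(text):
    """Replace each listed phrase with a plainer alternative (case-insensitive)."""
    for phrase, plain in RULES.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        # Note: the replacement is always lowercase, so capitalization is not preserved.
        text = re.sub(pattern, plain, text, flags=re.IGNORECASE)
    return text

draft = "Owners should utilize treats and delve into crate training."
first = apply_rules(draft)
second = apply_rules(draft)
print(first)
print(first == second)  # True: same input, same output
```

Because nothing here is sampled, running it twice on the same draft gives the same text. That consistency is helpful when the first result is good and unhelpful when it is not.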

Understanding this difference was necessary to design a fair test that accounted for the inherent variability in different types of tools.

What Does Successful Humanization Actually Look Like?

To evaluate these tools fairly, we first needed to define what "success" means. Most writers expect humanization tools to accomplish at least two of the following three objectives:

Remove AI writing patterns: AI models learn from the same massive datasets of online content, which include blogs, marketing sites, press releases, and social media posts. Because they work by predicting the next most likely word based on patterns they've seen, virtually every model has the same default way of writing, which is grounded in the most commonly used phrasing, structures, and clichés. These patterns include overuse of em-dashes, phrases like "delve into" or "leverage," and overly formal sentence structures.

A humanizing tool should, first and foremost, remove these AI writing patterns from copy drafts.

Maintain overall writing quality: In addition to removing AI writing patterns, a humanizer should not dramatically change the overall writing style (assuming it was acceptable except for the AI style quirks) or actually make the copy less readable. It should offer line edits rather than complete restructuring, preserving the original meaning and flow while making it sound more natural.

Bypass commercial AI detectors: A subset of writers look for humanizing tools that make their copy appear “human-written” when analyzed by AI detectors such as GPTZero or Turnitin. In some cases, this may include individuals attempting to submit AI-generated work in contexts where such use is prohibited, something we do not recommend.

While these applications occupy an ethical gray zone, and both zero-AI policies and the use of imperfect detection tools are debated, this capability continues to drive demand for humanizing tools. We included it in our evaluation solely to provide a complete picture of performance across common user priorities.

One more time with feeling: We do not endorse using such tools in ways that violate academic, professional, or contractual rules.

Designing Our Test

A Fair Comparison Framework

To get a better handle on how well AI humanizers work, we designed a test that looks at how copy performs for human readers and its success at evading AI detection. Here's how we structured our approach:

Sample Size and Consistency: We ran a typical AI-generated copy draft through each humanizer at least once. We gave commercial humanizers, which are more deterministic, one shot each. Prompt strategies with LLMs and LLM-powered GPTs, which are less deterministic, received two chances so we could account for variability.

Scoring Framework: Each humanized draft received these scores:

The number of AI writing patterns still remaining (Best score = 0)

A subjective human-writing score from us (Best score = 100)

A subjective writing quality score from us (Best score = 100)

A calculated human-writing score from a popular AI detector that allows free access (Best score = 100)

For prompt strategies and GPTs, we scored the best of two outputs to account for the non-deterministic nature of these tools.
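The pattern count and the best-of-two rule can be sketched in a few lines. This is a simplified illustration, not the procedure used in the test: the `PHRASES` list is an assumed subset of the tells named in this article, and picking the "best" output by lowest pattern count is also an assumption. Constructions like "it's not this, it's that" need human judgment and are not covered.

```python
import re

# Assumed pattern list, drawn from tells named in this article.
PHRASES = ["delve into", "dive into", "leverage", "crucial", "landscape",
           "here's the thing", "here's the kicker"]

EM_DASH = "\u2014"

def count_ai_patterns(text):
    """Count em-dashes plus listed phrases still present (best score = 0)."""
    # Normalize case and curly apostrophes before matching.
    lowered = text.lower().replace("\u2019", "'")
    count = lowered.count(EM_DASH)
    for phrase in PHRASES:
        count += len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
    return count

def best_of(outputs):
    """Return the output with the fewest remaining patterns (assumed criterion)."""
    return min(outputs, key=count_ai_patterns)

run_a = "Leash training is crucial" + EM_DASH + "and it takes time."
run_b = "Leash training takes practice. Start slow."
print(count_ai_patterns(run_a), count_ai_patterns(run_b))
print(best_of([run_a, run_b]))
```

A counter like this only catches surface tells, so it works best as a first pass before a human read-through.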

Choosing the Right Tools to Test

We wanted to test all three strategies for humanizing AI-generated text comprehensively. This meant developing our own prompting strategies, selecting popular commercial options, and testing the most highly-rated custom solutions.

Our Testing Arsenal:

Four distinct prompting approaches to use with Claude Sonnet 4

Two popular offerings from the GPT store (powered by OpenAI models)

Two commercial humanizers representing different price points and approaches

This selection gave us a representative sample across all three major categories of humanization tools.

Creating Our Test Content: The Perfect AI Writing Sample

We asked ChatGPT to write a 250-word introduction to a blog post on teaching a puppy to walk on a leash. We kept the prompt high-level, asking only for a third-person POV, specifically to encourage the production of a draft full of em-dashes and AI style quirks.

We weren't disappointed. The result was a textbook example of AI writing patterns:

Bringing home a new puppy is an exciting milestone, but it also comes with a steep learning curve—for both the puppy and the owner. One of the first big challenges is leash training. While it might seem like a natural behavior, walking politely on leash is actually a skill that must be taught with care and consistency. Puppies are curious, easily distracted, and often unsure of what’s expected of them. Without training, even a short walk can turn into a frustrating tug-of-war.

Teaching a puppy to walk on leash is about more than obedience—it’s about building trust, setting boundaries, and establishing a routine that helps the dog feel safe and confident. Early leash training lays the groundwork for a lifetime of positive experiences in the world beyond the backyard. Whether the goal is a quiet stroll around the block or an adventurous hike, every dog needs to learn how to walk calmly beside their person.

In this post, we’ll cover the basics of leash training a puppy, from choosing the right gear to setting realistic expectations for early outings. We’ll walk through common stumbling blocks—like pulling, sitting down mid-walk, or getting distracted by every leaf—and offer practical tips for overcoming them. With patience and positive reinforcement, even the most energetic pup can learn to walk on a loose leash.

The early days of training require time and repetition, but the payoff is well worth it. A well-trained puppy becomes a confident walker—and a more enjoyable companion for all those future walks.

This sample contained multiple em-dashes, formal phrasing, predictable structure, and several classic AI vocabulary choices. When we ran it through the commercial AI detector, we got the expected result: "We are highly confident this was AI-generated."

This gave us the perfect baseline to test how well each humanization approach could transform obviously artificial content into something that sounds naturally human-written.

Initial Findings: What We Discovered Right Away

After testing various prompting strategies with Claude, custom GPTs, and commercial humanizer tools, we discovered several key insights that surprised us:

AI detectors can be bypassed, but results vary dramatically. Because AI outputs are non-deterministic, your results may vary significantly, especially with GPTs and prompting strategies. If bypassing AI detection is a priority, first ask yourself if what you’re planning is truly legal and ethical. Then, if you must, consider commercial tools that offer money-back guarantees, or subscribe to the specific AI detector you need to pass and test your copy before submission.

Passing AI detection ≠ quality writing. This was perhaps our most important finding. In our testing, the ability to register as “human-written” in an AI detection scan did not necessarily align with high-quality writing. One sample that received zero AI detection flags, for example, had a formal and somewhat inaccessible style that, in our view, might not engage many readers. By contrast, we felt the prompting strategies produced the most readable and engaging outputs for human audiences in our specific test conditions.

Simple can be better: Including a human writing sample helps LLMs perform slightly better, but shorter prompts may perform just as well as longer, more detailed prompts. This contradicted our initial hypothesis that more detailed instructions would produce better results.

Of course, as writers ourselves, we believe you should strive to write copy that sounds good to human readers, not detection bots, and be transparent about how you use AI in your writing process.

Deep Dive: The Prompting Strategies That Actually Work

We developed four distinct prompting approaches to test with Claude Sonnet 4, ranging from simple to complex, and with and without writing samples. Here's what we discovered:

1. The Short Prompt Approach

We started with the simplest possible approach:

Edit this copy so it sounds like a human wrote it, not an LLM. This means no em-dashes, no "it's not this, it's that" constructions, no questions-as-transitions ("The soda? It is fishy."), no "here's the thing"/"here's the kicker"/"the thing is." Avoid these words and phrases: landscape, dive into, delve into, dig into, explore, and crucial.

Short prompt humanized result:

Bringing home a new puppy is a joyful step forward, but it also kicks off a stretch of trial and error—for both the dog and the person holding the leash. One of the first real hurdles is leash training. It doesn’t come naturally. Walking calmly beside you is a learned behavior, not an instinct. Puppies are full of curiosity, easily distracted, and still figuring out what you expect from them. Without some guidance, a walk can quickly turn into a stressful, stop-and-go struggle.

Leash training isn’t just about following commands. It’s about building a rhythm with your dog, setting healthy limits, and creating a routine that helps them feel grounded and secure. Those first few lessons matter. They help shape the way your dog relates to the world outside your home—whether it’s a slow stroll through the neighborhood or a hike on a wooded trail. Every dog needs to learn how to walk with you, not against you.

This guide breaks down the basics. We’ll go over how to choose the right gear, what to expect during those early walks, and how to deal with common hiccups—like pulling, stopping in the middle of the sidewalk, or getting distracted by every falling leaf. You’ll get simple tips that actually work and make daily walks something you both look forward to.

It takes time and repetition, especially in the early days. But that effort builds something worthwhile. A dog who walks well on a leash is more than just well-behaved—they’re more confident, more connected to you, and a whole lot more fun to bring along.

Results Analysis: In our judgement, the short prompt worked to make the copy a bit more readable, but still left multiple AI writing tells, such as em-dashes and “it’s not just this, it’s that” constructions. However, while it was clear to us that this passage had been generated by AI, it did earn a 100% human score from the AI detector.

2. The Detailed Instruction Approach

Next, we tried a much more comprehensive approach:

Edit this copy to sound authentically human-written, not AI-generated. Apply these specific transformations:

Remove AI vocabulary patterns:

Replace: delve/dive/dig into, explore, utilize, leverage, harness, unlock, unravel, foster, elevate, comprehensive, robust, pivotal, multifaceted, nuanced, transformative, landscape, tapestry, symphony, crucial

With: simpler, everyday alternatives (use, check out, look at, important, etc.)

Eliminate formal AI sentence starters:

Remove: "In today's rapidly evolving," "It's important to note," "When it comes to," "That being said," "One should consider"

Replace with natural conversation starters or just start directly

Simplify transitions:

Change: Therefore, however, nevertheless, furthermore, moreover, subsequently

To: So, but, still, also, then, next

Avoid these specific constructions:

No em-dashes (—)

No "it's not this, it's that" patterns

No questions-as-transitions ("The problem? It's complex.")

No overused phrases: "here's the thing," "here's the kicker," "the thing is"

Add human naturalness:

Use contractions (don't, can't, won't, it's, you're)

Vary sentence lengths naturally

Include some sentence fragments for emphasis

Make it sound conversational, like explaining to a friend

Remove overly perfect grammar where casual tone fits better

Keep the core message and information intact while making it sound like a real person wrote it naturally.

Long prompt humanized result:

Bringing home a new puppy is a big deal. It’s exciting, full of firsts, and—let’s be honest—a little overwhelming. One of the first real hurdles? Leash training. It might seem simple, but walking nicely on a leash is something puppies have to learn. They’re curious, easily distracted, and usually have no idea what’s expected of them. Without a little help, even a quick walk can feel like a tug-of-war.

But leash training isn’t just about obedience. It’s about building trust, creating clear boundaries, and setting up a routine that helps your puppy feel safe and confident out in the world. Those early walks lay the foundation for all the adventures to come—whether it’s a lap around the block or a full-on weekend hike.

This guide covers the basics: picking the right leash and collar, knowing what to expect in those first outings, and handling the common stuff that can trip people up. Like when your puppy suddenly plants their butt mid-walk. Or chases every leaf. Or pulls like they’re auditioning for a sled team. We’ll walk through all of it, step by step, and share tips that actually work.

It takes time and repetition, no way around that. But the payoff is worth it. A well-trained pup becomes a confident walker—and makes every future stroll a whole lot more enjoyable for both of you.

Results Analysis: Interestingly, the more detailed instructions didn't necessarily produce better results. While it reduced AI patterns to 5 (compared to 6 for the short prompt), it ironically underperformed with the AI detector (only 2% human score) despite sounding more natural to us. This taught us that more instructions can sometimes overcomplicate the process.

Adding a Human Voice: The Writing Sample Experiment

We also tested both approaches with a human writing sample to see if providing a style reference would improve results. We used this sample:

I enjoy taking long walks on the beach in the mornings! It is a fun way for me to unplug from tech, notifications, and all the chores calling my name at home and just focus on nature and a good audiobook. Well, I'll admit that I do check my phone a few times because it is there, in my pocket, available, while playing my audiobook! I know I shouldn't and I try not to, but I sometimes let it disrupt my peaceful walk. Regardless, those beach walks are definitely something that allows me to launch into my busy day refreshed, focused, and open-minded.
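For anyone who wants to script this step, here is one way the instructions, the writing sample, and the draft might be combined into a single prompt. The article does not say exactly how the sample was attached, so the function name `build_humanize_prompt` and the wording "Match the voice of this writing sample" are assumptions for illustration.

```python
def build_humanize_prompt(instructions, draft, writing_sample=None):
    """Join instructions, an optional style sample, and the draft into one prompt."""
    parts = [instructions.strip()]
    if writing_sample:
        # Hypothetical wording; adjust to suit your own workflow.
        parts.append("Match the voice of this writing sample:\n" + writing_sample.strip())
    parts.append("Copy to edit:\n" + draft.strip())
    return "\n\n".join(parts)

instructions = "Edit this copy so it sounds like a human wrote it, not an LLM."
sample = "I enjoy taking long walks on the beach in the mornings!"
draft = "Bringing home a new puppy is an exciting milestone."

with_sample = build_humanize_prompt(instructions, draft, sample)
without_sample = build_humanize_prompt(instructions, draft)
print(with_sample)
```

Keeping the sample optional makes it easy to run the same draft both ways and compare the results side by side.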

3. Short prompt with sample humanized result:

Bringing home a new puppy is one of those unforgettable moments—equal parts joy, chaos, and “what now?” Everything is new, exciting, and just a little overwhelming, especially when it comes to walks. Most folks figure a puppy will just follow along. But the truth is, walking on a leash is a skill. And like anything new, it takes practice.

Puppies are curious. They zigzag, stop to sniff every leaf, and sometimes sit down and refuse to move at all. Without some gentle training, what should be a nice walk can quickly turn into a messy back-and-forth with both sides getting frustrated.

But leash training is about more than getting from point A to point B. It’s about building trust and teaching your dog what to expect from the world outside your home. These early walks help your pup feel safe, teach them what’s okay and what’s not, and create a rhythm they’ll carry into adulthood.

You don’t need to be perfect. You just need to be patient. Choose the right gear, keep your expectations realistic, and go slow. Some days will feel like progress. Others, not so much. That’s normal.

In this post, you’ll get simple tips that work: how to handle pulling, what to do when your pup sits down mid-walk, and how to stay calm when they get distracted for the fiftieth time. The good news? Every pup can learn to walk well—with enough time and a pocket full of treats.

Stick with it. These early steps may take effort, but they pay off. A confident walker makes for a more relaxed, connected, and enjoyable companion—on every walk to come.

Results Analysis: The writing sample definitely helped. This approach produced our highest overall writing quality score (85%) while maintaining excellent AI detection performance (94% human). The voice felt more authentic and personal, demonstrating that giving AI a style reference significantly improves output quality.

4. Long prompt with sample humanized result:

Bringing home a new puppy is a big, joyful moment. But let’s be honest—it also comes with a ton of new challenges. One of the first? Leash training. It might seem like something dogs should just know how to do, but walking calmly on a leash is actually something they have to learn. And for puppies, everything is new and exciting. Leaves blowing across the sidewalk. That weird crack in the pavement. A distant squirrel. It doesn’t take much to throw them off course.

Teaching your pup to walk nicely beside you isn’t just about getting them to behave. It’s about helping them feel safe, building trust, and setting a rhythm you can both count on. Those early walks lay the foundation for all the adventures to come—whether you’re heading around the block or hiking through the woods someday.

In this post, we’ll cover the basics of getting started: what gear you’ll need, how to set realistic expectations, and what to do when your puppy decides to sit down mid-walk and absolutely refuse to budge. We’ll also look at common stuff like pulling or getting distracted by, well, everything—and share some practical ways to handle it.

The first few weeks take time, repetition, and lots of patience. But it’s worth it. Once your puppy figures it out, walks become easier, calmer, and a whole lot more fun for both of you.

Results Analysis: Again, the more detailed instructions didn't necessarily produce better results. The AI detector once more reported that it was “highly confident” the text was AI-generated (only a 2% human score), which reinforced our earlier observation that more instructions can sometimes overcomplicate the process.

Key Takeaway from Prompting Tests: The prompting strategies, while they did not succeed in removing all AI-writing artifacts, did produce what we believe is the highest quality writing out of all the humanized samples. Most importantly, they showed that simple approaches often work better than complex ones, and that providing a human writing sample significantly improves results.

Our Verdict: If quality output is your goal, we suggest iterative prompting with your favorite LLM, preferably with a writing sample, followed by a thorough human edit.

Testing the Popular GPT Solutions

Next, we moved on to testing custom GPTs from OpenAI's marketplace. We picked two popular ones, each with thousands or millions of chats completed.

5. Humanizer GPT A

We had high hopes for this GPT. It has a solidly good (better than 4 out of 5) rating and millions of conversations under its belt. But, in our opinion, its performance overall was underwhelming.

Technical Issues: First, it requires logging into another site, so the tool can access what is likely a fine-tuned model. This wouldn't be a problem, except the connection to the tool was very unreliable during our testing. For us, it failed more often than it worked, which in our opinion could raise concerns about practical usability for creators on deadlines.

Quality Issues: When it did run, it failed to remove all of the characteristic AI writing patterns, and it added errors and awkward phrasing that made the overall writing quality worse. The output contained grammatical errors like "gentle, consistencies" that no human editor would overlook.

AI Detection Performance: It also did not completely bypass the AI detector, which flagged the humanized copy as 51% AI and possibly "including paraphrasing."

Our Verdict: This GPT was not an improvement over prompting an LLM to humanize your copy.

6. Humanizer GPT B

The second GPT we tested offered a similar experience with comparable results, but with one major exception.

Quality vs. Detection Trade-off: Like the first GPT, the humanized copy still contained multiple signs of AI writing as well as very awkward passages like "throughout your dog's existence" that, in our opinion, no serious human editor would let pass. And yet, despite all this, it received a 100% human score from the AI detector.

This highlighted an important insight: bypassing AI detectors doesn't equal quality human writing.

Commercial Humanizer Tools: The Promise vs. Reality

Finally, we tested commercial humanization services. We selected two commonly used humanizers representing different price points: a free tool and a premium offering.

7. Free Humanizer: Minimal Impact in Our Tests

In our testing, the free humanizer we evaluated made only subtle edits. At first glance, it was difficult to distinguish the humanized version from the original.

Minimal Changes: The tool applied what we considered cosmetic adjustments, such as replacing “exciting milestone” with “exciting adventure” and adding casual phrases like “trust me” at the end. In our view, most AI-style patterns remained, and the overall formal structure of the original text was largely unchanged.

Detection Results: When we ran the output through the AI detector used in our comparison, it registered as 100% AI-generated, suggesting that these changes were not sufficient, at least in this instance, to pass even a basic detection test.

Our Verdict: In our assessment, this tool did not significantly improve humanization or overall readability under our test conditions.

8. Premium Humanizer: Effective but With Trade-offs

One premium tool we tested offered only a limited free trial, requiring payment details to process even short text samples.

Technical Success: In our tests, this tool removed all of the AI-writing patterns we were tracking, making it the only option in our set to achieve a perfect score in that category. An AI detector we used for comparison also rated the result as largely human-written, with a 73% “human” score.

The Trade-off: The output, however, was significantly restructured. It adopted a formal, academic tone that, in our view, made it less accessible and engaging than the original. For example, natural conversational flow was replaced with phrasing such as “The arrival of a new puppy brings immense joy yet it demands significant learning.”

Our Verdict: Based on our experience, this type of tool may appeal to users focused primarily on passing AI detection checks. Those seeking more reader-friendly, conversational writing may find it less effective for their goals.

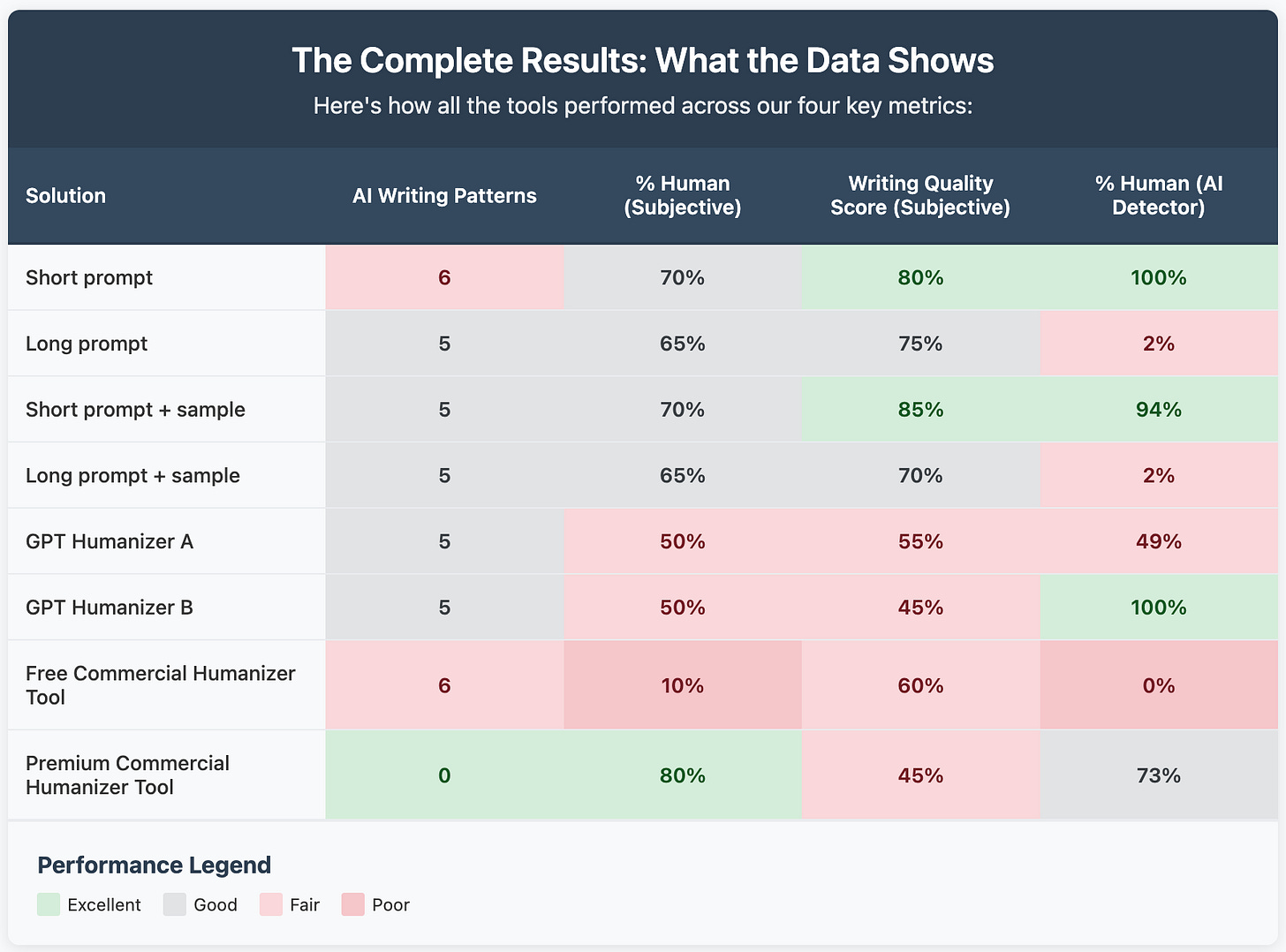

The Complete Results

Looking at this data, several clear patterns emerge that challenge common assumptions about AI humanization…

What This All Means: Three Key Discoveries That Will Change How You Think About AI Humanization

After analyzing all our results, three major insights emerged that fundamentally challenge how most people approach AI humanization:

1. AI detectors can be bypassed, but that doesn't guarantee quality writing.

This was perhaps our most important discovery. The tools that scored highest on "human detection" often produced the most awkward, formal writing that real humans wouldn't want to read.

This reveals a fundamental problem with prioritizing AI detection over human readability. The scores that AI detectors produce don't necessarily correlate with what makes writing engaging for actual human readers.

2. Simple prompting strategies often outperform expensive tools.

This finding surprised us. Our basic short prompt with Claude consistently produced better overall writing quality than most commercial solutions, and it cost nothing beyond our existing Claude subscription.

The implications are significant: creators can often achieve better results with strategic prompting than by paying for commercial humanization services.

3. There's no magic bullet.

Every approach we tested still required human editing and judgment. Even the best-performing methods left some AI patterns intact, and several introduced new problems like grammatical errors or awkward phrasing that weren't in the original.

This means the goal should be reducing editing time, not eliminating the need for human oversight entirely. The most successful approach combines smart tool usage with skilled human editing to catch what the tools miss.

Most importantly, we realized that focusing on what AI detectors think misses the point entirely. The real question isn't "Will this fool a bot?" but "Will this connect with our human readers?"

As content creators, our priority should be writing that serves our audience, not gaming detection algorithms. The best humanization tool should make your content more engaging and authentic, not just harder for algorithms to detect.

A few notes about GPT-5

According to OpenAI’s help center, GPTs like the ones we wrote about above will still be able to use legacy models, including 4o, through mid-October. At that point, they will be migrated to GPT-5 or its thinking variant.

Because we heard from a few users that GPT-5 seems to be better at avoiding some AI writing patterns, particularly the em-dash, we tried it with the four prompting approaches described above. While the copy drafts it produced did include fewer em-dashes than those we generated with Claude, and their tone was plausibly human to our ears, the AI detector flagged all four drafts as being 100% written by AI.

The Critical Insight: Detection vs. Quality Are Different Goals

Throughout our testing, we observed a consistent pattern that reveals a fundamental flaw in how many creators approach AI humanization. Tools that excel at fooling AI detectors often produce worse content for human readers, while approaches that create genuinely engaging writing sometimes still trigger detection algorithms.

This disconnect exists because AI detectors and human readers evaluate content using completely different criteria:

AI Detectors look for:

Statistical patterns in word usage

Sentence structure consistency

Vocabulary distribution patterns

Predictable phrase combinations

Human Readers care about:

Clarity and readability

Engaging voice and tone

Logical flow and organization

Emotional connection and relevance

Understanding this difference is critical for creators who want to use AI tools effectively. If your primary goal is serving human readers (which it should be), then optimizing solely for AI detection is counterproductive.
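To give a feel for what the detector-side signals might look like in practice, here is a toy sketch of two simple text statistics: how much sentence length varies, and how varied the vocabulary is. Real detectors such as GPTZero and Turnitin are proprietary, so these functions are assumptions for illustration and do not reproduce any detector's scoring.

```python
import re
import statistics

def sentence_lengths(text):
    """Word counts for each sentence, splitting on ., !, and ?."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def length_spread(text):
    """Population standard deviation of sentence lengths, in words."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if lengths else 0.0

def type_token_ratio(text):
    """Distinct words divided by total words (0.0 for empty text)."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0

uniform = "The dog walks well. The dog sits down. The dog eats food."
varied = "Stop. The puppy pulled hard on the leash again today."

print(length_spread(uniform), length_spread(varied))
print(type_token_ratio(uniform))
```

Numbers like these describe regularity, not readability. A passage can vary its sentence lengths nicely and still be dull, which is the gap between the two lists above.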

We Built Our Own Solution: The Content Humanizer

After seeing how most existing tools fell short in different ways, we decided to build something better. We created a free Content Humanizer that combines the best insights from our 20-hour testing marathon.

Our Design Philosophy: Instead of trying to fool AI detectors or using overly complex instructions, our tool focuses on what actually matters: making your content sound like you wrote it naturally while maintaining high quality for human readers.

The Key Innovation: Based on our testing results, the most important feature we included is the ability to optionally provide a sample of your own writing, even just a few sentences. This helps the AI understand your voice and style, leading to much better humanized content that actually sounds like you.

Why It's Different: Our Content Humanizer is built on the principle that good humanization should enhance readability for real humans, not just beat detection algorithms. Every decision in our tool design prioritizes serving your actual audience over gaming automated systems.

The Bigger Picture: What This Means for Content Creation

Our research points to a fundamental shift in how creators should think about AI tools. Rather than looking for magic solutions that eliminate human involvement, the most successful approach combines AI capabilities with human creativity and judgment.

The Partnership Model: The best results come from treating AI as a collaborative partner that handles initial drafts and pattern recognition, while humans provide strategy, voice, editing, and final quality control.

If you found this research helpful, you're not alone. We regularly dive deep into AI tools and strategies for content creators, always with an emphasis on practical testing over marketing hype.

Karen Spinner’s newsletter, Wondering About AI, focuses on practical AI applications and honest tool reviews like this one. She brings rigorous analytical approaches to evaluating AI tools and separating genuine utility from marketing claims.

My work at Leadership in Change explores how creators and leaders can use AI strategically to maximize impact while maintaining authenticity. I focus on the intersection of AI tools, leadership communication, and building sustainable creative workflows.

Question for you: have you tried our Content Humanizer? If so, please let us know in the comments what you thought!

Let’s Connect

I love connecting with people. Please use the following to connect, collaborate, share an idea, or just engage further:

LinkedIn / Community Chat / Email / Medium


Great post, and kudos to both of you, Joel and Karen. Very thorough and well explained. I agree with your partnership model. You are responsible for your own content. How you get there, I am not really worried about. It will be interesting to see how the "ethics" of all this will shake out. Thank you for taking the time to do this and post it.

Nice post, you two! Your finding that simple, iterative prompting with a writing sample often outperforms expensive tools is both counterintuitive and practical. It suggests the real skill isn't finding a magic bullet, but in crafting a better, more collaborative process with the AI.