Understanding Privacy in the Age of AI: A Deep Dive

A practical look at data, inference, and the systems watching quietly (Guest)

Before we start: Ready to implement and build with AI? Join Premium for $1,345+ in tools and systems at $39/yr. Looking for 1-on-1 coaching or a private knowledge hub build? Book a free call.

I spend most of my time helping leaders use AI strategically. But there’s a conversation we don’t have often enough: what happens to the data we feed these systems?

A few weeks ago, I came across Mohib Ur Rehman’s work on SK NEXUS. He writes about technology with the kind of clarity most tech writers avoid because it requires actually understanding the systems you’re explaining.

I reached out to him because privacy in the age of AI isn’t just a technical problem; to me, more and more, it’s a leadership problem.

Most leaders I talk with don’t realize how much risk they’re accepting every time they paste something into an AI tool.

Now, it’s important to make a distinction here. I use AI probably 30 to 40 times per day for specific tasks, not even counting automated tasks. I do so with an understanding of the risks, and that understanding curbs some of what I would do if those risks weren’t there. That is the point I want to get across with this piece. It doesn’t mean don’t use AI at all. I’m one of the biggest proponents of the benefits of AI, but you’ve got to understand its risks.

Mohib breaks this down beautifully. By the end of this piece, you’ll have a clearer understanding of:

What privacy actually means in the age of AI

How safe your data really is and where it isn’t

The precautions people should be taking, and the ones they usually don’t

The risks that are already here, and the ones slowly forming on the horizon

If you lead a team that uses AI (which is probably all of you), read this carefully.

Here is Mohib Ur Rehman.

Recently, Joel Salinas read one of my pieces on privacy.

He liked it enough to reach out and suggest a collaboration.

And here I am.

I took the offer because it gave me the perfect excuse to finally sit down and explore a topic I’ve wanted to write about for a long time - privacy, but in the world we actually live in today.

We’re living in an era where using AI blindly, and being manipulated by systems that learn more about us than we realize, is the norm. Look around you: data is everywhere, and collection is the default.

And still…tons of folks don’t fully understand what’s happening in the background.

I wrote this post to ground you, because you can’t protect what you don’t understand.

Let’s get into it.

The Comfort Trap of Modern Tech

Modern technology trains you to relax.

AI tools feel helpful, almost invisible. They reduce effort, remove friction, and anticipate what you need. As a result, data slips in as a byproduct of convenience.

And this isn’t just an AI problem.

Humans are wired for convenience. We lean toward whatever feels easier and less demanding - in real life and online. Progress usually requires friction, and convenience removes it. That’s why we default to comfort, even when it works against us.

And digital systems exploit this perfectly. If something saves time, we automatically stop questioning it. We trade control for ease without realizing we’re making a trade at all.

I’ve explored this dynamic in more depth in a separate piece - if you want to go deeper on why we keep choosing the easy path, you can read it here:

With that baseline in mind, let’s get back to where we left off.

If you use AI regularly, you’ve probably noticed that:

You don’t feel monitored when you paste text into a model.

You don’t see anything leave when an app syncs in the background.

You don’t lose functionality or performance.

So you assume nothing shady is happening.

That assumption is dangerous.

Privacy loss looks like normal usage repeated over time:

Small actions add up → Metadata piles on → Patterns emerge → A detailed profile takes shape.

The damage is delayed, and by the time people start asking questions, the system already knows them better than they know it.

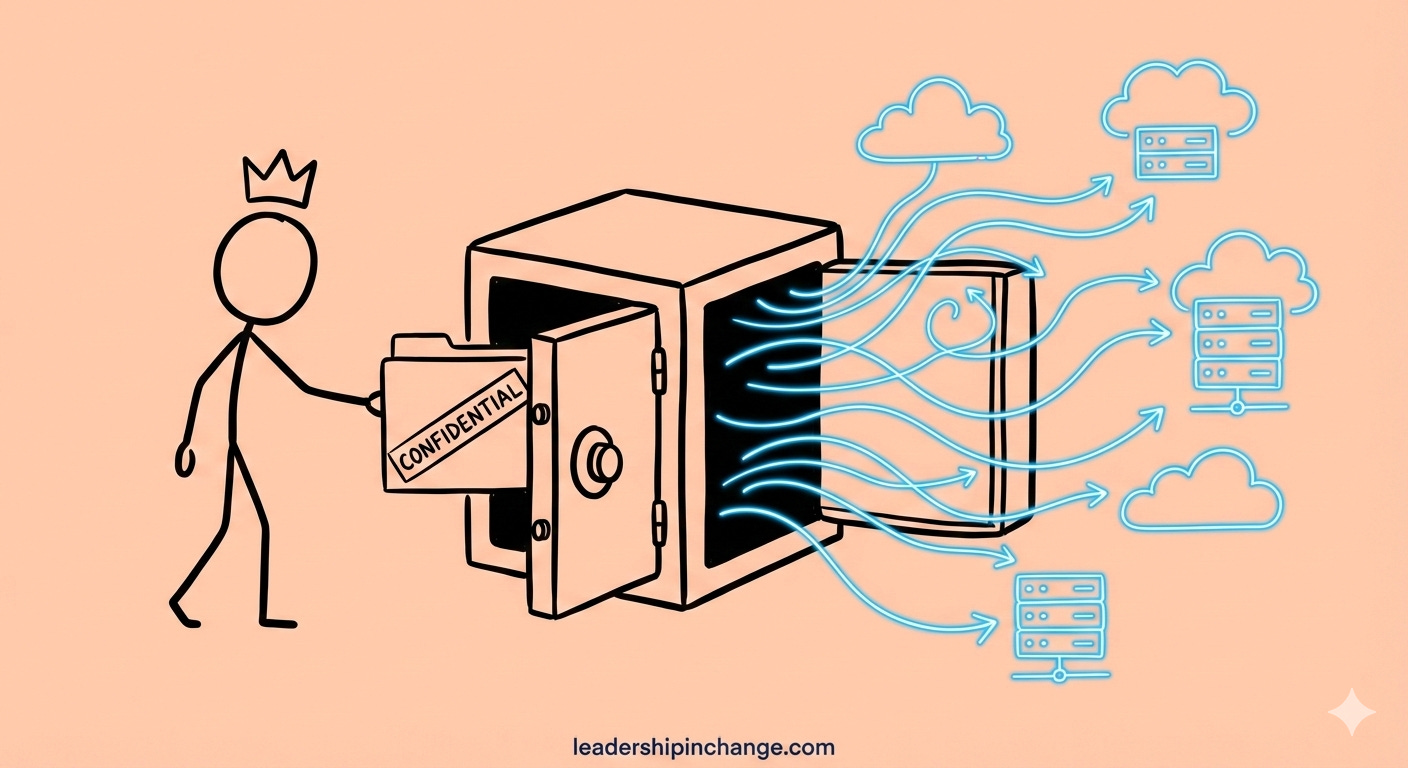

Why AI Changes What Safe Data Even Means

Traditional systems store data.

AI systems learn from it.

That distinction matters more than a lot of people realize.

In older models, data lived in databases. It could be encrypted, deleted, or access-controlled. If something went wrong, you could point to a file or a server.

But AI changes that.

When you upload data to a normal service, it sits somewhere. When you give data to an AI system, it can become part of how that system behaves. The data gets reused and generalized.

This creates three problems people underestimate:

Data doesn’t stay isolated.

Data doesn’t fully disappear.

Data can’t be un-learned. Once your data becomes part of a neural network’s weights, there’s nothing left to decrypt. You can’t take back what a system has already learned. At best, you can limit future exposure; there’s no changing the past.
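To make the store-versus-learn distinction concrete, here is a deliberately tiny Python sketch. It is not how any real AI product works; it assumes a hypothetical toy “model” whose only learned weight is the average of the values it was trained on. Deleting a record from the database is immediate, but the weight that record helped shape stays put until the model is retrained without it.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Hypothetical 'model' whose single learned weight is the mean of its training data."""
    weight: float = 0.0

    def train(self, values: list[float]) -> None:
        # The data is folded into one number; individual records are not kept.
        self.weight = sum(values) / len(values) if values else 0.0


@dataclass
class Database:
    """A traditional store: each record can be looked up and deleted."""
    rows: dict[str, float] = field(default_factory=dict)

    def delete(self, key: str) -> None:
        self.rows.pop(key, None)


db = Database({"alice": 10.0, "bob": 20.0, "carol": 90.0})
model = ToyModel()
model.train(list(db.rows.values()))
weight_before = model.weight  # 40.0: carol's value is baked into the weight

db.delete("carol")            # the record is gone from storage...
weight_after = model.weight   # ...but the learned weight is still 40.0

print("carol" in db.rows, weight_before, weight_after)

# In this toy, the only way to "forget" carol is to retrain on what remains.
model.train(list(db.rows.values()))
print(model.weight)
```

In a real neural network, a single record’s influence is spread across many parameters rather than one average, which is why removing it after the fact is far harder than deleting a row.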

How Data Exposure Happens in Practice

Today, data exposure usually starts with a simple routine.

Internal documents get pasted into chat tools for quick summaries. Strategy notes are rewritten for ‘clarity.’ HR drafts and sales emails - all run through AI assistants because it saves time. On top of that, AI is now embedded directly inside SaaS platforms you already trust, and as a result, you don’t even notice when data crosses the line.

That’s where inference comes in.

Over time, models reconstruct context using the fragments you leave behind.

So even if you never paste anything labeled confidential, the system learns what confidential looks like.

Multiple streams of small inputs quietly converge. Each one feels harmless on its own. Together, they form a detailed profile - your role inside an organization, how much authority you have, what decisions you influence, how risk-tolerant you are, even internal timelines or pressure points.
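A minimal Python sketch of that convergence, assuming hypothetical prompt fragments and a naive keyword-based “inference” step (real systems rely on far richer signals). No single fragment says much on its own, but merged together they outline role, authority, pressure points, and timelines.

```python
from collections import defaultdict

# Hypothetical fragments a user might paste over several weeks.
FRAGMENTS = [
    "Summarize this board deck before Friday's budget review",
    "Rewrite my note to the team about the Q3 hiring freeze",
    "Draft a reply approving the vendor contract up to $200k",
    "Make this email to the board sound less alarmed",
]

# Naive, illustrative rules: keyword -> (profile attribute, inferred value).
RULES = {
    "board": ("role", "senior leadership"),
    "approving": ("authority", "signs off on spend"),
    "hiring freeze": ("pressure point", "headcount constraints"),
    "friday": ("timeline", "budget review this week"),
    "alarmed": ("risk posture", "managing bad news upward"),
}


def build_profile(fragments: list[str]) -> dict[str, set[str]]:
    """Merge weak signals from many fragments into one profile."""
    profile: dict[str, set[str]] = defaultdict(set)
    for text in fragments:
        lowered = text.lower()
        for keyword, (attribute, value) in RULES.items():
            if keyword in lowered:
                profile[attribute].add(value)
    return dict(profile)


# One fragment alone reveals a single attribute...
print(build_profile([FRAGMENTS[1]]))

# ...while all of them together sketch a fairly detailed picture.
for attribute, values in sorted(build_profile(FRAGMENTS).items()):
    print(f"{attribute}: {', '.join(sorted(values))}")
```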

That profile doesn’t exist for your benefit.

It can be used for targeting, manipulation, or other malicious purposes, whether by platforms optimizing for engagement or by anyone who later gains access to those systems.

Enterprise Comfort Myths

Most organizations simply misunderstand privacy risks.

There’s a widespread belief that enterprise tools imply privacy. If a product is used by large companies, it must be safe. That’s where the confusion starts.

Security and privacy get treated as the same thing. They aren’t.

A platform can be extremely secure - hardened infrastructure, strong authentication, encrypted traffic - and still be deeply invasive by design. Google is the clearest example. It does a great job keeping attackers out. But it also collects, analyzes, and monetizes behavior at scale.

So, is it secure? Yes.

But is it privacy-friendly? Not really.

This confusion between security and privacy is one of the biggest reasons people struggle to understand AI privacy risk, which is why I’m currently working on a separate piece on my publication that breaks this distinction down from the ground up. If you’re new to the privacy space, that article is meant to give you a solid baseline, so stay tuned for it.

Then comes the compliance myth.

If an app is compliant, it doesn’t mean it’s secure; it just means it’s “good enough to operate.” In reality, the tools people prefer are rarely the most restrictive ones.

Compliance is treated as a finish line when it’s really just a minimum threshold. In practice, the compliant systems that actually get adopted are often the most permissive ones, because convenience wins.

Ask yourself honestly: “Which app do people use every day - the one that asks for multiple verification steps, or the one that lets them in instantly?”

You already know the answer.

Then, there’s faith in employees.

The belief that sensitive data isn’t being shared or that people will instinctively know where the line is - that’s not how things work. Most users don’t understand how AI systems work, what inference means or how small inputs accumulate. When something feels confusing or time-consuming, it gets handed to a model.

Why Deleting Isn’t Deleting

Users commonly think that pressing ‘delete chat’ or clearing history means their data is erased, but this is deeply misleading.

In reality, deleting a chat often only removes it from your visible interface, not from backend systems. When you delete a conversation in most AI services, the visible history disappears, but multiple copies - backups, logs, etc. - can persist behind the scenes.

In fact, in some high-profile cases, platforms have been compelled by legal orders to preserve deleted chats indefinitely, which makes the delete button little more than cosmetic.

This isn’t unique to one company. Across multiple AI ecosystems, data controls are complex: opt-out toggles may stop future training use, but they don’t remove what’s already been ingested into model training or backup systems.
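The gap between what the interface shows and what the backend keeps can be sketched in a few lines of Python. This is a hypothetical model of one common pattern (soft deletes plus backups), not a description of any specific vendor’s system. It also assumes a training opt-out that only applies to data collected after the toggle is flipped, matching the behavior described above.

```python
from dataclasses import dataclass, field


@dataclass
class Chat:
    chat_id: int
    text: str
    deleted: bool = False  # soft-delete flag: hidden from the user, not erased


@dataclass
class ChatService:
    """Hypothetical chat backend illustrating soft deletes, backups, and opt-outs."""
    chats: list[Chat] = field(default_factory=list)
    backups: list[str] = field(default_factory=list)
    training_set: list[str] = field(default_factory=list)
    training_opt_out: bool = False

    def send(self, chat_id: int, text: str) -> None:
        self.chats.append(Chat(chat_id, text))
        self.backups.append(text)  # stand-in for a routine backup job
        if not self.training_opt_out:
            self.training_set.append(text)  # collected while opt-out was off

    def delete(self, chat_id: int) -> None:
        # "Delete" only flips a flag; backups and the training set are untouched.
        for chat in self.chats:
            if chat.chat_id == chat_id:
                chat.deleted = True

    def visible_history(self) -> list[str]:
        return [c.text for c in self.chats if not c.deleted]


svc = ChatService()
svc.send(1, "Q3 layoff plan draft")
svc.training_opt_out = True   # the toggle only affects what comes next
svc.send(2, "Lunch ideas")
svc.delete(1)

print(svc.visible_history())  # the user no longer sees chat 1
print(svc.backups)            # but the backup still holds it
print(svc.training_set)       # and it was collected for training before the opt-out
```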

What Organizations Commonly Fail to Put in Place

Even when businesses know they’re using AI, many still fail to build the basics that would actually keep their data safe.

Let’s take a look at the most common mistakes organizations make:

No Defined AI-safe vs Restricted Data Categories

Most teams don’t classify what can and cannot be shared with AI systems. Without explicit categories, employees end up exposing sensitive information without realizing it. Gartner, drawing on its survey research, predicts that “through 2026, organizations will abandon 60% of AI projects unsupported by AI-ready data.”

No Shared Definition of Safe Data

Security teams might think everyone agrees on what counts as confidential, but that’s not the case. Vanta’s research shows that while many companies believe they have oversight of AI tools, only about a third have even a formal AI policy in place, meaning teams operate with very different assumptions about what’s okay to input into AI.

Vendor Assurances Taken at Face Value

AI vendors commonly promise enterprise-grade security, but that isn’t the same as privacy or responsible data usage. Without solid verification, organizations may assume their vendors handle data appropriately - even when transparency about storage, retention, or training practices is absent. This lack of scrutiny amplifies third-party risk.

Limited AI Literacy at the Decision Level

A big, underreported gap is simple understanding: many folks don’t know when or how to use AI safely. Only a few grasp basic AI risk concepts like inference, model retention, and how an LLM generates output. Because of this, policies become window dressing, and risky practices become the default.

How Leaders Can Reduce AI Risk

Most AI risk comes from the absence of structure. Let’s take a look at how one can reduce the risks by following some basic steps:

Define Clear AI Data Boundaries

Organizations need explicit rules about what can and cannot be put into AI systems. There should be written categories (public, internal, sensitive, restricted) along with written rules for what happens when each one touches an AI tool.
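One way to make those categories explicit is to write them down as something tooling can enforce. A minimal Python sketch: the four categories come from the text, while the tool tiers and the allow/deny matrix are hypothetical placeholders each organization would set for itself.

```python
from enum import Enum


class DataCategory(Enum):
    PUBLIC = 1
    INTERNAL = 2
    SENSITIVE = 3
    RESTRICTED = 4


class ToolTier(Enum):
    CONSUMER = "consumer"             # personal accounts, unknown retention
    APPROVED_ENTERPRISE = "approved"  # vetted vendor, contract reviewed


# Hypothetical policy: the most sensitive category each tool tier may receive.
MAX_ALLOWED = {
    ToolTier.CONSUMER: DataCategory.PUBLIC,
    ToolTier.APPROVED_ENTERPRISE: DataCategory.INTERNAL,
}


def may_submit(category: DataCategory, tier: ToolTier) -> bool:
    """Return True if data of this category may be sent to a tool of this tier."""
    return category.value <= MAX_ALLOWED[tier].value


def check(category: DataCategory, tier: ToolTier) -> str:
    if may_submit(category, tier):
        return "allowed"
    # The written rule for what happens when disallowed data touches an AI tool.
    return f"blocked: {category.name} data is not permitted in {tier.value} tools"


print(check(DataCategory.INTERNAL, ToolTier.APPROVED_ENTERPRISE))
print(check(DataCategory.SENSITIVE, ToolTier.APPROVED_ENTERPRISE))
print(check(DataCategory.INTERNAL, ToolTier.CONSUMER))
```

Encoding the categories as an ordered scale keeps each rule to a single comparison. In this sketch, sensitive and restricted data are blocked from every tool tier, a deliberately conservative default.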

Often, employees don’t get enough context about what each category means. Leaders assume that if they know it, everyone else does too, and that assumption can come back to bite the entire company later on.

NIST’s AI Risk Management Framework (AI RMF) gives a solid baseline for classifying and managing AI-related data risk.

Centralize and Monitor Approved Tools

If teams are using AI, leadership should know which tools they’re using, because when every team picks its own assistant, data exits through a dozen doors. Approved tools, usage tracking, and clear escalation paths restore control without banning AI outright.

A common misconception here is that centralizing tools means blocking innovation. It doesn’t; it means more visibility, logging, and policy enforcement.
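A minimal Python sketch of what “approved tools plus usage tracking” might look like at a gateway. The tool hostnames and the escalation behavior are hypothetical; in practice this logic usually lives in a CASB, secure web gateway, or proxy rather than hand-written code.

```python
import logging
from collections import Counter

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("ai-gateway")

# Hypothetical allowlist of sanctioned AI tool hosts.
APPROVED_HOSTS = {"assistant.approved-vendor.example", "copilot.internal.example"}

usage = Counter()            # visibility: (team, host) -> request count
unapproved_seen = Counter()  # unsanctioned hosts, for the escalation queue


def route_request(team: str, host: str) -> bool:
    """Log every AI request; allow approved hosts, flag the rest for escalation."""
    usage[(team, host)] += 1
    if host in APPROVED_HOSTS:
        log.info("allowed team=%s host=%s", team, host)
        return True
    unapproved_seen[host] += 1
    log.warning("blocked team=%s host=%s (escalate to security review)", team, host)
    return False


route_request("sales", "assistant.approved-vendor.example")
route_request("hr", "random-summarizer.example")
route_request("hr", "random-summarizer.example")

# The escalation list shows which unapproved tools teams are reaching for.
print(unapproved_seen.most_common())
```

Whether an unapproved host is blocked or merely logged is a policy choice; either way, the usage counts give leadership the visibility the text describes.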

CISA guidance on managing third-party and SaaS risk, along with the CASB-style monitoring approaches used in modern enterprises, are excellent resources for implementing this.

Question Vendor Data Retention and Training Defaults

‘Your data isn’t used for training’ should trigger follow-up questions. Leaders should demand documentation, defaults in writing, and clarity on model updates. Blindly trusting vendors is one of the major sources of AI-related damage, so leaders should start asking questions like:

Is data stored?

For how long?

Is it used to train models?

Can it be audited or deleted?

When it comes to vendors, keep one thing in mind: “enterprise” doesn’t automatically mean no training or no retention. And if a vendor can’t answer plainly, that is the answer.
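The four vendor questions can be turned into a simple, repeatable review. A minimal Python sketch; the field names, the list of “vague” answers, and the example vendor responses are all hypothetical. It encodes the rule above: a missing or non-committal answer is treated as a red flag, not as a pass.

```python
from dataclasses import dataclass
from typing import Optional

QUESTIONS = {
    "stored": "Is data stored?",
    "retention": "For how long?",
    "training": "Is it used to train models?",
    "audit_delete": "Can it be audited or deleted?",
}

# Naive heuristic: answers that sound reassuring but say nothing.
VAGUE = {"", "it depends", "enterprise-grade", "industry standard"}


@dataclass
class VendorReview:
    vendor: str
    answers: dict[str, Optional[str]]

    def red_flags(self) -> list[str]:
        """Return every question the vendor left blank or answered vaguely."""
        flags = []
        for key, question in QUESTIONS.items():
            answer = (self.answers.get(key) or "").strip().lower()
            if answer in VAGUE:
                flags.append(question)
        return flags


review = VendorReview(
    vendor="ExampleAI (hypothetical)",
    answers={
        "stored": "Yes, in US-region storage",
        "retention": "30 days, then purged from primary and backup systems",
        "training": "enterprise-grade",  # sounds reassuring, answers nothing
        # "audit_delete" was never answered
    },
)

for question in review.red_flags():
    print("Unanswered:", question)
```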

Teach AI Literacy Where Power Actually Sits

Most AI decisions today are made by non-technical leaders. If they don’t understand how inference works, how data accumulates, or how learning systems behave, no policy will ever hold. Guardrails written without that understanding simply fail.

And when I say AI literacy, I don’t mean knowing how to build models. I mean knowing:

When AI should be used, and when it shouldn’t.

How LLMs actually generate outputs.

Why delete doesn’t mean delete.

How small, harmless-looking inputs compound into long-term exposure.

It also means having basic awareness of how data is retained, reused, and inferred, and staying current with how fast this space is moving.

This gets deprioritized because leadership is focused on growth, efficiency, and revenue. That’s understandable. But ignoring AI literacy at the decision level turns speed into structural risk. The result is policies that look responsible on paper but collapse under real-world use.

One more important point: this isn’t just an engineering problem. Every team touches sensitive information. Taking even a small amount of time to educate executives, HR, legal, operations, and frontline staff reduces not just AI-related risk, but broader threats as well.

The same lack of understanding that leads people to overshare with models is what makes social engineering so effective. I’ve written a detailed piece on social engineering and how it exploits human behavior. If you want to stay armed in this environment, it’s worth reading:

What the Future of AI Means for Privacy

Congrats if you made it this far - in an age where attention itself has been hijacked by algorithms, staying present through an entire piece is a big thing.

Looking ahead, I believe one of the biggest shifts on the horizon is autonomous AI agents. These are systems designed to act independently, make decisions, and pursue goals with minimal human oversight. They already exist in enterprise workflows, customer support bots, scheduling assistants, and agentic browsers that can take actions based on behavior patterns rather than direct prompts.

The privacy implications are profound. Autonomous agents often access and combine large datasets as part of their work, and in doing so, they can collect more than users expect - sometimes inferring sensitive details and inadvertently exposing data across systems where privacy controls are weaker.

This means deeper and less visible integrations with workflows - where your calendar, contacts, financial info, and even workplace systems become part of the agent’s decision environment without explicit, granular consent.

At the same time, regulations and governance frameworks are struggling to keep up. Even well-intentioned laws like GDPR and the AI Act are being revisited and scaled back in some regions, potentially loosening safeguards just as autonomy grows.

Now, zoom out for a second and try to notice the bigger picture.

The longer we delay designing privacy and governance into systems rather than reacting to them after they’re deployed, the more those defaults will be governed by convenience rather than individual rights.

Anyway, it’s time to wrap up.

Just keep in mind - staying aware may be the single biggest weapon in the digital era. Awareness doesn’t prevent every threat, but it ensures you know what to defend against before the costs arrive.

Your Turn

If this piece made you pause - even for a moment - then it did its job.

Maybe it clarified something you hadn’t fully named before.

Maybe it challenged an assumption you were carrying without realizing it.

Or maybe it just made you more aware of what’s quietly happening in the background.

If any of that landed, I’d genuinely like to hear from you.

What changed?

What still feels unclear?

What are you questioning now?

Drop a comment. I read them, and I care about the conversations that come out of this work.

If you want more writing like this, consider subscribing and restacking. Not for the numbers, but because this is how ideas like these travel. Attention is currency online, and support is what keeps independent work alive.

Lastly, a sincere thanks to Joel Salinas for the opportunity to share this work with his audience. Platforms matter. So does trust. I don’t take either lightly.

Dive Deeper

Thank you, Mohib! Don’t get caught assuming there is full privacy where there isn’t.

Mohib gave you the technical foundation most AI conversations skip. Now here’s what I want you to do with it.

If you manage a team using AI, start asking three questions this week:

What data categories have we defined as safe versus restricted for AI use?

Which AI tools are our teams actually using (not just the ones IT approved)?

Do our people understand what happens when they delete a chat?

Most organizations I work with can’t answer these clearly. That’s not a failure. It’s just where we are. The good news is you can fix this faster than you think.

Thanks to Mohib for bringing this level of clarity to something most people treat as too technical to understand. If you want more of his work, subscribe to SK NEXUS. He’s doing something rare: making complex technology accessible without dumbing it down.

Your turn: What’s one AI tool your team uses that you’re not 100% confident about from a data perspective? Drop it in the comments. I read every single one.

Join 3,500+ Leaders Implementing AI today…

Which Sounds Like You?

“I need systems, not just ideas” → Join Premium (Starting at $39/yr, $1,345+ value): Tested prompts, frameworks, direct coaching access. Start here

“I need this built for my context” → AI coaching, custom Second Brain setup, strategy audits. Message me or book a free call

“I want to reach these leaders” → Sponsor one post (short supply). 3,500+ executives. Check availability

PS: Many subscribers get their Premium membership reimbursed through their company’s professional development $. Use this template to request yours.
