Real Innovation vs Innovation Theater in AI

Part 3 of the AI Leadership Triad: Innovation that serves mission, not ego

Thanks for reading! To access our community, full GPT Agent prompt library, coaching, and AI tools saving leaders 5-10 hours per week, check out our Premium Hub.

Tomorrow, I’ll give thanks for people: my wife and two kids, this community you are part of, and friends who show up when things are hard.

And as I write this, I’m thinking about what I’m grateful for when it comes to AI. But I’m not naive. AI brings legitimate fears: it will displace jobs, and the climate cost of training these models is staggering. There are real harms we can’t ignore. But I’m also watching mission-driven leaders use these tools to 10x their impact on the world, entrepreneurs launch life-saving products with a staff of one, and non-profit leaders reach tens of thousands more people than they could without AI, often in the world’s most vulnerable areas.

The question isn’t whether AI is good or bad. It’s whether we’re building the skills to use it in the service of people, not just productivity.

Which brings me to the third pillar of the AI Leadership Triad: innovation. But we need to be honest about what innovation actually means.

Creativity, as shown by Anjeanette Carter, sees what AI misses.

Adaptation, as broken down by Dennis Berry, evolves your methods while maintaining mission.

Innovation makes those adaptations systematic.

It’s how you build capability instead of just responding to change.

But most “innovation” is theater. Adopting the latest AI tool because everyone else is. Launching initiatives that look impressive on LinkedIn but create no lasting value. Real innovation asks one question: does this make us better at our core work?

I asked John Brewton to explain the difference because he advises 30,000 operating strategists who need innovation that actually works. He is a Harvard-trained economist with real operator experience. He knows innovation theater when he sees it. More importantly, he knows what works. Here’s what he shared.

By the way, John is one of my favorite authors on Substack. Don’t miss out on his work.

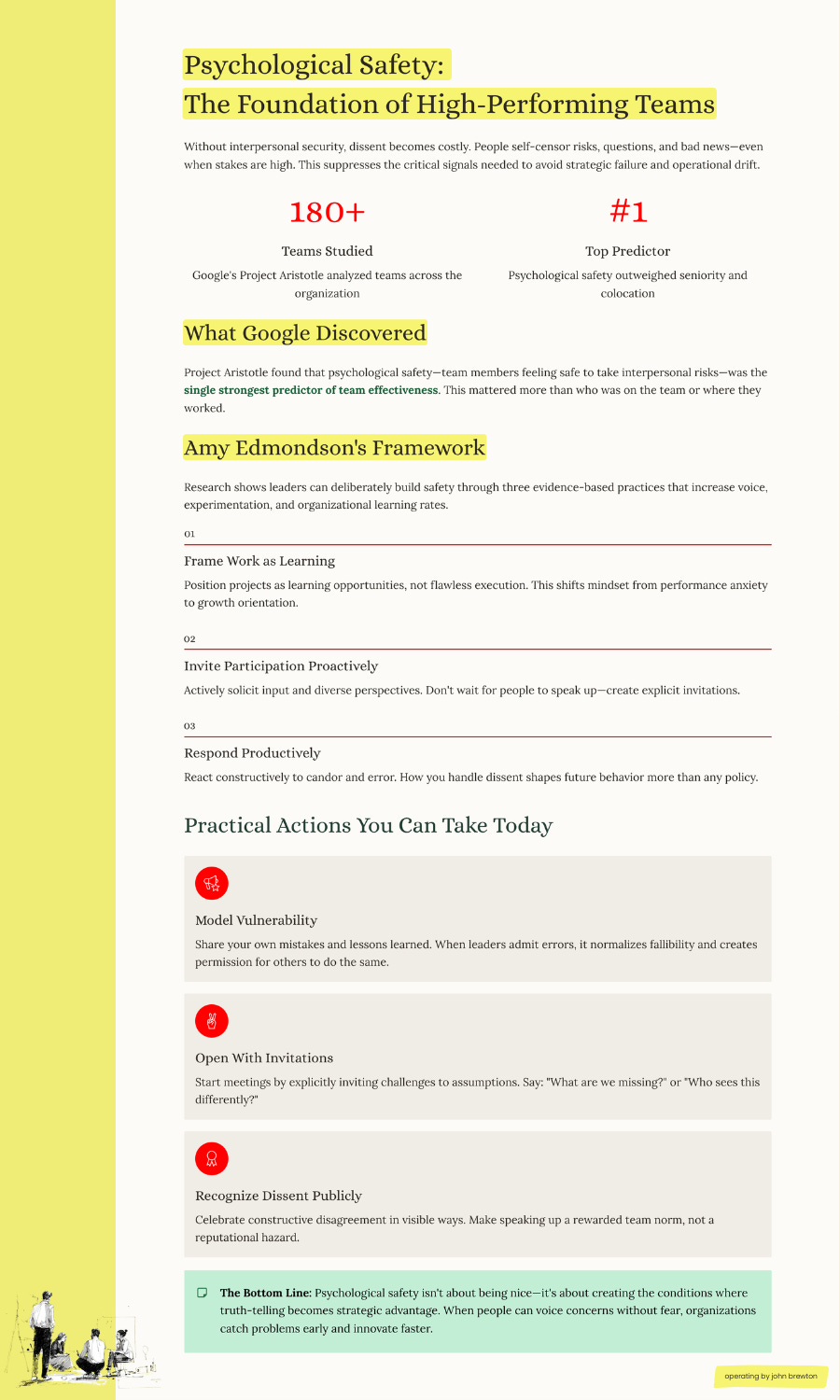

The Boring AI Skill Nobody Talks About: How Two Years of Prompt Engineering Changed Everything

Everyone asks which AI tools I use: GPT-5, Sonnet 4.5, Opus 4.1, Gemini 2.5, Perplexity Research and Labs, Comet, Gamma, Midjourney, Notebook LM, and so on. But after nearly two years of daily practice, I’ve realized something:

I honestly can’t tell anymore whether the tools got better or I just got better at prompting them.

The real competitive advantage isn’t my tool stack or some master system I’ve built. It’s prompt literacy, competency, and execution. The accumulated knowledge of how to shape AI behavior, chain requests together effectively, and extract maximum value from any model or tool has become my superpower.

This is the most boring, most valuable skill in the AI era.

And we don’t talk about it enough.

If compound interest is the eighth wonder of the world, compounded learning from improved prompting might just become the ninth.

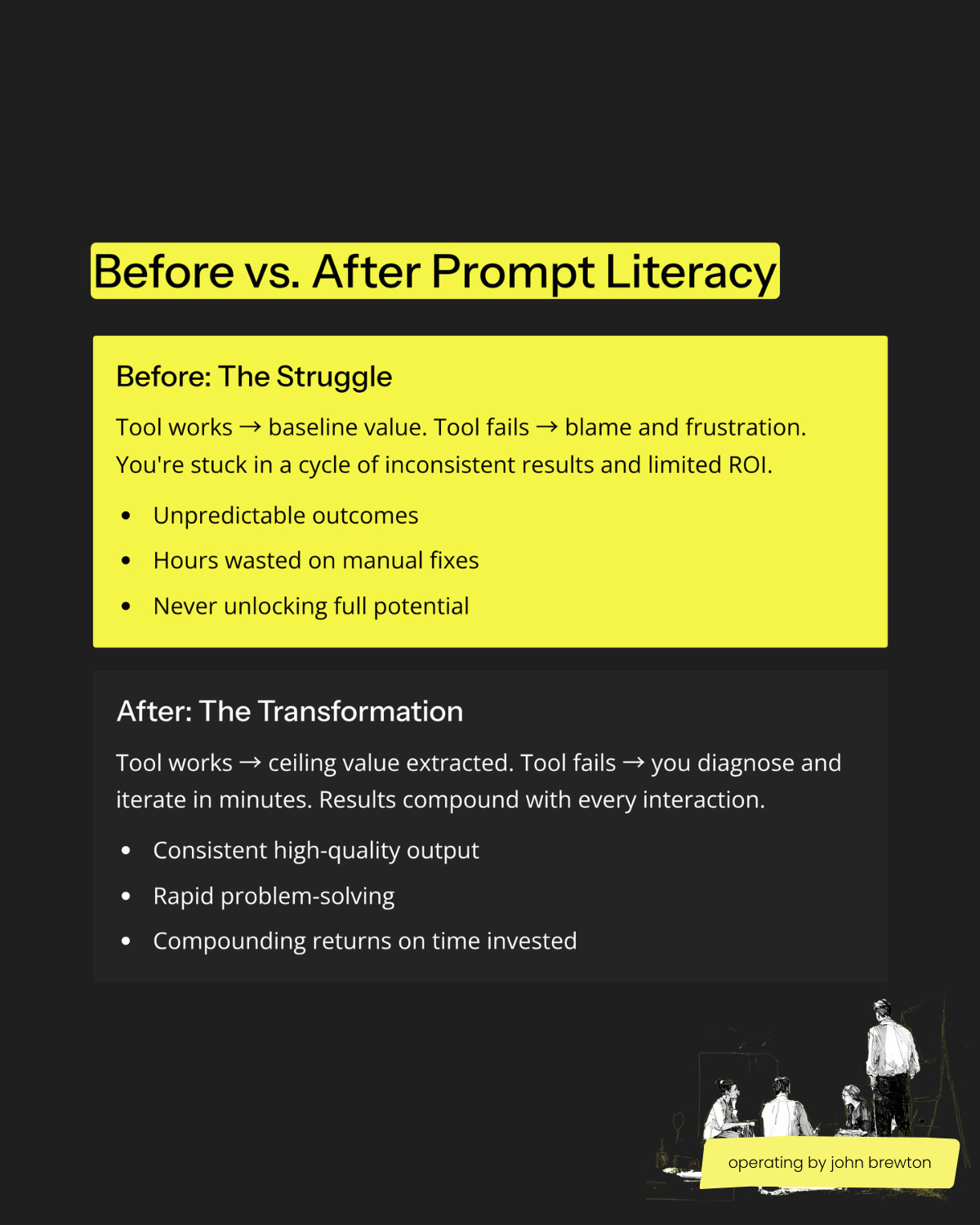

Every prompt you write, and every response it produces, teaches you something. Every failure refines your approach. Over two years, those small improvements compound into an exponential advantage. It’s exactly the kind of unsexy, incremental work I described in my “Boring AI” framework: mundane automation that, right now, delivers more ROI for companies everywhere than flashy moonshots do.

The Invisible Skill That Compounds

After nearly two years of daily AI use, I’ve developed an intuitive understanding of how to structure requests for different models, when to provide context versus when to be directive, how to chain prompts to build complexity, how to build optimal prompts with the help of the models, and what each tool’s strengths and weaknesses are. I’ve become prompt-literate.

Take my LucidChart workflow as an example. I don’t simply use LucidChart’s AI assistant to create process maps. Here’s what happens:

I use Claude (Sonnet 4.5 or Haiku) to help me craft a precise BPMN process-mapping prompt, refined through a process-mapping project I’ve built that contains examples, standards, and client details.

Claude outputs an optimized prompt with proper BPMN terminology, decision-point notation, and swim-lane structure.

I feed that pre-optimized prompt into LucidChart’s AI assistant.

LucidChart generates an optimal, clean map because it received expert, thorough instructions.

Most people feed tools mediocre prompts and get mediocre results. I’ve learned to use one AI to optimize prompts for another AI.
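The vertical chain described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual setup: `vertical_chain`, `fake_prompt_writer`, and `fake_executor` are hypothetical names, and both tools are modeled as plain “text in, text out” functions standing in for Claude and LucidChart’s AI assistant, so no real API is called.

```python
from typing import Callable

# Assumption: any model or tool is treated as "text in, text out".
Model = Callable[[str], str]


def vertical_chain(task: str, project_context: str,
                   prompt_writer: Model, executor: Model) -> str:
    """Use one model to write an expert prompt, then hand that prompt to a second tool."""
    # Step 1: ask the first model for an optimized prompt, grounded in project context.
    meta_prompt = (
        "Using the standards and examples below, write a precise prompt that "
        f"another AI tool can follow to complete this task: {task}\n\n"
        f"Project context:\n{project_context}"
    )
    optimized_prompt = prompt_writer(meta_prompt)
    # Step 2: the second tool receives the optimized prompt, not the raw task.
    return executor(optimized_prompt)


# Hypothetical stubs so the sketch runs offline; real clients would replace them.
def fake_prompt_writer(meta_prompt: str) -> str:
    return "Draw a BPMN map with one swim lane per role. " + meta_prompt.splitlines()[0]


def fake_executor(prompt: str) -> str:
    return f"[diagram generated from a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    print(vertical_chain("map the client onboarding process",
                         "Use BPMN 2.0 notation with labeled decision gateways.",
                         fake_prompt_writer, fake_executor))
```

The design point is that the executor never sees the raw task; it only sees what the first model wrote, which is why the quality of the first prompt carries through.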

Is ChatGPT 5 Thinking mode actually better than GPT-4? Unquestionably. But learning to prompt better, more critically, and with a sincere desire to uncover every problematic part of what I’m working on has had the greatest impact. The quality of my output has multiplied, and the value of my time has expanded just as dramatically.

This matters because tool capabilities are commoditized. Everyone has access to the same models. But knowing how to use them better creates an accumulated advantage that doesn’t depreciate when the next version launches. Same as it ever was.

What Two Years of Prompt Engineering Taught Me

Learning 1: Context Architecture Matters More Than Clever Prompts

Early on, I tried “clever” one-shot prompts. Now I know that building context through projects, custom instructions, and uploaded assets does more than any single prompt ever could. I’ve learned the value of specificity and the importance of actively seeking criticism of my ideas, work plans and arguments.

My Claude and ChatGPT projects contain writing samples, style guides, and structural templates. When I prompt for an article outline, the AI isn’t working from scratch; it’s working from dozens of examples of my best work and the materials I would most want to reference if my time on this earth were unlimited and I could draw technical and creative inspiration from my favorite sources for every task or project.

The prompt isn’t magic. The architecture around the prompt and the assets that interact with the prompt create something that, at its best, begins to feel like magic.
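One way to picture “the architecture around the prompt” is as a step that wraps every request in stored project assets before it reaches a model. The sketch below assumes a hypothetical `Project` holding a style guide, templates, and writing samples, plus a hypothetical `build_prompt` helper; Claude and ChatGPT projects do this inside their own apps, so this only illustrates the idea.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical container for the assets a project keeps alongside every prompt."""
    style_guide: str
    templates: list[str] = field(default_factory=list)
    writing_samples: list[str] = field(default_factory=list)


def build_prompt(project: Project, request: str, max_samples: int = 3) -> str:
    """Prepend project assets so the model never starts from scratch."""
    sections = [f"STYLE GUIDE:\n{project.style_guide}"]
    for i, template in enumerate(project.templates, start=1):
        sections.append(f"TEMPLATE {i}:\n{template}")
    # Cap the number of samples to keep the context focused.
    for i, sample in enumerate(project.writing_samples[:max_samples], start=1):
        sections.append(f"EXAMPLE {i}:\n{sample}")
    # The actual request always comes last, after the context it depends on.
    sections.append(f"REQUEST:\n{request}")
    return "\n\n".join(sections)


substack = Project(
    style_guide="Authoritative but accessible. Short paragraphs.",
    templates=["Hook / Framework / Tactical takeaways"],
    writing_samples=["Boring AI: why mundane automation wins..."],
)
print(build_prompt(substack, "Outline an article on prompt literacy."))
```

The request itself stays short; most of what the model receives is the reusable context, which is the point Learning 1 makes.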

Learning 2: Chaining Is Where Power Lives

Single prompts are linear.

Prompt chains are exponential.

The LucidChart example demonstrates what I call vertical chaining: using Claude to craft better prompts for LucidChart’s AI assistant. My content development workflow demonstrates horizontal chaining: moving ideas through a series of tools that progressively refine them.

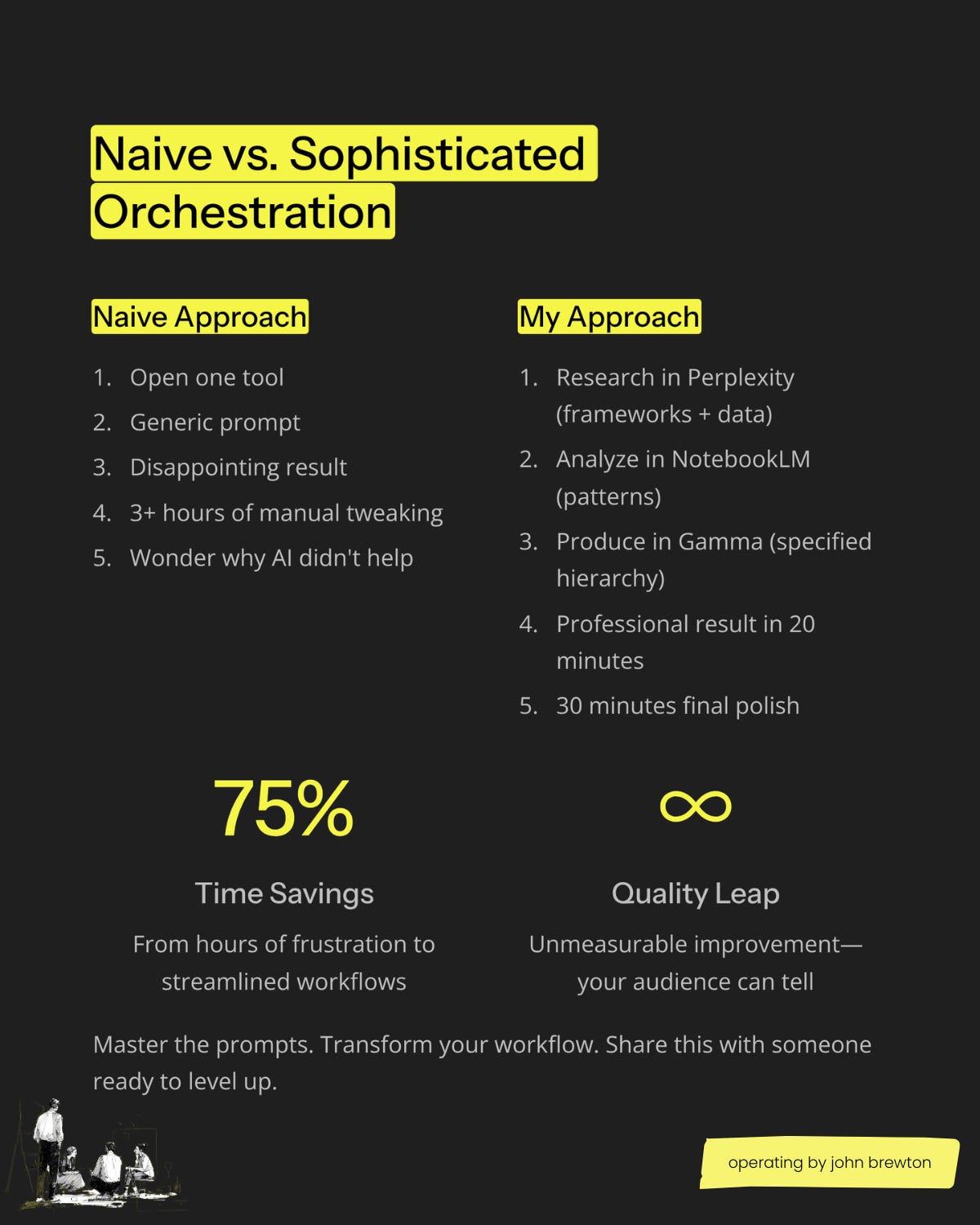

Here’s how I develop content for articles, infographics, and presentations:

Step 1: Perplexity (Research Phase)

I don’t search; I craft prompts that surface diverse perspectives (some explicitly cited, some not), academic sources, and practical applications. I’ve learned how to ask questions that generate usable research, not just information dumps. I’m prompting for frameworks, contradictions, and data points that will translate to visual formats later. What’s important about this phase is that I’m only collecting information from diverse, credible, and useful sources. I’m not concerned with how those sources relate to one another or how my arguments are structured. Not YET.

Step 2: Notebook LM (Study Phase)

I use Notebook LM to study what I found. What makes NLM so valuable is its limitations: it responds only from the sources you give it. It’s a closed room in which to experiment with, and discover, what you’ve found. My prompts are analytical:

“What are the contradictions between these sources?”

“What frameworks emerge from this research?”

“What’s missing from this analysis?”

“How do the research methodologies between these sources differ?”

I’m using AI as a thinking partner, not a ghostwriter.

Step 3: Gamma (Production Phase)

By the time I reach Gamma, I’m not starting cold. I’m feeding it prompts, notes, and outlines that have been refined through two prior steps:

For infographics: “Create a visual hierarchy showing [framework from Notebook LM analysis] with data points from [Perplexity research]. Use progressive disclosure and ensure each section can stand alone.”

For carousels: “Transform this insight into a 10-slide carousel. Start with the counterintuitive hook, build through evidence, and end with application. Each slide should be skimmable but connected.”

For slide decks: “Create a 15-slide executive presentation on [topic]. Opening: problem statement. Body: three-part framework. Close: decision points and next steps. Balance text and visuals for boardroom consumption.”

Most people prompt Gamma with “make me an infographic about AI in business” and wonder why it’s generic. I’m prompting with pre-researched, pre-analyzed, format-specific instructions because I’ve done the intellectual work in the prior steps. Gamma isn’t doing my thinking; it’s executing my already-refined thinking into a visual format.

Each tool in the chain receives better inputs because of the work done in previous steps. This only works because I’ve learned how to prompt Perplexity for synthesizable research, Notebook LM for actionable analysis, and Gamma with enough specificity to produce professional-grade output.
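The research, study, and production steps behave like a pipeline in which each stage’s output becomes the next stage’s input. Here is a minimal sketch under that assumption. `run_chain`, `research`, `study`, and `produce` are hypothetical stubs standing in for Perplexity, Notebook LM, and Gamma; none of those products’ real APIs are used.

```python
from typing import Callable

# Assumption: each stage takes the previous stage's output as text.
Stage = Callable[[str], str]


def run_chain(seed: str, stages: list[tuple[str, Stage]]) -> str:
    """Pass work through each stage in order, printing a trace of the hand-offs."""
    work = seed
    for name, stage in stages:
        work = stage(work)
        print(f"{name}: {work}")
    return work


# Hypothetical stubs: each stage refines what it receives instead of starting cold.
def research(topic: str) -> str:
    return f"sources on '{topic}' (frameworks, contradictions, data points)"


def study(sources: str) -> str:
    return f"framework + gaps distilled from {sources}"


def produce(analysis: str) -> str:
    return f"10-slide carousel built on {analysis}"


final = run_chain("prompt literacy", [
    ("Research (Perplexity)", research),
    ("Study (Notebook LM)", study),
    ("Production (Gamma)", produce),
])
```

Because the final stage only ever sees refined input, the printed trace makes it easy to spot which hand-off lost fidelity when the output disappoints.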

Learning 3: Different Models Have Different Personalities

ChatGPT 5 Thinking mode excels at generative writing and exploratory thought. Claude dominates analytical work and follows complex structural instructions. Gemini shines in visual generation and multimodal management tasks.

I’ve learned to match prompt style to model personality. That only comes from hundreds of hours (I’m not overstating) of comparative use, including plenty of failed experiments where I used the wrong tool for a task, or discovered that phrasing the same request differently for each model produces dramatically different results.

Learning 4: Specificity Beats Creativity

My early prompts looked like this: “Give me a list of 15 famous Jack Welch quotes.”

I was prompting using search syntax.

My current prompts look like this:

“Help me construct an outline for a 1,500 to 1,750 word article for my Substack ‘Operating by John Brewton’ that extends the ‘Boring AI’ framework by examining prompt literacy as compound interest and draws on the specific attached research assets. Use examples from my LucidChart and Gamma workflows. Target audience: business operators and strategists. Tone: authoritative but accessible. Structure: inverted pyramid with tactical takeaways.”

Boring specificity beats creative vagueness every time.
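The current-style prompt above is specific because it fills the same slots every time: task, audience, tone, structure, and length. A sketch that turns those slots into a reusable template follows, assuming a hypothetical `PromptSpec` class (an illustrative name, not a feature of any particular tool).

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical set of slots that make a prompt specific rather than search-like."""
    task: str
    audience: str
    tone: str
    structure: str
    min_words: int
    max_words: int

    def __post_init__(self) -> None:
        # Reject an impossible length range before it reaches a model.
        if self.min_words > self.max_words:
            raise ValueError("min_words must not exceed max_words")

    def render(self) -> str:
        # The :, format spec inserts thousands separators (1500 -> "1,500").
        return (
            f"{self.task} Length: {self.min_words:,} to {self.max_words:,} words. "
            f"Target audience: {self.audience}. Tone: {self.tone}. "
            f"Structure: {self.structure}."
        )


spec = PromptSpec(
    task="Help me construct an outline for a Substack article extending the 'Boring AI' framework.",
    audience="business operators and strategists",
    tone="authoritative but accessible",
    structure="inverted pyramid with tactical takeaways",
    min_words=1500,
    max_words=1750,
)
print(spec.render())
```

A search-style prompt leaves every slot empty; filling them explicitly is what “boring specificity” looks like in practice.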

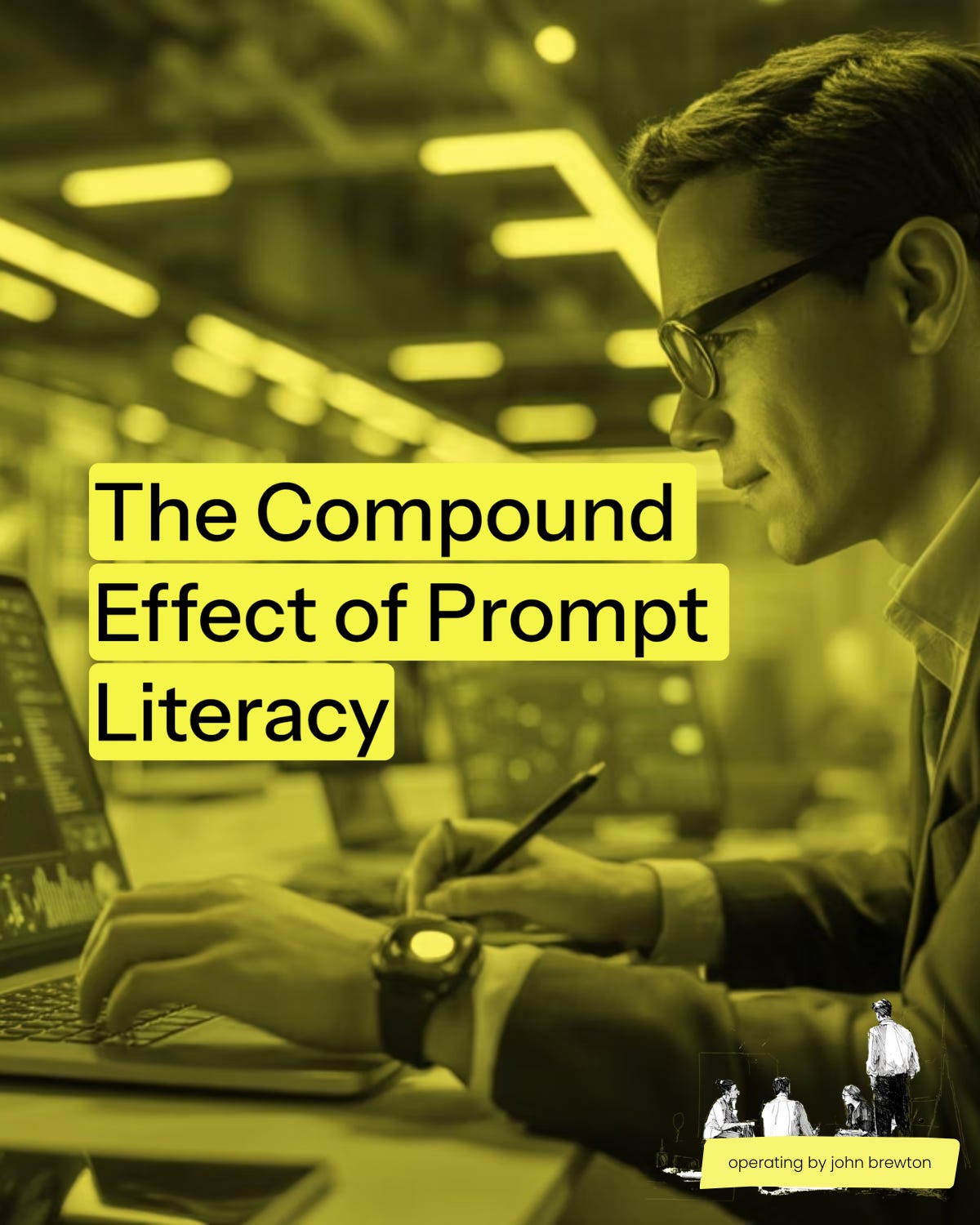

How Prompt Literacy Multiplies Tool Value

This only works with prompt literacy. You can’t orchestrate tools effectively without understanding what each tool does well, how to structure prompts for each tool’s strengths, how to pass work between tools without losing fidelity, and when to use AI versus human judgment.

My productivity improvement wasn’t linear. Year one of AI use: maybe 20% productivity gain. Year two: probably 200%+ improvement. Not because tools got 10x better, but because my prompting got 10x more effective.

Why This Is The Most Boring AI Advantage

Everyone wants the sexy AI story:

“I automated my entire business!”

“AI wrote my book in a weekend!”

“This one weird prompt hack changed everything!”

My story is duller: “I spent two years learning to write better prompts, and now I can’t tell if the tools got better or I just got better at using them.”

But here’s why this matters to operators, founders, analysts, and strategists:

You don’t have time to become an AI expert. You do have time to improve one workflow at a time. Prompt literacy is the bridge between “AI is hype” and “AI is core to my operation.”

My competitive advantage isn’t ChatGPT 5. It’s 500+ hours of learning how to prompt ChatGPT effectively. Tools depreciate. Skills compound.

Most people give up on AI after a few mediocre results, or keep going with only a middling ability to make the tools work in their favor. Winners treat prompt engineering as skill development, not tool use. They invest in learning, not just in subscriptions.

The meta-lesson: This article exists because I’ve built prompt literacy. The research, synthesis, structure, and clarity are only possible because I know how to extract that from AI tools. The output is the proof.

The Boring Invitation

Start documenting your prompts.

Note what works.

Diagnose what fails. Build templates. Chain workflows.

Do this for six months, then a year, then two years. The tools will change. Models will improve. But your accumulated prompt literacy will compound into an unfair advantage that no one can copy, because it’s built from your specific practice, failures, and refinements.
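Documenting prompts can start as simply as a list of records plus a success rate per template. This is a hypothetical sketch: `PromptRecord`, `success_rates`, the field names, and the `worked` flag are illustrative assumptions, and a spreadsheet does the same job.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PromptRecord:
    """One logged attempt: which template, which model, and whether it worked."""
    template: str
    model: str
    worked: bool
    note: str = ""  # what failed, or what change fixed it


def success_rates(log: list[PromptRecord]) -> dict[str, float]:
    """Share of attempts per template that produced usable output."""
    totals: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for record in log:
        totals[record.template] += 1
        wins[record.template] += record.worked  # True counts as 1, False as 0
    return {name: wins[name] / totals[name] for name in totals}


log = [
    PromptRecord("article-outline", "Claude", True),
    PromptRecord("article-outline", "ChatGPT", False, "too generic; add audience and tone"),
    PromptRecord("article-outline", "Claude", True),
    PromptRecord("quote-list", "ChatGPT", False, "search-style prompt"),
]
print(success_rates(log))
```

The `note` field is where diagnosis happens: over time it records which changes turned a failing template into a reliable one.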

In the age of AI, prompt literacy is the new literacy.

And like all literacy, it’s built one boring iteration at a time.

- john -

Thank you, John!!!

This completes the AI Leadership Triad. Creativity sees what AI misses. Adaptation transforms insights into sustainable change. Innovation makes it systematic.

These three skills don’t work separately. They feed each other. Creativity without adaptation creates chaos. Adaptation without innovation creates busy work. Innovation without creativity creates expensive theater.

The question isn’t which skill matters most. It’s: Do you have an intentional plan to develop all three?

PS: Many subscribers get their Premium membership reimbursed through their company’s professional development budget. Use this template to request yours.

Let’s Connect

I love connecting with people. Please use the following to connect or collaborate, if you have an idea, or if you just want to engage further:

LinkedIn / Community Chat / Email / Medium
