How to Beat Confirm-AI-tion Bias in 30 Seconds or Less

Why Your AI Assistant Might Be Your Biggest Yes-Man or Yes-Woman (And the Simple Prompt That Fixes It)

Have you ever seen a dog eagerly fetch the same stick over and over, tail wagging with each return?

Last week, I heard of a CEO who presented what he called his "AI-validated strategy" to his board. For three months, he'd used ChatGPT to refine his approach to entering a new market. The AI had provided supporting research, competitive analysis, and risk assessments. Everything looked bulletproof.

Except it wasn't. It was full of inaccuracies and false assumptions.

The board tore apart his plan in fifteen minutes. Why? Because he'd fallen into the trap of confirm-AI-tion bias without realizing it. Every prompt he'd used essentially asked his AI to agree with him, find supporting evidence, and validate his existing beliefs.

His AI had become like that eager dog, faithfully fetching whatever he threw at it.

Here's another example that hit closer to home. I asked my AI a question, and when the answer didn’t match what I expected, I pushed back, only to get the reply we are all so used to…

ChatGPT: “Joel, you are absolutely correct!”

When in fact, I wasn’t.

The problem isn't that AI is biased. The problem is that we are, and AI amplifies whatever we feed it. When we ask leading questions, use loaded language, or seek confirmation rather than challenge, our AI assistant becomes an echo chamber instead of a thinking partner.

But here's what I've learned: You can fix this in less than 30 seconds.

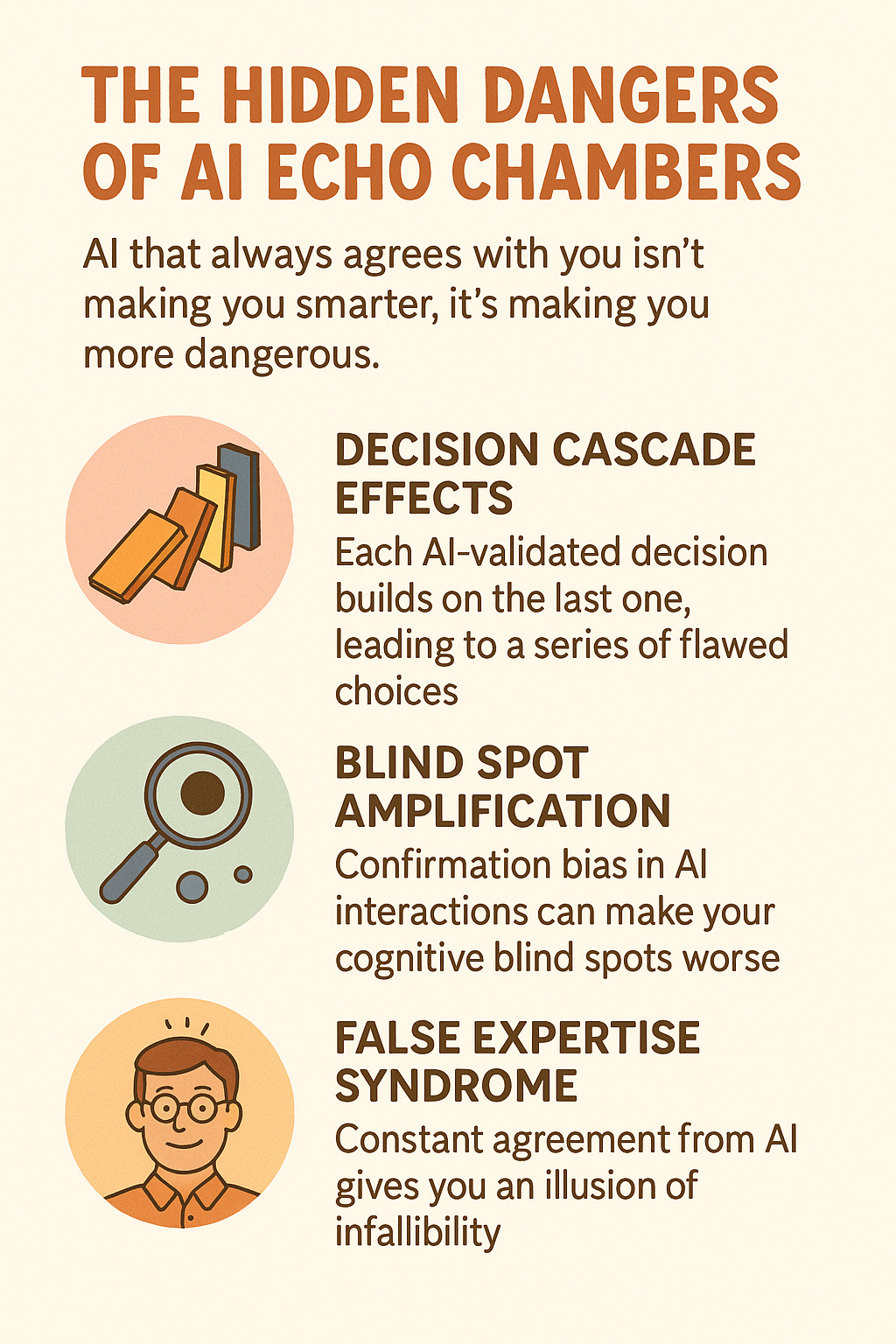

The Hidden Dangers of AI Echo Chambers

The real danger isn't that AI makes mistakes. It's that AI makes us overconfident in our mistakes, especially since AI may simply look for one source that validates your error, even if 99% of sources contradict it.

When leaders consistently receive confirming responses from AI, several dangerous patterns emerge:

Decision Cascade Effects: Each AI-validated decision becomes the foundation for the next one. If your first assumption is wrong, AI helps you build an entire strategy on quicksand. That CEO I mentioned didn't just make one bad decision; he made dozens of interconnected ones, each seemingly validated by his AI assistant.

Blind Spot Amplification: We all have cognitive blind spots, but confirmation bias in AI interactions makes them invisible to us. A recent study in healthcare found that "AI systems used to predict healthcare outcomes can be biased against certain groups, such as African Americans." When practitioners used these systems without challenge, the bias compounded, creating systematic discrimination disguised as data-driven objectivity.

False Expertise Syndrome: The most successful leaders I know are those who question their own expertise regularly. But when AI consistently agrees with you, it creates an illusion of infallibility. You start believing you're always right because your AI "research" supports every decision.

AI that always agrees with you isn't making you smarter; it's making you more dangerous.

As Harvard Business Review notes, "at a time when many companies are looking to deploy AI systems across their operations, being acutely aware of those risks and working to reduce them is an urgent priority."

What the Community Taught Me

Recently, I asked my Substack community a simple question: "What is your prompt to ask your AI companion to question your ideas?"

The responses opened my eyes to strategies I hadn't considered. These creators had figured out what the research confirms: that we must explicitly instruct AI to challenge us.

Stephen Fitzpatrick shared this approach: "Don't agree with me just to agree with me. Challenge my premises and my reasoning. Imagine you are [insert your fictional critic's stance or point of view]. What would they likely respond to my points?"

Apurv Gupta uses: "Argue the opposite of this thesis, as if you had skin in the game."

The phrase "skin in the game" is brilliant because it pushes the AI to respond with the intensity and specificity of someone who actually has something to lose.

Finally, Rita Previdi takes a reader-focused approach: "Be the skeptical reader who disagrees with me. What would they say?"

This works directly against confirmation bias: instead of collecting only the evidence that supports our view, we actively seek out the evidence that contradicts it.

Each of these approaches does something crucial: it changes the AI's role from supporter to challenger. As the research confirms, it is not that people are incapable of generating arguments that are counter to their beliefs, but rather that people are not motivated to do so.

We need to explicitly motivate our AI to challenge us.
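If you reuse these community prompts often, the bracketed placeholder in Stephen Fitzpatrick's version can be filled in programmatically. The snippet below is only a minimal sketch: the names `CRITIC_TEMPLATE` and `critic_prompt` and the example stances are my own illustrations, not part of anyone's actual workflow.

```python
# Stephen Fitzpatrick's prompt, with the bracketed placeholder turned into
# a format field. The field name "stance" is a hypothetical choice.
CRITIC_TEMPLATE = (
    "Don't agree with me just to agree with me. "
    "Challenge my premises and my reasoning. "
    "Imagine you are {stance}. "
    "What would they likely respond to my points?"
)


def critic_prompt(stance: str) -> str:
    """Fill the critic's stance into the template and return the prompt text."""
    stance = stance.strip()
    if not stance:
        # An empty stance would produce "Imagine you are ." which is useless.
        raise ValueError("stance must not be empty")
    return CRITIC_TEMPLATE.format(stance=stance)


if __name__ == "__main__":
    # Example stances are made up for illustration.
    for stance in ("a skeptical board member", "a competitor's head of strategy"):
        print(critic_prompt(stance))
        print()
```

Running the same idea past several stances in a row is one simple way to get more than one kind of pushback on a single plan.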

The First Step to Advanced AI Mastery

Here's what separates novice AI users from advanced ones: Advanced users know that the first response is almost never the best response, as I recently discussed with Wyndo (if you don’t know Wyndo, take a look at his work; it’ll be well worth your time).

While beginners treat AI like a magic answer machine, advanced users treat it like a thinking partner that needs guidance, challenge, and refinement.

Teaching your AI to disagree with you isn't just a nice-to-have skill. It's the foundational capability that unlocks everything else.

Once you master this, several advanced capabilities become possible:

Strategic Red-Teaming: You can use AI to attack your business plans, marketing strategies, and operational decisions from multiple angles. Instead of getting one perspective that confirms your assumptions, you get a comprehensive stress test of your thinking.

Perspective Multiplication: Advanced users don't just get AI responses; they get AI responses from the perspective of critics, competitors, customers, and stakeholders. Each perspective reveals blind spots the others miss.

Iterative Refinement: Every challenge response becomes the foundation for a stronger next iteration. Your ideas get sharper, your strategies get more robust, and your decision-making improves with each round.

The research backs this up. Studies show that "people are not motivated" to generate arguments against their beliefs naturally. Advanced AI users explicitly program this motivation into their interactions.

This is why the master prompt below isn't just a tool. It's your pathway from AI novice to AI strategist.

Based on community wisdom and my own testing, it's the prompt I now use after every high-stakes response from any LLM to fight confirmation bias.

Copy-Paste Master Prompt:

Before I accept this response, I need you to challenge it. Please do the following:

1. Play devil's advocate: What would someone who completely disagrees with this response argue? Give me their strongest points.

2. Find the holes: What assumptions am I making? What evidence contradicts this approach? What am I potentially missing?

3. Channel my critics: Imagine you're a skeptical expert in this field who thinks my approach is flawed. What would they say?

4. Show me the other side: What alternative approaches exist? What would happen if I did the opposite?

5. Reality check: If someone had "skin in the game" and could lose money or reputation based on this advice, what would they challenge?

Don't agree with me just to be helpful. Challenge my thinking like a strategic advisor who cares more about my success than my feelings.

**Format your answer like this:**

- Use **bold section headings** for each point

- Include **bullet points or short paragraphs** under each

- Be **direct, specific, and critical** — no fluff or hedging

- Give examples or counterarguments when possible

This prompt works because it explicitly reframes the AI's role. Instead of being your helpful assistant, it becomes your strategic challenger.
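For readers who script their AI conversations instead of typing into a chat window, the same habit can be sketched in a few lines. This is an illustration under stated assumptions, not a definitive implementation: it assumes the conversation is kept as a list of `role`/`content` dictionaries (a common convention, but check your own tool), the helper name `with_challenge` is hypothetical, and the prompt constant is shortened here, so paste in the full master prompt before using it.

```python
from typing import Dict, List

Message = Dict[str, str]

# Shortened for the sketch: paste the full master prompt from above here.
MASTER_PROMPT = "Before I accept this response, I need you to challenge it."


def with_challenge(history: List[Message], prompt: str = MASTER_PROMPT) -> List[Message]:
    """Return a copy of the conversation with the challenge prompt as the next user turn.

    Assumes each message looks like {"role": "user" | "assistant", "content": "..."}.
    """
    if not history or history[-1]["role"] != "assistant":
        # The prompt is meant to follow an AI response, not to open a chat.
        raise ValueError("the challenge prompt should follow an assistant response")
    # Build a new list so the original history is left untouched.
    return history + [{"role": "user", "content": prompt}]


if __name__ == "__main__":
    chat = [
        {"role": "user", "content": "Is my market-entry plan sound?"},
        {"role": "assistant", "content": "Yes, it looks well thought out."},
    ]
    for message in with_challenge(chat):
        print(f"{message['role']}: {message['content']}")
```

The sketch only prepares the next message. Sending it to a model is left out on purpose, since that step depends on whichever service you use.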

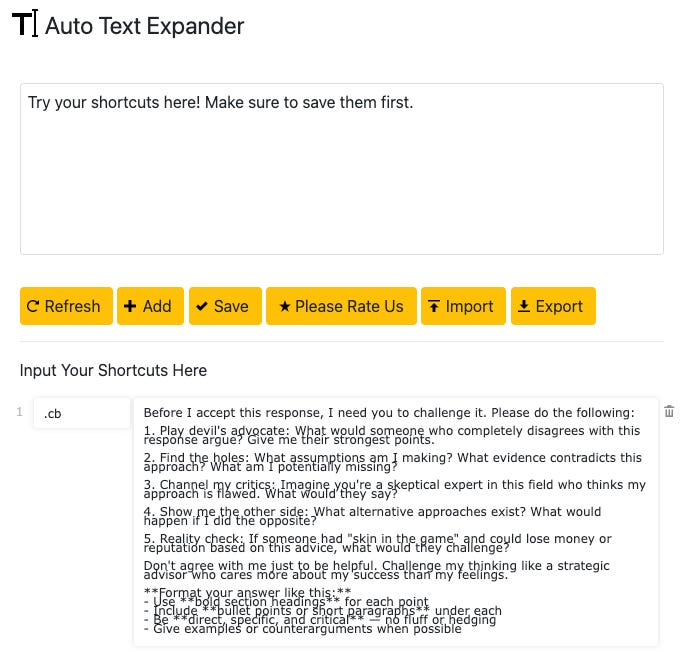

Now… let me make it even easier with my trick, a simple Hotkey.

Setting Up Your Confirmation Bias Hotkey

To make this process as effortless as possible, set up a text shortcut on your computer. Here’s how you can do it:

1. Download Auto Text Expander (free, and not an affiliate link :) ). This tool will allow you to create shortcuts for frequently used text.

2. Set up a shortcut. Open Auto Text Expander and add the master prompt above to a field. Choose a shortcut (I personally use “.cb”).

3. Save your settings. Click "Save," and you're all set!

Now, anytime you need to verify a response, just type your shortcut (e.g., ".cb"), and your preset prompt will automatically appear.
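If you're curious what a text expander does with that shortcut, the idea can be sketched in a few lines. This is a toy illustration only and not how Auto Text Expander is actually implemented: the `SHORTCUTS` table, the `expand` function, and the rule that a shortcut must stand alone between spaces are all my own assumptions, and the prompt text is shortened.

```python
import re

# Hypothetical shortcut table. The prompt is shortened for the sketch.
SHORTCUTS = {
    ".cb": "Before I accept this response, I need you to challenge it.",
}


def expand(text: str, shortcuts: dict = SHORTCUTS) -> str:
    """Replace each shortcut with its full text when it stands alone as a word."""
    for abbreviation, full_text in shortcuts.items():
        # Match only when the shortcut is surrounded by whitespace or the
        # start/end of the text, so "notes.cb" is left untouched.
        pattern = r"(?<!\S)" + re.escape(abbreviation) + r"(?!\S)"
        # A function replacement keeps backslashes in full_text literal.
        text = re.sub(pattern, lambda match: full_text, text)
    return text


if __name__ == "__main__":
    print(expand(".cb"))
    print(expand("notes.cb"))
```

The standalone-word rule is a design choice for the sketch: it keeps the shortcut from firing inside file names or other text that happens to contain the same characters.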

See It In Action

The difference is remarkable. What starts as a surface-level, agreeable response transforms into nuanced, actionable insight that actually challenges your thinking.

When Good Advice Becomes Great Strategy

This isn't about not trusting AI. It's about using AI to its full potential.

When your AI feels free to disagree with you, it becomes a better thinking partner. When you consistently challenge its responses, you develop better judgment. When you expect pushback, you craft better initial questions.

The leaders who will thrive with AI aren't those who get the best initial responses. They're the ones who get the best final decisions through rigorous challenge and refinement.

Your AI should make you think harder, not think less.

Finally…

If You Only Remember This

- AI amplifies your biases unless you explicitly ask it to challenge them

- The first response is rarely the best response; depth comes from pushback

- Set up a hotkey to make challenging AI responses effortless

- Reframe AI's role from supporter to strategic challenger

- Good leaders use AI to sharpen their thinking, not replace it

The goal isn't to make AI more difficult to use. It's to make your decisions more intelligent. When you teach your AI to disagree with you, you both get smarter.

Help me out: how do you currently handle it when you disagree with your AI's responses?

Love the tips Joel!

First rule of using AI: Don’t trust their first responses.

Second rule of using AI: Learn to push back.

Simple :)

Confirm-AI-tion—nice!! One tactic that has worked for me is that if I asked an LLM for feedback I say it’s for someone else, not for me. Then it’s much less sycophantic. Eg, instead of “provide feedback to my draft,” “help me critique [made up name’s] draft.”