Don't Outsource Your Thinking (Even to AI)... But Do This Instead

Why Leaders Must Fact-Check AI Sources Before Critical Decisions

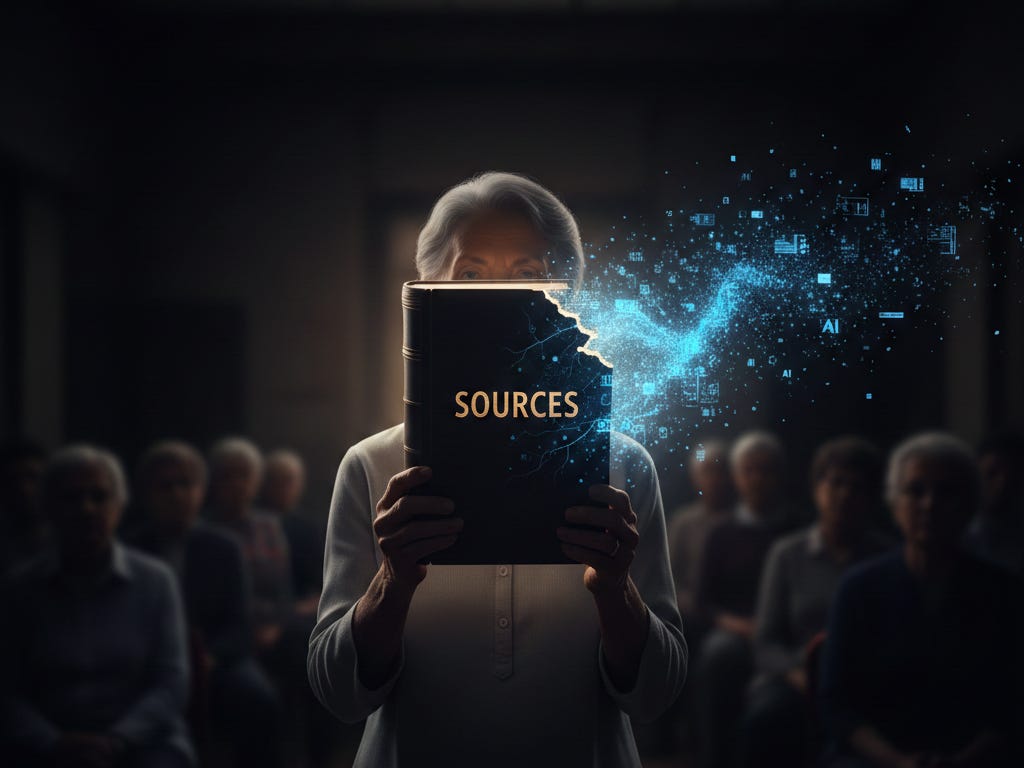

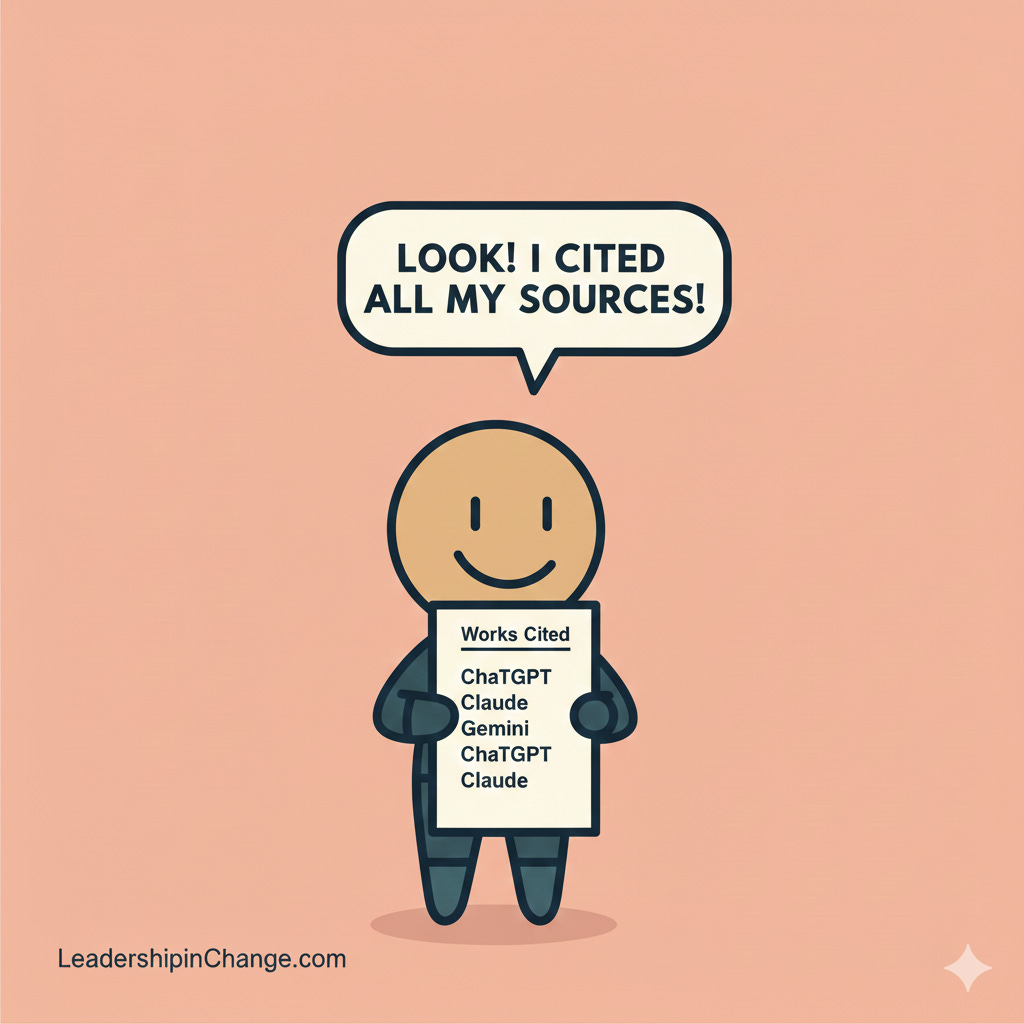

In May 2025, the Chicago Sun-Times published a reading list with 15 book recommendations. Ten of those books didn’t exist; they were AI hallucinations the writer never fact-checked.

As I was writing this article, the same thing almost happened to me.

I asked Claude to help me find two additional statistics for this intro. It confidently provided two compelling data points: a Boston Consulting Group study showing “64% of knowledge workers use AI regularly, but only 12% can identify incorrect information,” and MIT research revealing “a 37% decline in error detection ability after six months of AI use.”

Both statistics were completely fabricated.

The AI made them up. They sounded credible. They supported the narrative perfectly. And if I hadn’t asked Claude to verify them, those fake statistics would be in this article right now.

This is what the research actually shows:

A Harvard-BCG study of 758 consultants found that when professionals used AI for tasks outside its capabilities, they were 19 percentage points less likely to produce correct solutions than those working without AI.

The real danger isn’t that AI makes mistakes. It’s that we’ve stopped checking.

Today’s guest author, Rodney Daut, has spent 12 years building the discipline to catch these mistakes. As founder of Course Builder’s Corner (1,000+ subscribers) and creator of the “Atomic Courses” framework, Rodney reduced his course creation time from 4 months to 3 weeks by learning exactly which skills to delegate to AI and which to guard fiercely. A USC graduate who’s helped hundreds of experts monetize their knowledge, Rodney discovered that the leaders who thrive with AI aren’t the ones who use it most; they’re the ones who know when to verify, when to challenge, and when to trust their own judgment.

In this article, Rodney shares:

The three skill categories every leader must evaluate before delegating to AI

Why the “human-first approach” prevents AI from replacing your strategic thinking

How to spot the warning signs you’ve crossed from AI assistance into AI dependence

The framework for deciding what to delegate, what to learn, and what to protect

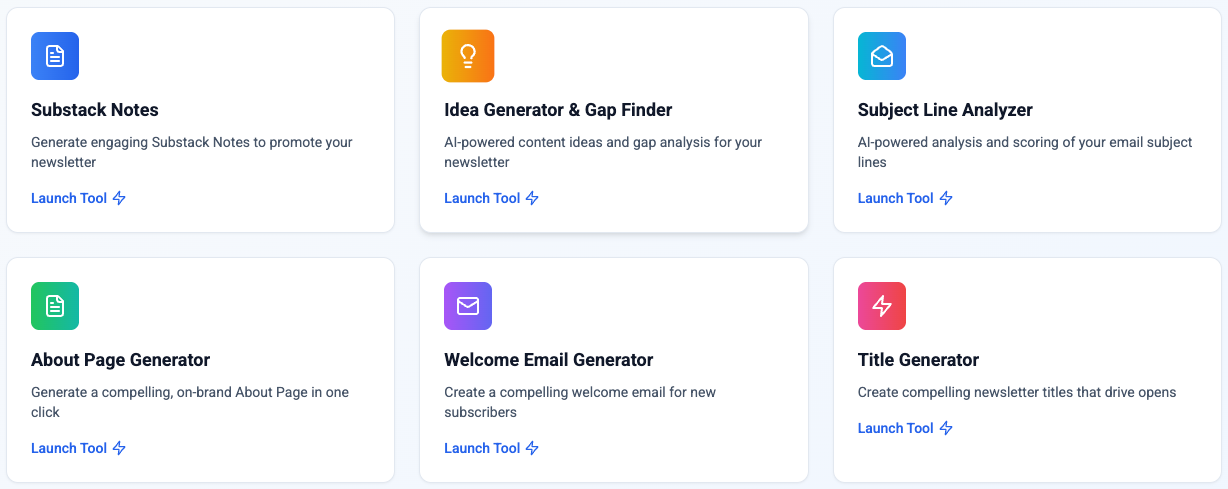


Here is Rodney Daut…

Why You Can’t Outsource Your Thinking (Even to AI)

In May 2025, the Chicago Sun-Times published a summer reading list in their “Heat Index” supplement. The article featured 15 book recommendations—carefully curated titles from acclaimed authors like Isabel Allende, Andy Weir, and Min Jin Lee.

There was just one problem.

Ten of those books didn’t exist.

Tidewater Dreams by Isabel Allende? Nonexistent.

The Last Algorithm by Andy Weir? Made up.

Nightshade Market by Min Jin Lee? Complete fiction.

The writer had delegated the entire job to AI and trusted it completely. He didn’t fact-check. He didn’t verify. He just published.

This isn’t a cautionary tale about a lazy writer. It’s a wake-up call about what happens when we stop doing the thinking ourselves.

The question nobody wants to answer

Here’s what I keep hearing from clients, colleagues, and people in my network:

“Why should I learn copywriting when AI writes better copy than I ever could as a beginner?”

“Why should I learn data analysis when AI can analyze faster and more thoroughly?”

“Why bother learning [insert skill here] when AI is already better at it than I’ll be for years?”

It’s a fair question. And the answer isn’t as simple as “because you should” or “because AI will replace you if you don’t.”

The real answer is more nuanced—and more important.

What we’re really risking

When that writer trusted AI to create his reading list, he wasn’t just risking embarrassment. He was revealing something deeper: he’d stopped doing his due diligence.

He could have fact-checked. He should have fact-checked. But he trusted AI completely and skipped that step.

This is what happens when we treat AI like an infallible expert instead of a tool that needs oversight. We stop questioning. We stop verifying. We hand over responsibility for our work to a machine that can’t be held accountable.

And here’s the thing—this applies whether you’re delegating to AI or to a human being.

Let’s say you hire someone to write sales copy for you. If that copywriter does everything from research to strategy to execution, and you don’t take notes on what they’re doing, then you don’t know what information to give AI when you want to use it later. You don’t know the frameworks they were using to guide their decisions. You can’t evaluate whether the work is good or not.

I learned this the hard way recently. I use AI for copywriting—my clients know this and are impressed with the results. But I use custom GPTs loaded with examples from master copywriters and proven frameworks from the last 100 years. ChatGPT out of the box would never produce the same quality.

And even with those custom tools, I still catch things. I’ll review a piece the next day and spot subtle flaws—language patterns that aren’t quite right, claims that are overstated, energy that’s off in certain places. These are things I would have missed if I hadn’t spent years learning the craft myself.

The trap of “AI can do it better”

I recently worked with someone who wanted to use AI to create book covers. They figured, “Why pay a designer when AI can do it?”

Here’s what I told them: I used to make my own book covers. Then I decided to hire people on Fiverr who are really good at design and say, “Can you do this for me?” They do an excellent job.

Later, I tried using AI for covers. It was never quite right. I’d rather spend the money to get the job done well by a human I can talk to and say, “This is why it doesn’t feel right.” They have a track record. They understand nuance.

With AI? It’s hit or miss. And it’s time-consuming. And it’s not time well spent for me.

Here’s what I see happening: people are taking on jobs they wouldn’t have taken on before because “AI can do it.” They’re becoming jacks-of-all-trades, except they’re missing one crucial trade—strategy.

It’s okay to be a jack-of-all-trades as long as one of your trades is figuring out the big picture. Determining objectives. Creating plans. Knowing where to deploy your time for maximum impact.

But here’s the problem: if you’re not careful, AI can end up replacing your thinking entirely.

The difference between a tool and a crutch

Think about Google for a second. When you can’t remember who starred in Saving Private Ryan, you Google it. Easy. Done.

We all got comfortable treating Google as an answer machine for factual questions. Then we started using it for opinion-based questions too: “Which is better for note-taking: Evernote or Obsidian?” You’d get a range of human opinions. Multiple perspectives.

But when you ask AI the same type of question, it may give you a single answer instead of multiple perspectives, depending on how you ask. And unless you specifically ask for pros and cons, or multiple viewpoints, you might not realize you’re only getting one angle.

Even worse, AI will tell you things that sound like what you want to hear. It might hallucinate answers. And unless you know enough to spot the hallucination, you’ll accept it.

Here’s an example: if you had a botanist with a PhD in front of you, and you asked them about how plants move water and nutrients through their structures, you’d trust their answer. You wouldn’t need to fact-check a PhD talking about their area of expertise.

But if you ask AI the same question, you need to fact-check it. Because AI will tell you everything with equal certainty—whether it’s right or completely made up.

A good professional will tell you what’s certain and what’s not. AI won’t, unless you train it to do so.

So what should you actually learn?

This is where it gets tricky. Because I’m NOT saying you need to learn everything deeply. That’s impossible.

Instead, I think about three categories of skills:

1. Core Skills: The things you absolutely must learn deeply

These are skills central to your work. If you’re a doctor, you diagnose patients yourself—you don’t hand that off to AI. If you’re a coach, you do the coaching. If you’re a writer, you write.

Yes, you can use AI to support these skills. But you can’t let AI replace them, or your abilities will atrophy.

I know someone who’s a bodybuilder—not professional, just dedicated. There was a period when he stopped training for a few months after his first kid was born. Our whole family noticed he’d gotten smaller. Fortunately, he got back to it and bounced back quickly.

But the same thing happens with your mind. Ask anyone who was fluent in a foreign language 20 years ago but hasn’t spoken it since. They struggle to find the words. The memories fade.

If you stop using your core skills, they fade too.

2. Instrumental Skills: Learn enough to direct and judge

These are skills that support your work but aren’t central to it. Maybe you’re a coach who needs to write sales pages. Or a consultant who needs to analyze customer data.

For these skills, you need to learn enough to:

Know the language and frameworks of the field

Direct AI effectively

Spot when AI goes wrong

Recognize overuse of patterns or techniques

I’ll give you an example. When I use my custom GPT for copywriting, I sometimes see it overuse “if X, then Y” constructions. Or make claims that sound too big, too vague. I have to tell it to soften claims to match reality, to match what testimonials actually say.

I can only do that because I spent 20 years learning copywriting. If you’re just starting out, I’d say: read one really good book on copywriting. Study it thoroughly. Let AI quiz you on it. Practice a little. See how hard it is.

Then use AI to help you—but never let it do all the thinking.

3. Joy Skills: Keep these for yourself

There are some things you just love doing. The process itself matters, not just the output.

If you’re a poet, would you really want AI writing your poetry? Even if AI learned your style perfectly? Probably not. Because you’re proud of that work. You love the process of refining each word, removing excess, finding exactly the right phrase.

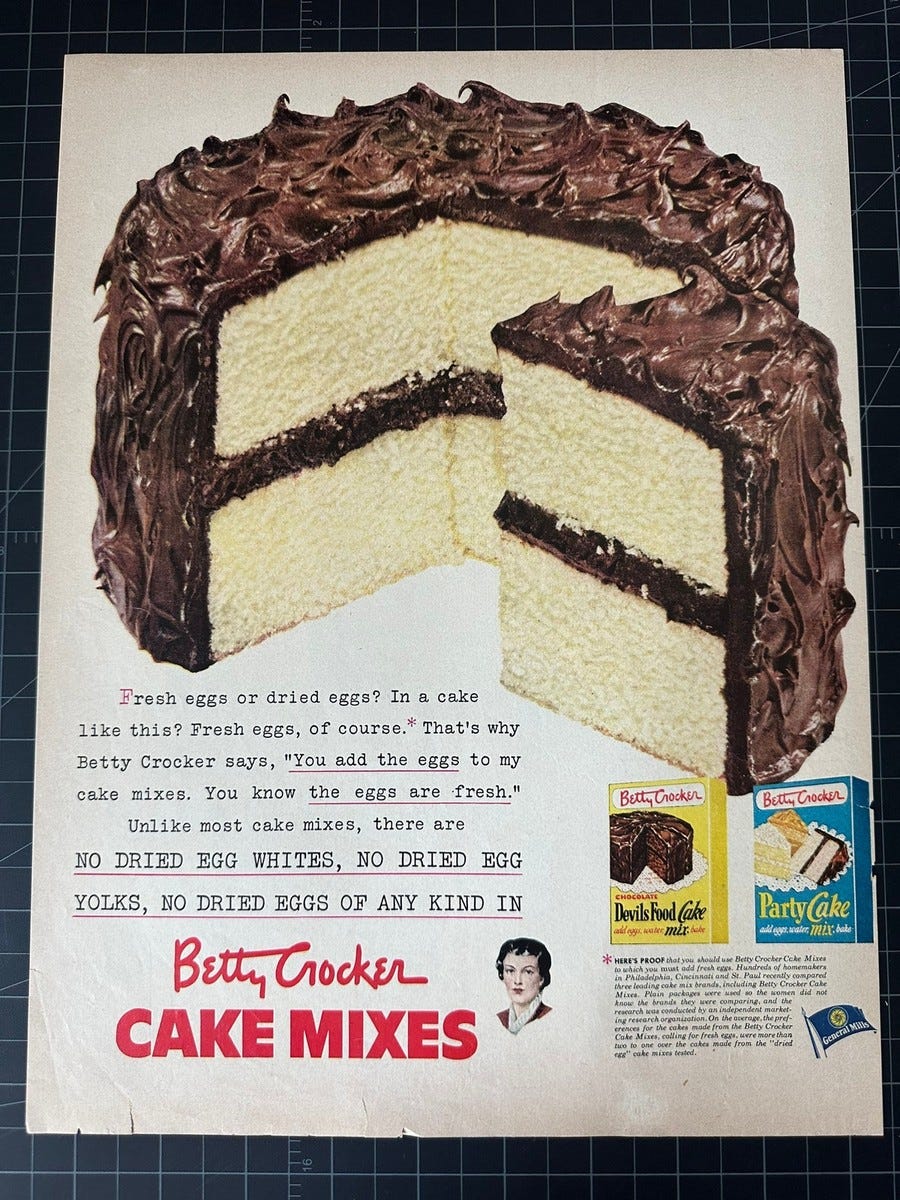

There’s a popular story about Betty Crocker cake mix. As it’s usually told, when the mix first came out, all you had to do was add water. But sales were disappointing. A cake is a gift, and people felt they hadn’t really made it.

So, the story goes, Betty Crocker changed the formula. Now you had to add eggs and milk yourself. Sales went up. People felt like they’d contributed something. They felt proud.

Don’t let AI rob you of your pride.

The human-first approach

Here’s what I recommend: always think with your human brain first. Wetware before software.

When a client says, “I need to brainstorm titles for my book,” I don’t immediately open ChatGPT. We brainstorm ideas first. We talk about what we like or dislike about each idea. We write it all down.

THEN we give all of that to AI—our ideas, what we liked best and why, what we’re still looking for. The AI gives us much more targeted answers because we did the human thinking first.

We might also ask it: “Give us something completely different than anything we’ve asked for.” Because if we brought in a second expert, we’d want their fresh perspective too.

This is the creative partnership. You think first. AI critiques your thinking. AI offers alternatives. You compare and evaluate.

Never let AI do all the thinking for you.

One more thing: if you’re going to have AI do analysis—whether it’s data, customer feedback, whatever—you need to analyze some of that data yourself first. Get a feel for what’s in there. Then, when AI tells you something that feels off, you’ll notice. You’ll be skeptical enough to ask for evidence.

This is especially important for surprising conclusions. Or for conclusions that support what you already believe. Both can lull you into trusting AI without questioning it.

What I’m seeing work

There’s a teacher on LinkedIn named Paul Matthews who teaches AI to educators. One of the things he does is use AI for tasks teachers wish they had time to do—like differentiating reading levels.

Teachers know students learn better when reading is at the right level for them. But creating multiple versions of the same text at different reading levels? That takes forever. AI can do it in seconds.

AI can also create cloze activities (where key words are removed and students fill them in). It can generate multiple-choice quizzes. All the tedious stuff that used to eat up hours.

So what does Paul do with the time he saves? He talks to parents. He has one-on-one conversations with students. He does the interpersonal work that only humans can do—the work that matters most.

That’s the beautiful paradox of AI:

Use it to do what AI can do, so you can do what only humans can do.

What this means for you

AI isn’t going away. It’s going to keep getting better. And yes, there might come a day when the costs change, when unlimited access isn’t so cheap anymore. If you become completely dependent on AI and can’t do your work without it, you’re vulnerable.

But the bigger risk isn’t cost. It’s losing your ability to think, judge, and create independently.

So here’s what I’m suggesting you do:

Decide on one way you’re going to experiment with using AI more intentionally. Maybe it’s adopting the human-first approach—doing your thinking first, then using AI to critique and expand on it. Maybe it’s finding one tedious task AI can handle so you have more time for the human work that matters.

And decide on one way you’re going to limit AI use. One area where you’re going to say, “You know what? I’m not going to use AI for this particular type of thing.” Maybe it’s your core skill. Maybe it’s something you love doing. Maybe it’s something where judgment matters more than speed.

The goal isn’t to use AI for everything or nothing. The goal is to use it wisely—so you have more time, more energy, and more capacity for the work only you can do.

Thanks, Rodney Daut!

If You Only Remember This:

Your core skills require direct practice, not just AI assistance.

Learn instrumental skills deeply enough to direct and judge AI’s work.

Always think with your human brain first. Wetware before software.

“Use AI to do what AI can do, so you can do what only humans can do.”

The beautiful paradox: The better you get at core skills, the more effectively you can use AI.


A key takeaway here is the risk of overreliance on AI. The more we depend on it without questioning or verifying, the more we slowly distance ourselves from our own critical thinking.

One practice I follow is avoiding prompt templates. Instead, I begin by adding my own thoughts, context, and perspective. That makes it easier to critically evaluate both the question I’m asking and the AI's response.

As a freelance writer with decades of experience, I definitely don’t need AI to write for me, but I do love the brainstorming capacity. However, as you note, AI without truly solid prompting can really go off the rails. It also seems to get “stuck” in a response and won’t move off it. It’s like a good office assistant who occasionally orders 15 pizzas because they forgot they already ordered 15 a few minutes earlier.