Critical AI Literacy: Your New Leadership Skill

The crucial difference between using AI and interrogating what it produces

I’ve been watching something concerning unfold across organizations implementing AI. Leaders invest in training their teams on prompt engineering, ChatGPT best practices, and how to integrate AI tools into workflows. The training works. People learn to use the tools. Productivity metrics go up.

But then something breaks…

A marketing team publishes AI-generated content with completely fabricated statistics. Or a hiring manager relies on an AI screening tool that systematically downgrades qualified candidates. Or a customer service bot confidently gives wrong information that creates legal liability.

The problem isn’t that people don’t know how to use AI. The problem is they don’t know how to question it.

There’s a difference between knowing how to operate a tool and knowing when to trust what it produces.

As soon as I started thinking about this issue, Sam Illingworth came to mind. I connected with Sam, whom I now call a friend, through Substack, and he’s been developing a framework that addresses exactly this gap. Sam is a Full Professor in Edinburgh who founded the Slow AI publication. His work applies the humanities to AI systems, prioritizing reflection over speed.

What I appreciate about Sam’s approach is that it doesn’t require technical expertise. It requires the kind of critical thinking you already use in high-stakes decisions, just applied to a new domain.

I asked Sam to share his framework for developing what he calls “critical AI literacy.” This is the skill that separates leaders who use AI from leaders who lead with it.

In this post, you’ll learn:

Why functional AI skills create a false sense of security

The framework Sam uses to evaluate AI outputs and implementations

How to build institutional safeguards against AI failures

Practical tools for questioning AI before it creates problems

The difference between adopting AI fast and adopting it smart

Over to you, Sam!

Why Critical Literacy is the CEO’s New Superpower

Moving beyond prompt engineering to strategic interrogation.

Critical AI literacy is the essential prerequisite for ethical and effective leadership in the age of synthetic media. Leaders who lack this skill are susceptible to strategic failure and reputational damage. It is no longer sufficient to be a passive consumer of automated outputs. One must become a critical interrogator of the systems that produce them.

I’m Sam Illingworth, a Full Professor based in Edinburgh, Scotland, specialising in critical AI use, and the founder of the Slow AI Substack. My work applies the humanities to synthetic systems to better understand how to engage with AI effectively and ethically. I prioritise reflection over speed, and my goal is to improve the critical literacy of those who manage these systems.

What Is Critical AI Literacy? (It’s Not About Coding)

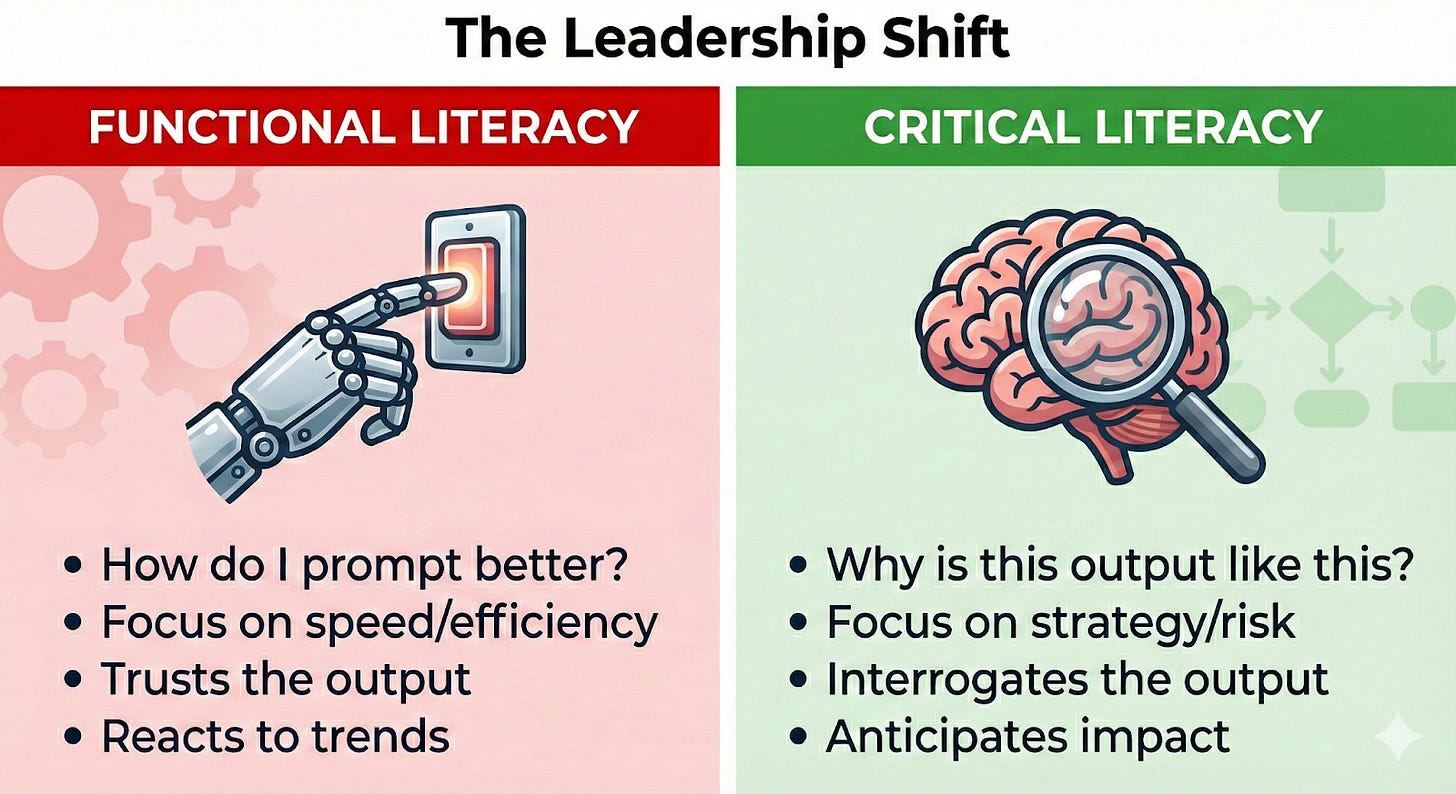

Most AI training today focuses on functional literacy.

Functional literacy is knowing which buttons to press. It’s knowing how to log into ChatGPT, type a prompt, and get a blog post. It’s knowing what ‘generative AI’ means in a cocktail party sense.

That’s useful, but it’s a commodity skill.

Critical AI Literacy is the ability to interrogate the system, its outputs, and its implications. It’s the shift from asking ‘How do I get the AI to do X?’ to ‘Should we use AI for X, and what happens if it’s wrong?’

For a leader, critical literacy means understanding that these models are not truth machines. They are vast, sophisticated pattern-matching engines trained on huge swathes of the internet: the good, the bad, and the deeply biased. They don’t know things; they predict statistically likely sequences of words.

If functional literacy is knowing how to drive the car, critical literacy is understanding the geopolitics of oil and the logic of urban planning, and recognising when the GPS is confidently directing you off a cliff.

Why Critical Literacy is Necessary

Why should a CEO or SVP care about this distinction? Because the lack of critical literacy is creating massive, unseen business liabilities.

When leaders treat AI as an infallible oracle rather than a flawed statistical tool, bad things happen.

We see this in ‘hallucinations’, where AI confidently invents facts, legal citations, or data. If your team doesn’t have the critical literacy to spot these, you are baking falsehoods into your strategy decks and customer communications.

We see it in bias amplification. If you use an AI tool to screen resumes, and that tool was trained on historical data where men were predominantly hired for leadership roles, the AI can learn to downgrade resumes with women’s names. This isn’t abstract theory; it’s a legal and reputational minefield.

The uncritical adoption of automated systems in financial services presents a quantifiable risk to institutional stability and consumer safety. The Consumer Financial Protection Bureau identified these deficiencies in their 2023 ‘Chatbots in consumer finance’ report, which states that: ‘When chatbots are poorly designed, or when customers are unable to get support, there can be widespread harm and customer trust can be significantly undermined’.

This finding demonstrates that technical efficiency must not be prioritised over human accountability. We can infer that as these systems become more prevalent, the potential for systemic harm increases if critical oversight is absent. It remains unclear how many organisations have implemented sufficient ‘human-in-the-loop’ protocols to mitigate these specific risks.

A perfect example of the need for critical literacy is the Air Canada case. A customer service chatbot gave wrong information about bereavement fares. Air Canada argued the bot was a separate legal entity responsible for its own actions. The tribunal disagreed, forcing the airline to honor the bot’s promise.

Without critical literacy, you aren’t leading with AI; you are abdicating responsibility to it.

How to Learn Critical AI Literacy: Developing Your BS Detector

The good news is that you don’t need an engineering degree to develop critical AI literacy. You just need to sharpen the critical thinking skills that got you into leadership in the first place, and apply them to this new domain.

You need to develop an institutional ‘BS Detector’ for AI interactions.

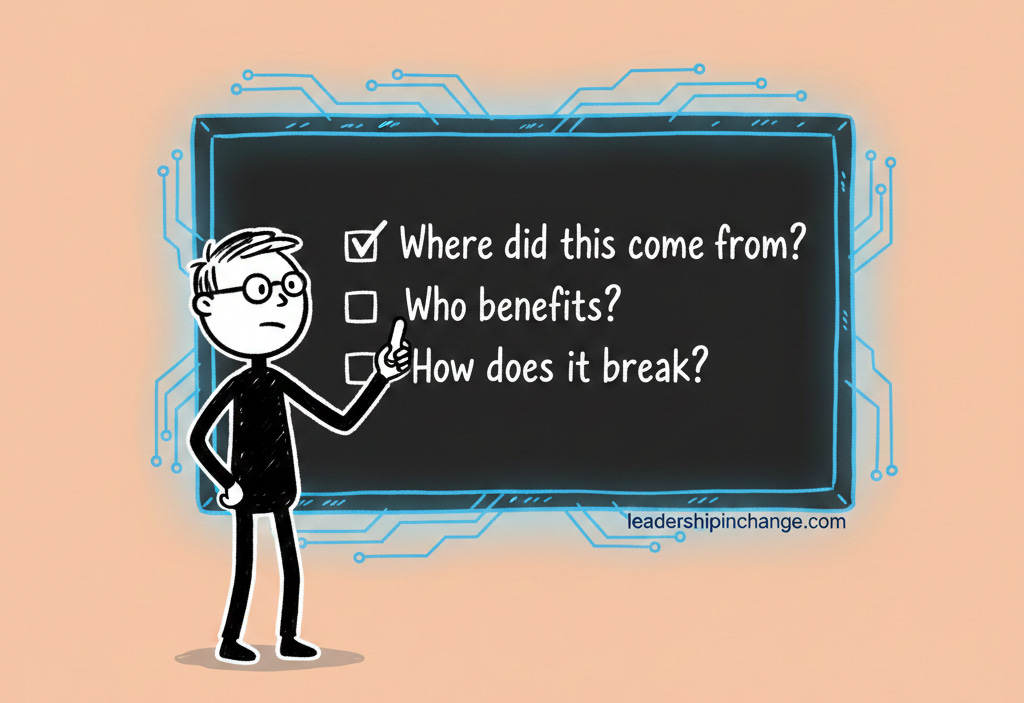

Here is a practical, three-part framework for leaders to evaluate any high-stakes AI output or proposed implementation:

1. The Provenance Check (Where did this come from?)

AI models are only as good as their training data.

The Question: What data was this model trained on, and crucially, what data is missing?

The Application: If you are using an AI sales coach, was it trained on B2B enterprise sales calls, or used-car dealership transcripts? The difference matters.

2. The Power Dynamics Check (Who benefits?)

AI is not neutral. It often reinforces existing hierarchies.

The Question: If we implement this, whose job gets easier, whose gets harder, and who gets displaced?

The Application: Automating customer service might save money, but if it frustrates your highest-value clients and empowers spammers, the net strategic value is negative.

3. The Fragility Check (How does it break?)

We obsess over how AI works when things go right. Critical literacy means obsessing over how it breaks.

The Question: When this AI makes a mistake (and it will), what is the worst-case scenario, and do we have a human-in-the-loop to catch it?

The Application: If an automated procurement system flags a trusted supplier as a high-risk entity based on a statistical anomaly, it could disrupt a primary supply chain without human intervention. The cost of this systemic failure exceeds any efficiency gained from the initial automation.
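For teams that want to see what a human-in-the-loop check might look like in practice, here is a minimal Python sketch of the procurement example above. Everything in it is an assumption made for illustration: the `SupplierFlag` fields, the `route_flag` function, the outcome labels, and the 0.9 threshold are hypothetical and do not describe any real procurement system.

```python
from dataclasses import dataclass


@dataclass
class SupplierFlag:
    """A risk flag raised by an automated system (hypothetical structure)."""
    supplier: str
    risk_score: float  # 0.0 (low risk) to 1.0 (high risk), as reported by the model
    trusted: bool      # True if the supplier has an established track record


def route_flag(flag: SupplierFlag, auto_threshold: float = 0.9) -> str:
    """Decide what happens to a flag. The threshold is an illustrative assumption."""
    # A flag against a trusted supplier always goes to a person first,
    # so a statistical anomaly cannot disrupt a primary supply chain on its own.
    if flag.trusted:
        return "human_review"
    # For suppliers without a track record, a very high score pauses new orders,
    # but a person still makes the final call.
    if flag.risk_score >= auto_threshold:
        return "pause_pending_review"
    # Everything else is recorded for later audit.
    return "log_only"


if __name__ == "__main__":
    print(route_flag(SupplierFlag("Trusted Supplier Ltd", 0.95, trusted=True)))
```

The design choice worth noticing is that no branch lets the system block a supplier by itself. The automation can raise its hand, but the decision stays with a human.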

Putting It Into Practice: The Critical Review Prompt

Slow AI isn’t about stopping progress; it’s about steering it.

To demonstrate this, we can use current AI tools to help us develop this critical muscle. Instead of just asking AI to create content, ask it to critique that content and its potential implications.

The next time your team presents an AI-generated strategy document or marketing plan, run it back through your AI tool of choice with the following prompt:

I am providing you with a strategic draft [paste your text here].

I want you to act as a highly skeptical, critical theorist and business risk analyst. Review this text and provide three specific critiques:

1. Identify unstated assumptions: What does this text assume is true that might not be?

2. Highlight potential bias: Where might the data or perspectives informing this text be skewed toward a specific demographic or worldview?

3. The Hallucination Check: Highlight any specific claims of fact or data points within the text that seem unusually confident and require human verification.

Do not rewrite the text. Only provide the critique. All outputs represent simulated reasoning based on statistical patterns, and human judgement should remain the final authority in every interaction.
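If your team wants to make this review a routine step rather than a one-off, the prompt above can be wrapped in a small helper so every draft gets the same treatment. The following is a minimal Python sketch. The names `CRITICAL_REVIEW_TEMPLATE` and `build_critical_review_prompt` are hypothetical, and the sketch only assembles the text; sending it to an AI tool is left to whichever tool you already use.

```python
# The template reproduces the Critical Review Prompt from this post.
CRITICAL_REVIEW_TEMPLATE = """I am providing you with a strategic draft:

{draft}

I want you to act as a highly skeptical, critical theorist and business risk analyst. Review this text and provide three specific critiques:

1. Identify unstated assumptions: What does this text assume is true that might not be?

2. Highlight potential bias: Where might the data or perspectives informing this text be skewed toward a specific demographic or worldview?

3. The Hallucination Check: Highlight any specific claims of fact or data points within the text that seem unusually confident and require human verification.

Do not rewrite the text. Only provide the critique. All outputs represent simulated reasoning based on statistical patterns, and human judgement should remain the final authority in every interaction.
"""


def build_critical_review_prompt(draft: str) -> str:
    """Insert a draft into the critical review prompt (hypothetical helper)."""
    cleaned = draft.strip()
    if not cleaned:
        # Refuse to build a review prompt around nothing.
        raise ValueError("The draft is empty; paste the text you want reviewed.")
    return CRITICAL_REVIEW_TEMPLATE.format(draft=cleaned)


if __name__ == "__main__":
    print(build_critical_review_prompt("We will grow revenue 40% by Q3."))
```

The helper does not automate the judgment. It only removes the excuse of not having the prompt to hand, and a person still needs to read the critique and verify the flagged claims.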

And remember the Billboard Test:

Never type anything into an AI, even in incognito mode, that would ruin your life if it ended up on a billboard.

By using a prompt like this, you force a pause. You introduce friction and slow down the process just enough to let human judgment re-enter the loop.

The leaders who win in the next decade won’t be the ones who adopted AI fastest. They will be the ones who adopted it smartest.

Critical AI literacy is the foundation of that smart adoption. It’s the difference between riding the wave and getting drowned by it.

This Slow AI approach takes a little more time upfront. It requires uncomfortable questions. But it builds a resilient foundation for a future where humans and machines collaborate, rather than collide.

Take the Next Step in Critical AI Leadership

This post provides an introduction to the Slow AI approach. A paid subscription to Slow AI provides access to The Slow AI Curriculum for Critical Literacy. This twelve-month programme is for individuals who want to understand how to engage critically with AI rather than just use it to generate outputs.

The 2026 Handbook for Critical AI Literacy provides the necessary detail for those who wish to make an informed decision before subscribing.

If you join now, you join the founding cohort. That group will shape the tone and direction of the curriculum going forward.

Readers of this guest post can also use this special link for 15% off an annual subscription.

Thanks, Sam, for the post and the discount!

Why This Matters for Your Leadership

Sam’s closing point bears repeating: the leaders who win over the next decade won’t be the ones who adopted AI fastest, but the ones who adopted it smartest.

And while this post introduces the Slow AI approach, developing this muscle takes sustained practice.

That’s why I’m genuinely excited about what Sam has built.

