AI Ethics DeepDive: Impossible Ideal or Achievable Reality?

And a tool to help you get started: My AI Ethics Decision Tree...


Get a coffee, get comfortable, and join me in a deep dive on Ethical AI. After hearing so much about Ethical AI, I wanted to better understand and then present the intricacies of this phrase and what it means to us.

Picture this: You're watching a construction crew build a skyscraper. They're working at breakneck speed, adding floor after floor, racing to beat their competitors to market. The structure is impressive, sleek, powerful, reaching higher each day. But something's missing: there are no safety railings, no emergency exits, no fire suppression systems.

"We'll add those later," the foreman says, "once we see how people use the building."

This is AI development in 2025.

A few weeks ago, I posed a simple question to my audience: "What does ethical AI mean to you?" The responses revealed something striking but expected. They exposed the fault lines in our approach to AI ethics.

The word cloud from these responses tells the story better than I could: transparency and accountability dominate the conversation. But look closer and you'll see the tensions. Words like "hallucinations" and "deception" sit alongside "guardrails" and "safety." Some responses focused on "agency" and "human" oversight, while others pointed to "power" and the risk of "harm."

Most telling? Several respondents used words like "oxymoron" and questioned whether ethical AI is even possible. One person simply wrote "bad" next to AI ethics, while another shared a sophisticated framework around "relationship" and "empathy." The diversity of these responses isn't just interesting; it reveals the fundamental challenge we face.

But here's what struck me most: despite the variety of responses, nearly everyone shared an underlying anxiety. We sense we're building something powerful without adequate guardrails. We're deploying systems that will shape millions of lives, but we haven't agreed on who gets to decide what's right, what's safe, or what's wise.

This isn't a theoretical exercise anymore. Every day, leaders like you are making decisions about AI implementation that will ripple through your organizations and communities for years to come. The question isn't whether AI will transform how we work and lead, it's whether we'll shape that transformation intentionally or let it shape us by default.

That's why this conversation can't wait. This isn't about slowing down innovation; it's about building wisely while we still can. Because the guardrails we install today will determine whether AI becomes a tool for human flourishing or a force that undermines the very leadership principles we've spent our careers developing.

Here is what we’ll explore ahead:

I. The Ethics Conversation We Can't Postpone

II. The Power Problem: Who Decides What's Ethical?

III. The Delegation Trap: What We Must Never Hand Over

IV. Six Pillars of Ethical AI Implementation

V. The Implementation Gap: From Principles to Practice

VI. Navigating Trade-offs in Real Time

VII. Your Personal and Professional Compass

VIII. Building the Movement: Ethics as Competitive Advantage

IX. The Guardrails We Build Today

X. Reading Corner

Let’s begin!

I. The Ethics Conversation We Can't Postpone

The responses to my question weren't just opinions; they were symptoms of a deeper crisis. We're witnessing widespread anxiety about AI ethics, but this anxiety is revealing something critical: the gap between AI adoption and ethical frameworks isn't just growing, it's accelerating.

Consider the numbers. Global AI adoption has exploded, with 65% of organizations now using generative AI in at least one business function. Yet the World Economic Forum's 2024 research shows that only 16% of organizations are prepared for AI-enabled reinvention, and most lack mature governance frameworks for ethical AI deployment.

Meanwhile, public trust remains devastatingly low. Pew Research Center's 2024 survey found that only 25% of Americans believe AI will benefit them personally, while most want stronger oversight and regulation. This isn't just a public relations problem, it's a fundamental challenge to sustainable AI adoption.

The "move fast and break things" approach that defined early tech development has proven catastrophic when applied to systems that shape human lives. The Facebook-Cambridge Analytica scandal serves as a stark reminder: when we build powerful systems without adequate safeguards, the consequences ripple through democracy itself. Data from up to 87 million users was harvested, leading to congressional hearings, massive fines, and ongoing questions about social media's role in democratic processes.

Real-World Success Stories: Ethics as Implementation

PATAGONIA:

While the headlines focus on AI failures, leading organizations are proving that ethical AI isn't just possible, it's profitable. Patagonia's Values-First AI Integration demonstrates how mission-driven companies can maintain their principles while leveraging technology. After facing ethical supply chain failures that forced them to rebuild their entire oversight system, Patagonia now applies the same rigorous approach to AI adoption. Their ethical AI framework ensures that any technology aligns with their mission to "save our home planet," focusing on supply chain impact prediction and sustainable materials research rather than pure efficiency gains.

AXA:

Similarly, AXA's Transparent Claims Processing shows how explainable AI builds trust through clarity. AXA has implemented explainable AI techniques across its claims processing, allowing both customers and staff to understand how AI reaches its decisions. Their system visually demonstrates the reasoning behind damage assessments and fraud detection, building trust through transparency rather than hiding behind black-box algorithms. This approach hasn't just prevented ethical issues, it's improved customer satisfaction and operational efficiency.

The timeline comparison is sobering. While AI investment reached $91.9 billion globally in 2022, comprehensive ethical frameworks lag years behind. The EU AI Act, the world's most comprehensive AI regulation, only entered into force in August 2024, with most of its obligations phasing in over the following years, while China and the US pursue more fragmented approaches that prioritize competitive advantage over comprehensive protection.

We can't afford to repeat the social media playbook with AI. The stakes are higher, the scale is larger, and the potential for both benefit and harm is exponentially greater.

II. The Power Problem: Who Decides What's Ethical?

The fundamental challenge of AI ethics isn't technical; to me, it's political.

Who gets to decide what constitutes ethical AI use?

And what happens when those making the decisions aren't the ones living with the consequences?

Corporate boardrooms across Silicon Valley and beyond are grappling with AI ethics decisions that will affect millions of lives. Yet Harvard Law School's research on AI governance reveals a troubling pattern: AI ethics decisions often rest with a small group of executives, frequently lacking diverse stakeholder input or representation from affected communities.


This power concentration creates what researchers call the "impact-profit gap": those who benefit most from AI development (shareholders, executives, and tech workers) are rarely those most vulnerable to its risks.

When Amazon's hiring algorithm showed bias against women, or when facial recognition systems demonstrated higher error rates for people of color, the people making deployment decisions weren't the ones facing discrimination.

The international governance landscape reveals similar power dynamics. The EU AI Act sets comprehensive standards for fairness, transparency, and accountability. Meanwhile, the US approach remains fragmented across agencies and industries, while China prioritizes AI development for competitive advantage. This creates a global race where ethical standards become secondary to market dominance.

Perhaps most concerning is the rise of "ethics washing": companies adopting ethical frameworks for public relations while failing to implement meaningful oversight. MIT Technology Review's analysis of corporate AI ethics initiatives found that many companies establish ethics boards and principles but lack enforcement mechanisms or transparency in their application.


The antidote to concentrated power isn't paralysis, it's intentional stakeholder engagement. Organizations successfully implementing ethical AI create diverse oversight structures, include affected communities in decision-making processes, and build transparency into their governance systems.

III. The Delegation Trap: What We Must Never Hand Over

The most dangerous assumption in AI deployment isn't technical; it's psychological. We assume we'll maintain human agency while increasingly delegating critical decisions to algorithmic systems. Research in automation psychology reveals a troubling pattern:

The more we rely on AI recommendations, the less capable we become of independent judgment.

Studies on automation bias show that humans consistently over-trust AI recommendations, even when those recommendations are demonstrably wrong. In high-stakes domains like criminal justice, healthcare, and hiring, this over-reliance has led to systematic errors that disproportionately harm vulnerable populations.

The COMPAS criminal risk assessment tool provides a sobering case study. Despite being used in courts across America, ProPublica's investigation revealed that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be incorrectly flagged as high-risk. Yet judges continued to rely heavily on these scores, delegating moral and legal judgment to a system with embedded bias.

Healthcare presents even starker examples. When IBM's Watson for Oncology was deployed globally, it recommended inappropriate and sometimes dangerous treatments because it was trained on a limited dataset from a single hospital. Yet oncologists in multiple countries began deferring to its recommendations, eroding their own diagnostic skills.

From a theological perspective, this delegation represents an abdication of the responsibility for moral reasoning that defines human dignity. We are called to exercise discernment, wisdom, and compassion in decisions that affect human flourishing. When we delegate these capabilities to systems that lack consciousness, empathy, or moral understanding, we diminish not just our own humanity but our capacity to serve others effectively.

The solution isn't to avoid AI, it's to maintain what researchers call "meaningful human control." This means identifying decisions that must remain human, building systems that enhance rather than replace human judgment, and continuously developing our own capabilities alongside our AI tools.

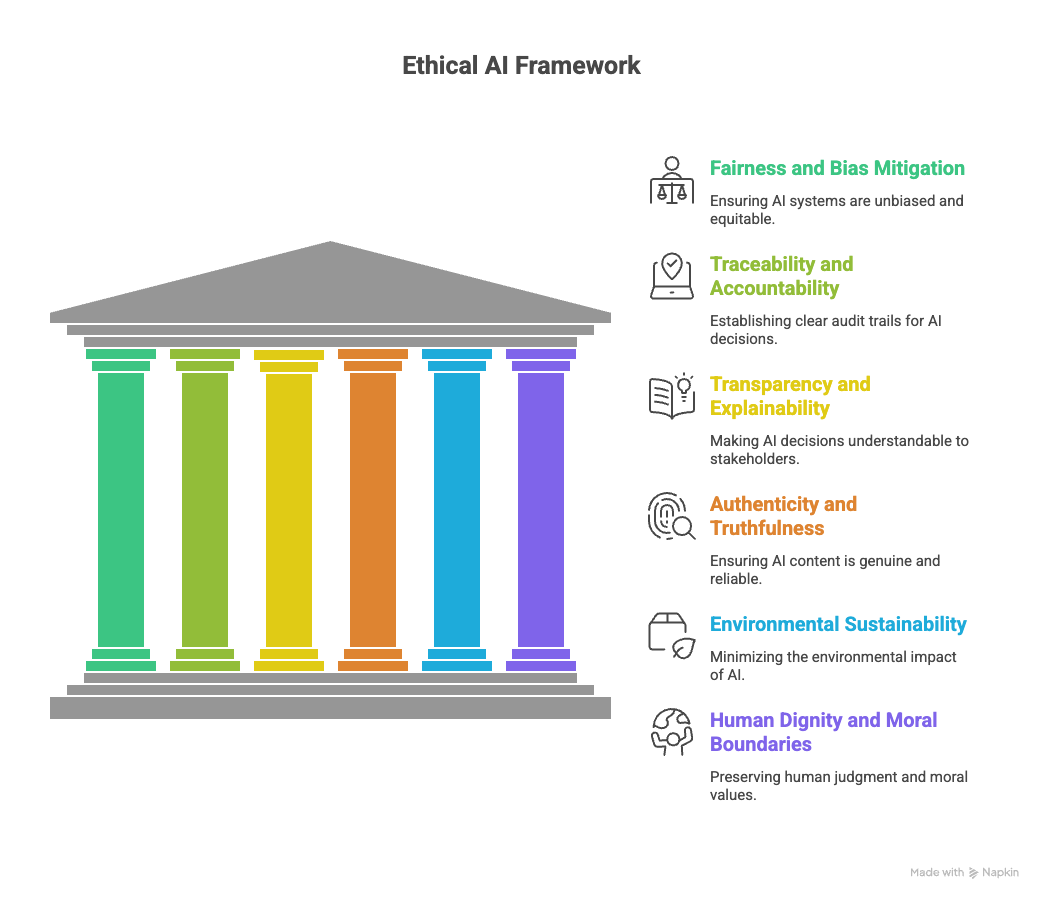

IV. Six Pillars of Ethical AI Implementation

After studying organizations that successfully navigate AI ethics, I've identified six practical pillars that transform abstract principles into measurable reality. These aren't theoretical ideals; they're working frameworks used by leaders who refuse to choose between innovation and integrity.

Pillar 1: Fairness and Bias Mitigation

Fairness in AI isn't about perfect algorithms; it's about systematic processes for detecting and correcting bias before it scales. The COMPAS criminal justice algorithm and Amazon's hiring tool failures teach us that bias isn't a bug to be fixed once, but an ongoing challenge requiring continuous vigilance.

Key Implementation: Before deploying any AI system, establish baseline fairness metrics. Test across relevant demographic categories. Build correction mechanisms into your deployment pipeline. Train your team to recognize where bias enters data and decisions.
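To make "baseline fairness metrics" concrete, here is a minimal Python sketch of one common check: comparing false positive rates across groups, the kind of disparity at the center of the COMPAS reporting. The sample records, group labels, and the `MAX_GAP` threshold are hypothetical placeholders; the right metric and threshold depend on your own context and legal requirements.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is (group, predicted_positive, actual_positive).
    FPR = actual negatives that were flagged / all actual negatives.
    """
    flagged = defaultdict(int)    # actual negatives the system flagged
    negatives = defaultdict(int)  # all actual negatives
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

def fairness_gap(rates):
    """Largest difference in rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical audit sample: (group, flagged_high_risk, actually_high_risk)
sample = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]

MAX_GAP = 0.10  # hypothetical threshold agreed on before deployment

rates = false_positive_rates(sample)
gap = fairness_gap(rates)
deploy_ok = gap <= MAX_GAP
print(rates, round(gap, 2), "deploy" if deploy_ok else "hold and investigate")
```

The point is less the specific metric than the habit: the check runs before deployment, the threshold is written down in advance, and a failed check blocks the release rather than generating a report nobody reads.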

Pillar 2: Traceability and Accountability

When AI systems make decisions that affect human lives, you need clear audit trails. The EU AI Act now requires comprehensive logging for high-risk AI applications, including decision criteria, data sources, and human oversight points.

This isn't just about compliance, it's about learning and improvement. Organizations using tools like ModelDB and DVC (Data Version Control) can trace any AI decision back to its training data, model version, and approval process.

Key Implementation: Document model versions, training data sources, and decision criteria. Create clear ownership structures for AI outcomes. Build audit trails that survive leadership transitions and staff changes.
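As an illustration of what such an audit trail might record, here is a small Python sketch. It is not how ModelDB or DVC work internally; the field names, the example values, and the hash-chaining approach are all assumptions chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    model_version: str
    training_data_sha256: str       # fingerprint of the training snapshot
    inputs: dict
    decision: str
    owner: str                      # role accountable for the outcome
    human_reviewer: Optional[str]   # None if no human reviewed it
    timestamp: str

def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def log_decision(log: list, record: DecisionRecord) -> str:
    """Append a record, chained to the previous entry's hash."""
    prev_hash = log[-1]["entry_hash"] if log else ""
    payload = json.dumps(asdict(record), sort_keys=True)
    entry_hash = fingerprint((prev_hash + payload).encode("utf-8"))
    log.append({"payload": payload, "prev_hash": prev_hash, "entry_hash": entry_hash})
    return entry_hash

def verify(log: list) -> bool:
    """Recompute the chain; any edited or removed entry breaks it."""
    prev = ""
    for entry in log:
        expected = fingerprint((prev + entry["payload"]).encode("utf-8"))
        if entry["prev_hash"] != prev or entry["entry_hash"] != expected:
            return False
        prev = entry["entry_hash"]
    return True

# Hypothetical example entry
audit_log = []
log_decision(audit_log, DecisionRecord(
    model_version="claims-model-1.4.2",
    training_data_sha256=fingerprint(b"hypothetical training snapshot"),
    inputs={"claim_id": "C-1001", "amount": 1200},
    decision="refer_to_human",
    owner="claims-ops-lead",
    human_reviewer=None,
    timestamp=datetime.now(timezone.utc).isoformat(),
))
print(verify(audit_log))
```

Recording a role as the owner, rather than a person's name, is one way to help the trail survive staff changes.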

Pillar 3: Transparency and Explainability

Your stakeholders deserve to understand how AI affects them. This doesn't mean sharing proprietary algorithms, it means clear communication about what AI does, how it works, and what safeguards protect their interests.

Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) make complex AI decisions understandable to non-technical stakeholders. The GDPR's "right to explanation" has pushed European organizations toward more explainable AI approaches.

Key Implementation: Create plain-language explanations of your AI systems. Build explainability requirements into vendor contracts. Train your communication team to discuss AI honestly and accessibly.
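For readers curious about what sits underneath a technique like SHAP, the sketch below computes exact Shapley values for a tiny, made-up scoring function by averaging each feature's marginal contribution over every ordering of the features. It does not use the SHAP library, which relies on approximations to handle real models; the `loan_score` model, the applicant, and the baseline are hypothetical.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values for one prediction.

    Features are switched from their baseline value to the instance's
    value one at a time, in every possible order, and each feature is
    credited with the average change in the score that it caused.
    """
    features = list(instance)
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        previous_score = model(current)
        for f in order:
            current[f] = instance[f]
            score = model(current)
            totals[f] += score - previous_score
            previous_score = score
    return {f: totals[f] / len(orderings) for f in features}

def loan_score(x):
    """Hypothetical toy model with one interaction between two features."""
    score = 0.5 * x["income"] - 0.3 * x["debt"]
    if x["income"] > 5 and x["late_payments"] == 0:
        score += 1.0
    return score

applicant = {"income": 8, "debt": 4, "late_payments": 0}
baseline = {"income": 4, "debt": 4, "late_payments": 2}  # a "typical" reference case

contrib = shapley_values(loan_score, applicant, baseline)
for feature, value in sorted(contrib.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature}: {value:+.2f}")
```

In this toy case the contributions add up exactly to the difference between the applicant's score and the baseline score, which is what makes a plain-language explanation possible: "your income raised the score most, your clean payment history helped a little, and your debt level made no difference compared with a typical applicant."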

Pillar 4: Authenticity and Truthfulness

In an era of deepfakes and hallucinating chatbots, authenticity isn't just nice to have; it's essential for trust. Deepfake technology can now create convincing fake videos with minimal technical expertise, while large language models regularly produce confident-sounding but factually incorrect information.

Leading organizations implement AI content labeling standards, build safeguards against misinformation, and create rapid correction processes for AI errors. Psychological impact studies suggest that unlabeled AI content erodes trust even when the content is accurate.

Key Implementation: Label AI-generated content clearly. Build safeguards against misinformation in your AI outputs. Create processes for correcting AI errors quickly and transparently.

Pillar 5: Environmental Sustainability

AI training consumes enormous amounts of energy. Training GPT-3 produced an estimated 552 tons of CO2, equivalent to the lifetime emissions of several cars. As AI systems grow larger and more complex, their environmental impact rises sharply.

Green AI practices include using efficient architectures, renewable energy sources, and carbon accounting tools. Organizations like Google and Microsoft now publish detailed carbon footprint reports for their AI operations and commit to carbon-neutral AI training.

Key Implementation: Understand the carbon footprint of your AI systems. Choose efficient architectures over pure performance. Look to partner with vendors who prioritize green AI practices.
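A first-pass carbon estimate needs only a little arithmetic: energy used, multiplied by the carbon intensity of the electricity that supplied it. The Python sketch below shows the calculation. The input numbers are illustrative assumptions, chosen so the first result lands near the 552-ton figure quoted above; they are not measurements of any system.

```python
def training_emissions_tonnes(energy_mwh, grid_kg_co2e_per_kwh, pue=1.0):
    """Estimate training emissions in tonnes of CO2-equivalent.

    energy_mwh: electricity consumed by the training run
    grid_kg_co2e_per_kwh: carbon intensity of the electricity used
    pue: data center overhead multiplier; leave at 1.0 if the energy
         figure already includes cooling and other overhead
    """
    energy_kwh = energy_mwh * 1000
    return energy_kwh * pue * grid_kg_co2e_per_kwh / 1000  # kg -> tonnes

# Illustrative inputs (assumptions, not measurements)
estimate = training_emissions_tonnes(energy_mwh=1287, grid_kg_co2e_per_kwh=0.429)

# Same hypothetical run on a much cleaner grid
cleaner_grid = training_emissions_tonnes(energy_mwh=1287, grid_kg_co2e_per_kwh=0.05)

print(f"{estimate:.0f} t CO2e vs {cleaner_grid:.0f} t CO2e on a cleaner grid")
```

Even this rough version makes one thing visible: where a workload runs can matter as much as how big it is, which is a useful question to put to vendors.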

Pillar 6: Human Dignity and Moral Boundaries

Some decisions should never be fully automated. Some human experiences shouldn't be replaced by algorithms. This pillar is about identifying the red lines where human judgment, creativity, and relationship remain non-negotiable.

The WISE framework (Wellbeing, Integrity, Social benefit, Excellence) provides a structure for dignity impact assessments. Organizations using this approach evaluate not just whether AI can replace human tasks, but whether it should.

Key Implementation: Define what AI can and cannot do in your organization. Maintain human oversight for high-stakes decisions. Protect spaces for human creativity, connection, and moral reasoning.

V. The Implementation Gap: From Principles to Practice

The gap between AI ethics principles and actual implementation represents one of the greatest leadership challenges of our time.

Microsoft's Responsible AI case study reveals a sobering truth: organizations that successfully implement ethical AI invest 3-4 times more in training, processes, and governance than those that simply adopt ethical principles.

The implementation gap exists at multiple levels. Deloitte's research on the ROI of ethical AI found that while 91% of organizations using AI have established AI ethics principles, only 34% have implemented systematic processes to enforce them. This creates what researchers call "ethics theater": impressive frameworks that don't translate into changed behavior.


Here are a few ways to make real progress instead:

Personal AI Ethics Audit Checklist: Start with what you control. Audit your own AI usage across tools, decisions, and workflows. Document where AI influences your judgment. Identify potential bias points in your data and processes. Assess the transparency of AI tools you're already using.

Organizational Assessment Framework: Move beyond principles to measurement. Establish baseline metrics for each of the six pillars. Create stakeholder feedback mechanisms. Build ethics considerations into procurement processes. Develop incident response procedures for AI-related issues.

Vendor Evaluation Criteria: Don't assume vendors have handled ethics for you. Require transparency about training data sources. Demand explainability for high-stakes decisions. Evaluate bias testing and mitigation practices. Assess environmental impact and sustainability commitments.

Building Ethics-Aware Teams: Ethical AI isn't just an IT responsibility. Train leaders to recognize ethical implications. Empower staff to raise concerns without retaliation. Create cross-functional ethics review teams. Integrate ethics considerations into performance evaluations.

VI. Navigating Trade-offs in Real Time

Ethical AI isn't about following rules; it's about making wise decisions under uncertainty.

Every AI implementation involves trade-offs between competing values:

speed vs. safety

innovation vs. precaution

efficiency vs. equity

individual benefit vs. collective good

The speed vs. safety tension is particularly acute for mission-driven organizations. When lives are at stake, whether through humanitarian aid delivery, healthcare access, or educational opportunity, the cost of waiting can be measured in human suffering. Yet the cost of moving too quickly can be measured in the same terms.

Consider the COVID-19 contact tracing applications deployed worldwide. South Korea's aggressive AI-powered contact tracing helped control the early spread but raised significant privacy concerns. Meanwhile, privacy-focused approaches in Europe achieved better compliance but potentially less effective contact tracing. Neither approach was clearly "right"; both involved trade-offs between competing ethical values.

Decision science research provides frameworks for navigating these trade-offs systematically. Here is a framework, inspired by a Stanford study, for making these kinds of decisions. Try it out!

Speed vs. Safety: Build rapid testing and feedback loops rather than choosing between speed and safety. Deploy AI systems in limited, controlled environments before scaling. Create "escape hatches" that allow quick reversal of AI decisions.

Innovation vs. Precaution: Use staged deployment approaches that balance innovation with risk management. Invest in robust testing and monitoring systems. Build stakeholder input into innovation processes from the beginning.

Efficiency vs. Equity: Recognize that short-term efficiency gains may create long-term equity problems. Include equity metrics in efficiency calculations. Design systems that improve outcomes for everyone, not just average outcomes.

Individual vs. Collective Benefit: Use transparent decision-making processes that acknowledge trade-offs. Create mechanisms for affected individuals to understand and appeal AI decisions. Build systems that optimize for shared flourishing, not just aggregate utility.
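The staged deployment and "escape hatch" ideas above can be sketched in a few lines of Python. This is a simplified illustration, not a production rollout system; the stage sizes, the error-rate threshold, and the class name are hypothetical.

```python
import hashlib

class StagedRollout:
    """Send a limited share of cases to the AI system, with a kill switch."""

    def __init__(self, stages=(0.05, 0.25, 1.0), max_error_rate=0.02):
        self.stages = stages                  # share of cases handled by AI at each stage
        self.stage_index = 0
        self.max_error_rate = max_error_rate  # hypothetical tolerance
        self.enabled = True

    @property
    def share(self):
        return self.stages[self.stage_index] if self.enabled else 0.0

    def uses_ai(self, case_id: str) -> bool:
        """Bucket each case deterministically so it always takes the same path."""
        digest = hashlib.sha256(case_id.encode("utf-8")).hexdigest()
        bucket = int(digest[:8], 16) / 2**32  # value in [0, 1)
        return bucket < self.share

    def review(self, errors: int, decisions: int) -> str:
        """Advance, hold, or roll back based on the observed error rate."""
        if decisions == 0:
            return "hold"
        if errors / decisions > self.max_error_rate:
            self.enabled = False  # escape hatch: every case goes back to humans
            return "rolled_back"
        if self.stage_index < len(self.stages) - 1:
            self.stage_index += 1
            return "advanced"
        return "hold"

rollout = StagedRollout()
first = rollout.review(errors=1, decisions=200)     # 0.5% error rate
second = rollout.review(errors=30, decisions=1000)  # 3% error rate
print(first, second, rollout.share)
```

The design choice worth noticing is that rollback is a single switch decided in advance, so reversing course under pressure does not require a new debate about whether to do it.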

VII. Your Personal and Professional Compass

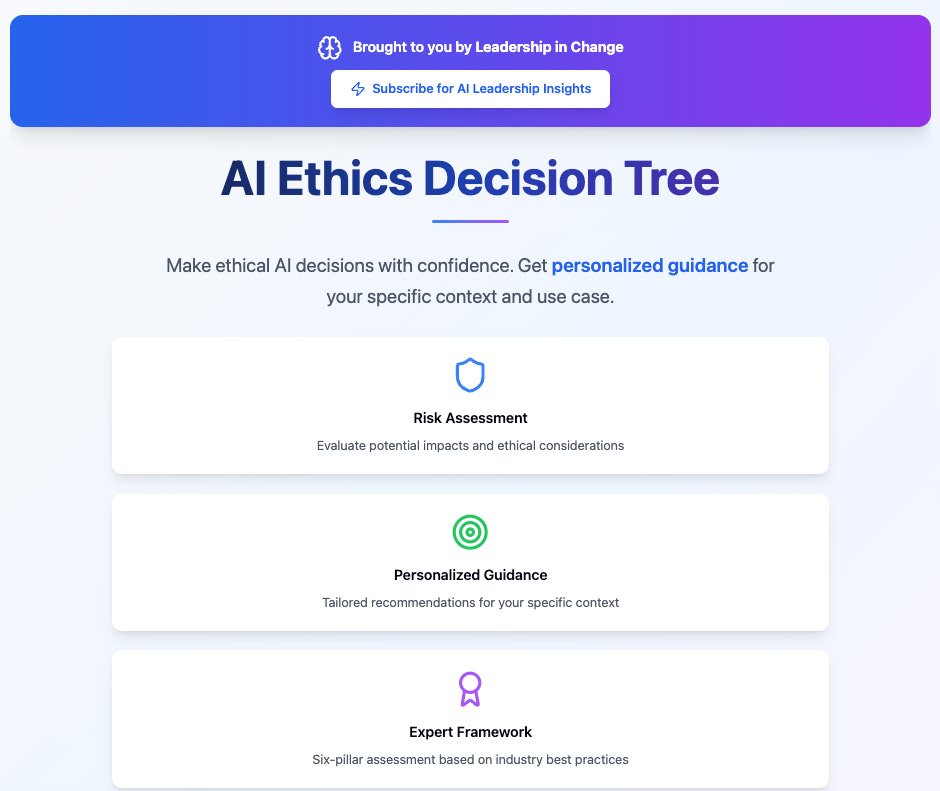

The gap between knowing ethical AI principles and applying them in daily decisions is where most leaders struggle. Generic checklists fail because they don't account for your specific context or the stakes involved in your particular AI decision.

That's why I've developed the AI Ethics Decision Tree – an interactive assessment tool that transforms abstract ethical frameworks into personalized, actionable guidance for real-world decisions.

Why You Need This Tool

When you're evaluating an AI system at 4 PM on a Tuesday, you need clear guidance that considers:

Your specific role and responsibilities

The stakes level of your decision

Your organization's unique values and context

How It Works

The tool guides you through a systematic assessment that:

Adapts questions based on whether you're a creator, entrepreneur, nonprofit leader, or corporate executive

Evaluates risk levels from low-stakes productivity tools to high-impact systems affecting livelihoods

Assesses your AI system against all six pillars of ethical implementation

Provides specific, actionable recommendations and monitoring requirements

Use it every time you're evaluating a new AI system. The few minutes you invest in systematic assessment can prevent months of dealing with unintended consequences.
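To give a feel for the kind of logic such an assessment can follow, here is a deliberately small Python sketch. It is not the Decision Tree tool itself; the questions, verdict labels, and review intervals are hypothetical and only mirror the steps described above (role, stakes, six pillars, recommendations).

```python
from dataclasses import dataclass

PILLARS = (
    "fairness", "traceability", "transparency",
    "authenticity", "sustainability", "dignity",
)

# Hypothetical role-specific follow-up questions
ROLE_QUESTIONS = {
    "creator": "Is AI-generated content labeled for your audience?",
    "entrepreneur": "Can you explain the system's decisions to a customer?",
    "nonprofit leader": "Were the communities you serve consulted?",
    "corporate executive": "Is there a named owner for AI outcomes?",
}

@dataclass
class Assessment:
    role: str
    stakes: str           # "low", "medium", or "high"
    pillar_answers: dict  # pillar -> True if a safeguard is in place

def assess(a: Assessment) -> dict:
    """Turn answers into a verdict, a list of gaps, and a review schedule."""
    gaps = [p for p in PILLARS if not a.pillar_answers.get(p, False)]
    if a.stakes == "high" and gaps:
        verdict = "pause"
    elif gaps:
        verdict = "proceed_with_conditions"
    else:
        verdict = "proceed"
    review_days = {"low": 180, "medium": 90, "high": 30}[a.stakes]
    return {
        "verdict": verdict,
        "gaps": gaps,
        "review_every_days": review_days,
        "role_question": ROLE_QUESTIONS.get(a.role, "Who is affected by this system?"),
    }

answers = {p: True for p in PILLARS}
answers["fairness"] = False  # no bias testing done yet
result = assess(Assessment(role="corporate executive", stakes="high", pillar_answers=answers))
print(result["verdict"], result["gaps"], result["review_every_days"])
```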

VIII. Building the Movement: Ethics as Competitive Advantage

The most successful AI implementations aren't just ethical, they're more sustainable, more trusted, and more successful than their "move fast and break things" competitors. Market research consistently shows that consumers, employees, and investors increasingly prefer organizations with strong ethical AI practices.

The business case for ethical AI leadership is compelling. Organizations with robust ethical frameworks experience 23% higher employee retention, 19% better customer satisfaction, and 15% stronger financial performance compared to those without such frameworks. This isn't correlation, it's the result of building systems that stakeholders trust and support.

Long-term Sustainability vs. Short-term Gains: The social media industry's experience demonstrates the cost of prioritizing short-term engagement over long-term social impact. Facebook's stock price dropped 20% following the Cambridge Analytica scandal, and ongoing regulatory scrutiny continues to create business uncertainty.

Leading Change in Your Sphere of Influence: Start where you are, with what you have. Model ethical AI decision-making in your own organization. Share lessons learned with peers and networks. Participate in industry conversations about standards and best practices. Support policies that promote ethical AI development.

Network effects research suggests that ethical practices spread through professional and social networks more rapidly than regulatory requirements. When respected leaders adopt ethical AI practices, their peers are more likely to follow, creating positive cycles of influence.

IX. The Guardrails We Build Today

The construction site metaphor that opened this article isn't just about AI development; it's about leadership.

Every day, leaders like you decide whether to install safety systems before problems emerge or to "add them later" after the damage is done.

The guardrails we build today will determine whether AI becomes a tool for human flourishing or a force that undermines the leadership principles we've spent our careers developing. This isn't a theoretical choice; it's a practical decision that affects real people in real time.

The AI revolution is happening with or without ethical guardrails. The question is whether leaders like you will shape that revolution intentionally or let it shape us by default. Every AI decision you make is a vote for the kind of future you want to create.

X. Reading Corner

If you are interested in safe and expert AI use to maximize your impact as a leader, here are a few posts you may want to check out:

Michael Simmons’s 10,000x Knowledge worker: How History’s Forgotten Productivity Secret Reveals AI’s True Potential

Claudia Faith’s The New Era of Business with AI Is Here — And It’s Built for Writers, Creators, and Solopreneurs

Johanna Dorris’s We Didn’t Train AI to Care. We Taught It What Care Looks Like.

Jose Antonio Morales’s The Fear of Collective Self-Destruction

If You Only Remember This:

• AI ethics isn't impossible; it's practical leadership applied to powerful tools

• The six pillars provide a systematic approach to implementation, not just principles

• Ethical AI creates competitive advantages through stakeholder trust and sustainable practices

• The guardrails we build today determine whether AI serves human flourishing or self-interest

• Every leader has the opportunity to shape AI's development through intentional decision-making

Agree? Disagree? Did I miss something crucial? Let me know! Comment below.

Your framing of AI development without ethical "guardrails" is spot on—and your Decision Tree tool is a great practical starting point. We need more voices bringing clarity and urgency to this conversation.

Really appreciate this deep approach to AI ethics, Joel. Your list is so thorough!

It’s true, high‑level principles can feel noble, but the real challenge is in applying them practically.