AI Hallucinations and My Framework to Catch Them

The 2-Minute Framework That Catches Errors Before They Damage Years of Trust-Building



Have you ever followed your GPS straight to an empty parking lot?

Last month, I drove pretty far to a restaurant… that no longer existed. But the GPS? Completely confident. Turn left. Proceed 500 feet. You have arrived at your destination. An empty lot.

Your AI tools do the same thing. They’ll cite studies that don’t exist, quote experts who never said those words, and generate statistics that sound perfect because they are perfect. Perfectly made up.

The difference? When GPS fails, you disappoint your family for one night. When AI hallucinations make it into your leadership communications, you damage years of trust.

That empty parking lot? That’s an AI hallucination. And when one slips into your decision-making or public communication, you’re not just embarrassing yourself. You’re potentially undermining your credibility with your board, your team, or your donors.

AI hallucinations are when these tools make stuff up but present it with the same confidence as real information. Think of it like your GPS confidently directing you to that empty lot. The AI isn’t trying to lie. It’s predicting word patterns, not checking facts. It’s a sophisticated pattern-matcher, not a fact-checker.

And this costs real money. Air Canada lost a case after its chatbot hallucinated a refund policy. The customer followed the AI’s advice. Air Canada said the policy didn’t exist. A Canadian tribunal ruled in favor of the customer. One hallucination, hundreds of dollars in damages, and headlines around the world.

How do you avoid this? Make sure you have a verification system. You don’t need complex systems or IT departments. You need three questions and two minutes.

In this article, you’ll discover:

The three verification mistakes that turn AI assistance into reputation risk

A 2-minute framework that catches errors before they reach your audience

A practical starting point you can implement this week

Mistake #1: Not Recognizing That AI Lies With Complete Confidence

First mistake? Thinking AI tells intentional lies. It doesn’t. But it lies with complete confidence, which is worse.

I can picture how easily a nonprofit director could include AI-generated grant statistics in a major foundation proposal. The foundation fact-checks, and the grant is lost. And not only could you lose a grant, but one hallucination could tarnish your organization’s entire reputation.

The most dangerous AI error is the one that sounds completely reasonable.

Here’s what keeps me up at night sometimes: confirmation hallucinations. These are AI errors that confirm what you already believe. They’re the hardest to catch because they feel right. When AI tells you, “Studies show 85% of leaders agree with your approach,” your brain wants to believe it. That’s when verification matters most.

I almost published an article with a fabricated statistic last month. The number was perfect. It supported my argument beautifully. That’s exactly why I double-checked it. Good thing, because it didn’t exist.

Here’s my approach: I take any statistic and search it independently. For example, if ChatGPT tells me “73% of nonprofits saw increased donations after implementing AI tools,” I use a research-focused AI tool like Perplexity (which provides actual citations and links to source material) to search “nonprofit AI adoption donation statistics” to see if this number exists in real research.

The fix? Simple. Never present AI-generated statistics without this 30-second verification step. Ever.

Mistake #2: Treating Every AI Interaction the Same

The second mistake is treating all AI interactions the same, whether you’re drafting a casual email or making a policy decision affecting your entire organization.

A staffer recently used AI to find precedents for one of a law firm’s cases. The staffer thought it was harmless, and the research made it all the way to the lawyer, but the cited cases were made up. The staffer was fired, and the firm’s reputation was destroyed.

Not all AI outputs carry the same risk. Your verification should match your stakes.

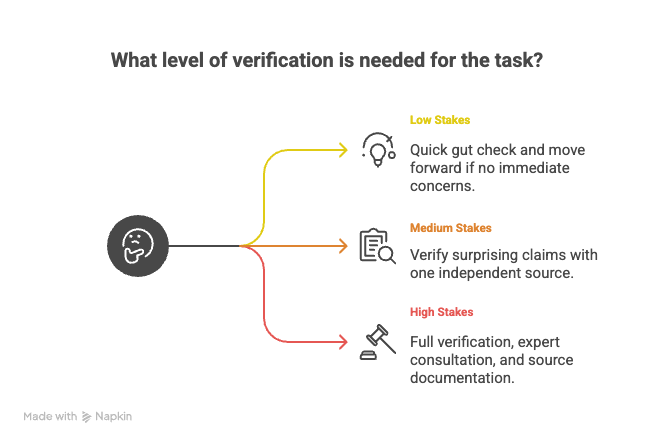

Here’s how I think about it:

Low Stakes (internal emails, brainstorming): Quick gut check. If something seems off, verify. Otherwise, move forward. (30 seconds)

Medium Stakes (external communications, team decisions): Always verify surprising claims with at least one independent source. (2-3 minutes)

High Stakes (policy changes, public statements, grant proposals): Full verification plus expert consultation. Document your sources. (15-30 minutes)

Use this chart to remember:

Match your verification intensity to the potential damage if you’re wrong.

When you’re making high-stakes calls that could affect your entire organization, invest 15-30 minutes. Your reputation took years to build. An AI hallucination can damage it in seconds.
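The three tiers above can be written down as a small lookup, which some teams may find easier to share than a chart. This is a minimal sketch, not a definitive tool: the names `Stakes`, `VerificationPlan`, `CONTENT_STAKES`, and `plan_for` are hypothetical, and the choice to treat unknown content types as high stakes is my assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Stakes(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class VerificationPlan:
    steps: tuple[str, ...]
    time_budget: str


# Steps and time budgets are taken from the three tiers described above.
PLANS = {
    Stakes.LOW: VerificationPlan(
        ("quick gut check; verify only if something seems off",),
        "30 seconds",
    ),
    Stakes.MEDIUM: VerificationPlan(
        ("verify surprising claims with at least one independent source",),
        "2-3 minutes",
    ),
    Stakes.HIGH: VerificationPlan(
        ("full verification", "expert consultation", "document your sources"),
        "15-30 minutes",
    ),
}

# Example content types from the tiers above, mapped to their stakes level.
CONTENT_STAKES = {
    "internal email": Stakes.LOW,
    "brainstorming": Stakes.LOW,
    "external communication": Stakes.MEDIUM,
    "team decision": Stakes.MEDIUM,
    "policy change": Stakes.HIGH,
    "public statement": Stakes.HIGH,
    "grant proposal": Stakes.HIGH,
}


def plan_for(content_type: str) -> VerificationPlan:
    """Return the verification plan for a content type.

    Assumption: anything not in the table is treated as HIGH stakes,
    so an unfamiliar case gets more checking rather than less.
    """
    stakes = CONTENT_STAKES.get(content_type.strip().lower(), Stakes.HIGH)
    return PLANS[stakes]


if __name__ == "__main__":
    plan = plan_for("Grant proposal")
    print(plan.time_budget)
    for step in plan.steps:
        print("-", step)
```

Defaulting to the strictest tier is a design choice: an over-checked email costs a few minutes, while an under-checked proposal can cost the grant.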

Mistake #3: Random Verification (Sometimes You Check, Sometimes You Don’t)

The third mistake is inconsistent verification. Sometimes you check AI output, sometimes you don’t, depending on how busy you are.

Inconsistent verification is almost worse than no verification, because it creates false confidence.

A startup founder told me he “usually” fact-checks AI responses. Usually meant when he remembered. When he had time. When something seemed obviously wrong. That’s like jogging across thin ice: carefree, but risking everything with each step.

You need a system, not good intentions.

After a year of testing dozens of verification methods, I found most took too long or were too complicated for my team to actually use. This one stuck because it takes under two minutes and has caught every major error before it reached my audience.

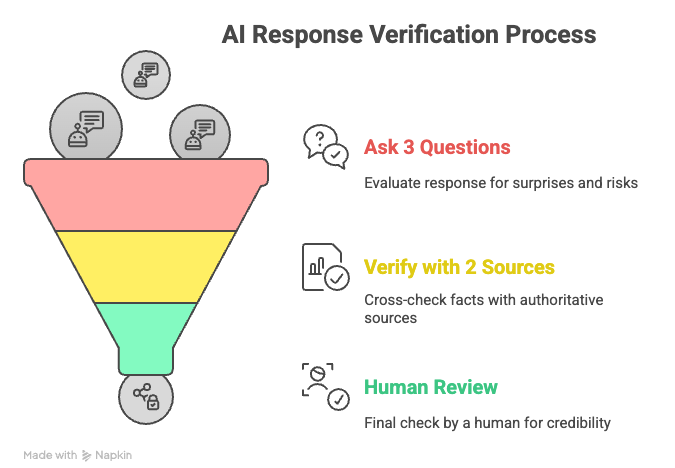

The 3-2-1 Rule: Your Verification System

Here’s the framework that addresses all three mistakes. I call it the 3-2-1 Rule.

Here’s how I remember it:

3 Questions = RED LIGHT (stop and think)

2 Sources = YELLOW LIGHT (verify before proceeding)

1 Human = GREEN LIGHT (safe to share)

Let me break it down:

3 Questions I Ask About Every AI Response:

Is this surprising or too convenient?

Can I verify the most important claims quickly?

What happens if this information is wrong?

These three questions take 15 seconds. In my experience, they catch roughly 80% of potential problems before you waste time on full verification.

2 Sources for Any Facts That Matter:

I use Perplexity AI (a research-focused tool that provides actual citations) or, recently, Claude, to verify statistics or studies. Then I cross-check surprising information by opening the original source at its URL and confirming the claim is actually there.

This is where you match verification intensity to your stakes. Internal email? Maybe skip this unless the 3 questions raised red flags. Board presentation? Always do this. Grant proposal? Do this plus the next step.

1 Human Review for High-Stakes Content:

Someone else reads it before it goes public. For internal decisions, I ask myself: “Would I bet my reputation on this?”

The human review catches what the questions miss. Because AI doesn’t have credibility to lose. You do.
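For readers who think in checklists, the rule can be sketched as a tiny traffic-light function. Treat this as one possible reading, not the official definition: the `Review` fields and the `light` function are hypothetical names, and the exact thresholds (any raised flag requires two sources; anything costly if wrong requires a human) are my interpretation of the steps above.

```python
from dataclasses import dataclass


@dataclass
class Review:
    surprising_or_convenient: bool  # Question 1: surprising or too convenient?
    quickly_verifiable: bool        # Question 2: can I verify key claims quickly?
    costly_if_wrong: bool           # Question 3: does it hurt if this is wrong?
    independent_sources: int = 0    # sources checked so far
    human_reviewed: bool = False    # has someone else read it?


def light(review: Review) -> str:
    """Return 'red', 'yellow', or 'green' for an AI response.

    Assumed interpretation of the 3-2-1 Rule:
    - red: a question raised a flag and fewer than 2 sources back the claim
    - yellow: sources check out, but high-stakes content lacks a human review
    - green: safe to share
    """
    flagged = (
        review.surprising_or_convenient
        or not review.quickly_verifiable
        or review.costly_if_wrong
    )
    if flagged and review.independent_sources < 2:
        return "red"
    if review.costly_if_wrong and not review.human_reviewed:
        return "yellow"
    return "green"


if __name__ == "__main__":
    # Low-stakes internal email where nothing seems off.
    print(light(Review(False, True, False)))
    # Surprising, high-stakes claim with no sources yet.
    print(light(Review(True, True, True)))
    # Same claim after two sources, before a colleague reads it.
    print(light(Review(True, True, True, independent_sources=2)))
```

The point of encoding it is consistency: the same inputs always give the same light, which is exactly what "usually" checking does not.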

Can You Spot the Hallucination?

Let’s practice. Three statements below. Two are verified. One is AI-generated fiction:

67% of nonprofits reported AI reduced administrative workload by 40%

Leaders using AI spend an average of 8 hours per week on verification

Organizations with formal AI policies saw 52% fewer credibility incidents

Take a moment.

Which one feels off? Answer at the bottom of the post.

The 3-2-1 Rule in Real Time

Let me show you how this works with a real example.

AI tells me: “Studies show that 85% of church leaders plan to implement AI tools in the next year.”

3 Questions:

This seems high and very specific. (Red flag #1)

Can I find this study? (Need to check)

If I’m wrong, I look uninformed to church leaders. (High stakes)

2 Sources:

I search Perplexity: “church leaders AI adoption statistics 2024”

I find surveys from Barna Group and Hartford Institute showing 40-60% interest, not 85% commitment

The AI hallucinated a more dramatic number

1 Human:

I mention the discrepancy to a colleague who tracks church tech trends

He confirms the 40-60% range and shares two additional sources

Result: I use the verified 40-60% range instead of the fabricated 85%. My credibility stays intact.

Total time? Three minutes. Potential damage avoided? Significant.
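The comparison in this example can also be written as a short check. This is a rough sketch under stated assumptions: `SourcedRange` and `check_claim` are hypothetical names, and because the example above gives only the combined 40-60% range, the same range is assigned to both sources purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SourcedRange:
    source: str
    low: float   # lowest percentage the source supports
    high: float  # highest percentage the source supports


def check_claim(claimed: float, sources: list[SourcedRange]) -> str:
    """Compare an AI-claimed percentage against independently sourced ranges.

    Returns 'unverified' with fewer than two sources, 'supported' if the
    claim falls inside every source's range, otherwise 'contradicted'.
    """
    if len(sources) < 2:
        return "unverified"
    if all(s.low <= claimed <= s.high for s in sources):
        return "supported"
    return "contradicted"


if __name__ == "__main__":
    # Illustrative only: per-source figures are not given in the example,
    # so both sources carry the combined 40-60% range.
    sources = [
        SourcedRange("Barna Group", 40, 60),
        SourcedRange("Hartford Institute", 40, 60),
    ]
    print(check_claim(85, sources))
    print(check_claim(50, sources))
```

A check like this only compares numbers you have already looked up yourself. It does not replace reading the sources, and it says nothing about whether "interest" and "plan to implement" measure the same thing.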

Building Your 3-2-1 Habit

Verification isn’t paranoia. It’s the new professional standard.

Start this week by applying the 3-2-1 Rule to just one AI interaction daily. After two weeks, it becomes automatic.

Week 1: Practice the 3 questions on every AI interaction

Week 2: Add the 2-source verification for any facts you plan to share

Week 3: Implement the 1-human review for external communications

Week 4: Teach the system to one other person

By week four, you’re not just protecting yourself. You’re building organizational muscle memory.

Now, if you are looking to go further, use the resources and guides I created in the Premium Member Hub. Used correctly, these resources can save you 5-10 hours and hundreds of dollars per week.

If You Only Remember This:

AI doesn’t lie on purpose, but it lies with confidence. The 3 questions catch this before it reaches your audience

Match verification intensity to decision stakes. Casual emails need different checks than policy decisions

Make verification systematic, not random. Inconsistent checking creates false security

The most dangerous AI errors sound completely reasonable. That’s why the 3-2-1 Rule works

Is this something you’ll try? Or do you have another verification system you like to use?

Answer to the quiz:

Actually, all 3 are made up, but they all sound great. Don’t they?

PS: Many subscribers get their Premium membership reimbursed through their company’s professional development budget. Use this template to request yours.

Let’s Connect

I love connecting with people. Please use the following links to connect, to collaborate if you have an idea, or just to engage further:

LinkedIn / Community Chat / Email / Medium

But the question that arises is: why does AI hallucinate? Not just one particular LLM, but all of them, in every version?

If we have to police the output of these AI models for every piece of content they generate for publication, we end up wasting time instead of saving it, and saving time is the key value proposition that AI promises.

AI doesn’t lie; it hallucinates confidently. The 3-2-1 Rule is a simple, practical framework leaders can use to catch errors before they damage trust. Must-read for anyone relying on AI in decision-making.